Azure Data Factory Training in Hyderabad with 100% Placement Assistance

- Expert Trainer with 15+ Years of Experience in Azure cloud

- Lifetime access to recordings of the live sessions

- More focus on practical implementation

- Covers modules such as Microsoft Azure, Data Lake, and Databricks

- 2 months Advanced Azure Cloud training programme

- Interview questions + interview guidance

OFFER: Pay once and attend the current batch plus one additional batch free of cost.


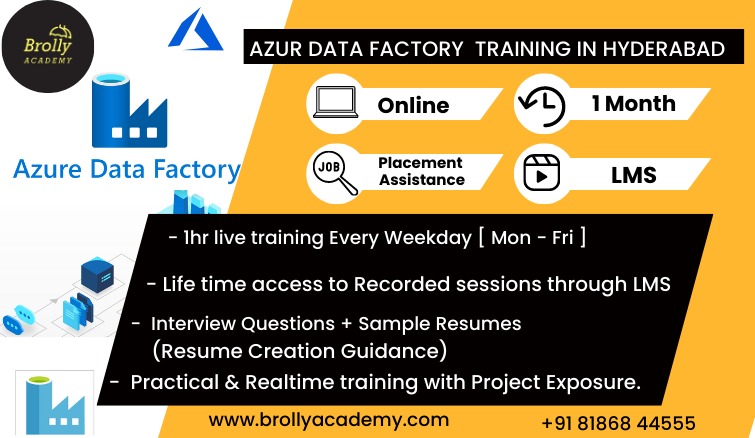

Batch Details

| Detail | Information |
| --- | --- |
| Trainer Name | Mr. Karthikeyan |
| Trainer Experience | 15+ Years |
| Next Batch Date | 28 February 2025 (07:00 PM IST) |
| Training Mode | Online Training |
| Course Duration | 2 Months |
| Call us at | +91 8186844555 |
| Email us at | brollyacademy@gmail.com |
| Demo Class Details | Enroll for a free demo class |


Course Contents

- Introduction to Microsoft Azure Data Factory

- Azure Data Factory Architecture

- Creating and Managing Data Pipelines

- Azure Data Factory Monitoring and Management

- Types of Storage Accounts

- General-purpose v2 (GPv2)

- General-purpose v1 (GPv1)

- Blob Storage Account

- FileStorage

- BlockBlobStorage

- Azure SQL Database

- Ideal Use Cases

- Data Ingestion

- Data Transformation

- Data Movement and Orchestration

- Cloud-Based Data Integration

- Data Movement and Connectivity

- Data Transformation

- Orchestration and Automation

- Integration with Azure Ecosystem

- Monitoring and Debugging

- Scalability and Performance

- Security and Compliance

- Cost-Effectiveness

- Flexible Development Options

- Serverless Workflow Automation

- Seamless Integration with ADF

- Connectivity with 300+ Services

- Conditional Logic and Control Flow

- Unified Analytics Platform

- Seamless Integration with ADF

- Advanced Data Transformation and Processing

- Support for Machine Learning and AI

- Real-Time and Batch Processing

- Scalability and Performance

- Monitoring and Debugging

- Security and Compliance

- Data Encryption

- Managed Identities and Authentication

- Requirements Gathering and Analysis

- Set Up Data Sources and Azure Resources

- Overview of Power BI

- Connecting Power BI to Data Sources

- Creating Data Models in Power BI

- Connecting to Real-Time Data


Key Points

Looking for the best Azure Data Factory training in Hyderabad? Brolly Academy offers industry-relevant training that gives you a complete understanding of Azure Data Factory (ADF) and hybrid data orchestration. With over 400 students trained and 250+ successful placements in the last 6 months, we are a trusted choice for those aiming to grow in cloud-based data management and Azure Data Engineering.

- In-depth Course Material : Our training covers everything from data orchestration and ingestion to mastering ADF pipelines and Azure architecture. You’ll learn how to design, publish, and trigger ADF pipelines with expert guidance, ensuring you’re ready for real-world data challenges.

- Hands-on Learning : With 70% practical and 30% theoretical training, you'll gain the experience needed to excel in Azure Data Factory, including working with Azure storage containers and implementing ETL (Extract, Transform, Load) processes.

- Self-paced Learning : Access 180+ recorded sessions of the Azure Data Factory course and master key concepts at your own pace. This flexible learning approach ensures you can learn according to your schedule.

- Free Demo Session & Real-time Scenarios : Join our free demo session to get a feel for the course before committing. You'll also have access to 15+ real-time scenarios to enhance your skills and practical knowledge.

- Affordable Pricing : We offer competitive prices for the Microsoft Azure Data Factory training in Hyderabad, with different fee structures to suit your needs, so you can get quality ADF coaching without financial stress.

- Job Assistance & Placement : Brolly Academy is committed to your career success. With our Azure Data Engineer training and job assistance program, you’ll receive help with interviews and placement opportunities. We provide sample interview questions and help you prepare to land a rewarding role in the field.

- ADF Certification : On completing the course, you will receive a course completion certificate for the Microsoft Azure Data Factory Certification Course in Hyderabad, recognized by top companies, giving you a competitive edge in the job market.

- Advanced Training : Our advanced Azure Data Factory course syllabus teaches key topics to keep your skills up-to-date with industry trends. The program focuses on practical skills to help you succeed in Azure data engineering, ensuring you're ready for the challenges in this field.

- Azure Data Factory Basics to Advanced : From data extraction and transformation to load processes, you'll gain expertise in creating data workflows in Azure.

- Graphical Interface : Azure Data Factory’s user-friendly graphical interface makes it easy to design data workflows without requiring extensive coding skills, though scripting is covered for complex scenarios.

- Live Practical Sessions : Enhance your learning with live practical sessions that give you hands-on experience working with Azure tools and storage systems.

- Course Duration & Fees : Our Microsoft Azure Data Factory course duration is flexible, allowing you to complete the training at your own pace. For specific details on the Azure Data Factory training price and fees, please contact us directly. We offer various packages that ensure affordable learning without compromising on quality.

Get Started Today!

Don’t miss the opportunity to join the best Azure Data Factory training in Hyderabad. Whether you're a beginner looking to learn the basics of cloud data engineering or a professional aiming to upgrade your skills, our program is designed to meet your needs.

Contact Brolly Academy today to enroll in the Azure Data Factory online training in Hyderabad and take the first step toward a successful career in cloud data management!

Overview of Best Azure Data Factory training in Hyderabad

About

How can you learn Azure Data Factory? If you’re looking for Azure Data Factory training in Hyderabad, Brolly Academy offers practical training to build your skills in cloud-based data integration and ETL. The course is suitable for all levels and combines hands-on experience with in-depth knowledge. We provide both online and offline learning options, along with details on certification costs, making Brolly Academy a top choice among Azure Data Factory training institutes in Hyderabad.

- Up-to-date curriculum: Learn data ingestion, transformation, and advanced Azure services. Topics include creating pipelines, working with Azure Data Lake, and using the Azure Pricing Calculator.

- Industry Experts as Trainers: Our certified trainers provide practical insights and break down complex concepts for a thorough understanding of Azure Data Factory.

- Hands-on Learning: Gain confidence by working on real-world exercises, creating pipelines, and integrating ADF with other Azure services.

- Placement Assistance & ADF Certification: Receive a recognized Azure Data Factory certification in Hyderabad and benefit from our placement assistance program to enhance your job prospects.

- Flexible Learning Options: Choose from self-paced or instructor-led sessions. Our course duration is 8 weeks with weekend and weekday batches available.

- Azure Data Factory course duration: 8 weeks (weekend/weekday batches).

- Training Location: Available in Hyderabad or online.

- Fees & Pricing: Affordable pricing. Contact us for a detailed fee structure.

- Free Training & Demo Sessions: Experience our teaching quality through free Azure Data Factory demo sessions.

- Other Courses: We also provide Azure DevOps training and many other cloud courses.

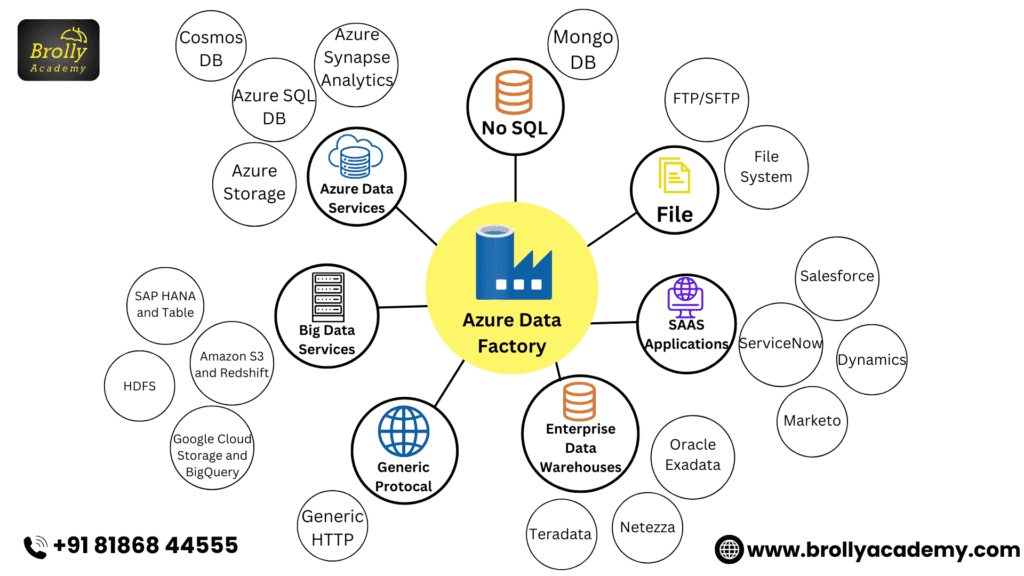

What is Azure Data Factory?

Azure Data Factory (ADF) is a fully managed service provided by Microsoft for data integration, movement, and transformation. It enables the automation of Extract, Transform, and Load (ETL) or Extract, Load, Transform (ELT) operations across cloud and on-premises data sources. ADF allows organizations to efficiently move and process data from various sources to destinations like data lakes, warehouses, or analytics platforms for use in analytics, machine learning, and business intelligence.

- Security and Compliance : Built-in security features such as encryption, role-based access, and compliance with standards like GDPR and HIPAA.

- Monitoring and Management : Built-in tools for tracking pipeline performance, setting alerts, and debugging issues through the Azure Portal.

Key Features of Azure Data Factory

- ETL and ELT : Extracts data from sources, transforms it with business logic or cleansing, and loads it to destinations such as data lakes or warehouses.

- Data Orchestration : Automates workflows and schedules data pipelines for movement and transformation.

- Hybrid Data Integration : Connects on-premises and cloud-based data sources, including databases, APIs, and file systems.

- Data Flow Transformation : A visual interface for building and transforming data pipelines with operations like joins, aggregations, and filters.

- Scalability : On-demand scaling to handle large data volumes with minimal infrastructure management.

- Integration Runtime : Offers three types of runtime (Azure IR, Self-hosted IR, Azure-SSIS IR) for flexible data movement across environments.
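To make the pipeline idea concrete, here is a minimal sketch of how a pipeline with a single Copy activity can be described. It builds a Python dictionary shaped like the JSON that ADF uses for pipeline definitions. The pipeline, activity, and dataset names are hypothetical, and the source and sink types are just one possible pairing (a delimited text file copied into Azure SQL), not the only way to configure a copy.

```python
import json


def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return a dict shaped like an ADF pipeline definition with one Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyRawToStaging",  # hypothetical activity name
                    "type": "Copy",
                    # Datasets are referenced by name, not embedded in the pipeline.
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }


# Hypothetical names used purely for illustration.
pipeline = build_copy_pipeline("DailySalesLoad", "SalesCsvDataset", "SalesSqlDataset")
print(json.dumps(pipeline, indent=2))
```

In practice the same structure is usually produced by the visual designer, so you rarely write it by hand; seeing it as data simply helps when reviewing or version-controlling pipelines.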

Where is Azure Data Factory Used?

What is the purpose of Azure Data Factory? Azure Data Factory (ADF) is used to automate data movement, transformation, and orchestration across various sources and destinations. It is widely employed in large-scale data workflows, ETL processes, and integration tasks. Here are its primary use cases:

- Data Migration

- Migrating legacy or on-premises data to the cloud, including Azure Synapse Analytics.

- ETL and Data Transformation

- Extracting, transforming, and loading data for analysis, including complex transformations like joins, filters, and cleansing.

- Big Data Processing

- Ingesting structured, semi-structured, and unstructured data into Azure Data Lake Storage for big data analytics with tools like Azure Databricks.

- Real-Time Data Integration

- Integrating real-time data streams for immediate processing and insights using services like Event Hubs, IoT Hub, or Kafka.

- Data Warehousing

- Loading data into Azure Synapse Analytics for analytical processing and periodic data refreshes.

- Data Orchestration and Scheduling

- Automating and scheduling data workflows based on triggers or specific intervals.

- Business Intelligence

- Integrating and transforming data for reporting and analytics in Power BI.

- Data Backup and Archival

- Automating data backups and long-term storage in the cloud for compliance and disaster recovery.
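The extract, transform, and load steps mentioned in the use cases above can be illustrated with a small, self-contained sketch. This is plain Python rather than ADF itself, and the sample orders, customer names, and the in-memory "warehouse" list are invented for the example. It shows the three operations named above: a join (orders to customers), a filter (dropping rows with unusable amounts), and cleansing (trimming and converting text to numbers).

```python
def extract():
    """Return sample source data (made up for this illustration)."""
    orders = [
        {"order_id": 1, "customer_id": 10, "amount": " 250.0 "},
        {"order_id": 2, "customer_id": 11, "amount": "n/a"},
        {"order_id": 3, "customer_id": 10, "amount": "99.5"},
    ]
    customers = {10: "Asha", 11: "Ravi"}
    return orders, customers


def transform(orders, customers):
    """Cleanse amounts, filter out invalid rows, and join in customer names."""
    cleaned = []
    for row in orders:
        try:
            amount = float(row["amount"].strip())  # cleansing: trim and convert
        except ValueError:
            continue  # filter: skip rows whose amount cannot be parsed
        cleaned.append(
            {
                "order_id": row["order_id"],
                "customer": customers.get(row["customer_id"], "unknown"),  # join
                "amount": amount,
            }
        )
    return cleaned


def load(rows, destination):
    """Append transformed rows to the destination and report how many were loaded."""
    destination.extend(rows)
    return len(rows)


warehouse = []  # stands in for a data lake or warehouse table
orders, customers = extract()
loaded = load(transform(orders, customers), warehouse)
print(f"Loaded {loaded} rows")
```

In ADF, the same steps would typically be configured as Copy activities and Data Flows rather than written as functions, but the order of operations is the same.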

Azure Data Factory Training Hyderabad at Brolly Academy

Brolly Academy offers an Azure Data Factory course in Hyderabad to build essential data engineering skills. Our program includes Azure Data Factory online training in Hyderabad, providing practical learning on data integration and pipeline management.

Course highlights in Brolly Academy

- Introduction to Azure Data Factory

- Learn the capabilities of ADF and how to navigate the Azure Portal for ETL processes.

- Data Storage & Management

- Master integration with Azure Data Lake, Blob Storage, and SQL Database, focusing on security and data retention.

- Data Integration

- Automate ETL pipelines, create complex data transformations, and monitor pipelines effectively.

- Real-time Data Processing

- Ingest and process real-time data from sources like Event Hubs, and integrate with Power BI for analytics.

- Big Data Analytics

- Orchestrate data flows with Azure Databricks for big data and machine learning.

- Security & Monitoring

- Implement security measures using Key Vault and RBAC, and monitor pipelines with Azure Monitor.

- Certification Preparation

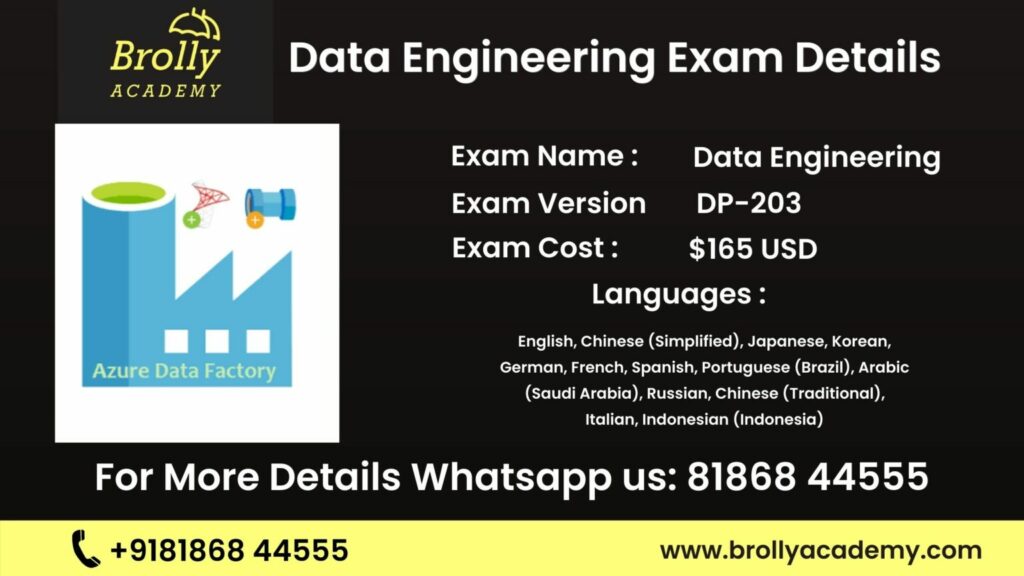

- Prepare for the DP-203 certification with hands-on labs and practice tests.

- Flexible Options

- Weekday/weekend batches, free demo sessions, and affordable pricing.

- Time needed to learn Azure Data Factory: 6-8 weeks

Fees: Competitive pricing, with discounts available.

Modes of training

Modes of Azure Data Factory training in Hyderabad

Online Training

- Basic to advanced level

- Daily recorded videos

- Live project included

- WhatsApp Group Access

- 100% Placement assistance

- Interview Guidance

Video Course

- Basic to advanced level

- Lifetime Video Access

- Doubt Clearing Session

- WhatsApp Group Access

- Course Material Dumps

- Interview Guidance

Corporate Training

- Basic to advanced level

- Daily recorded videos

- Live project included

- Doubt Clearing Sessions

- Material Dumps Access

- WhatsApp Group Access

Why Choose us

Azure Data Factory Training in Hyderabad

Experienced Trainers

Our training is led by certified Azure Data Factory experts with over 15 years of industry experience. Whether you are a beginner or an advanced professional looking to upskill, our trainers are passionate about helping you design, manage, and operate efficient data pipelines in Azure.

Hands-on Practical Lab Sessions

We focus on hands-on learning, ensuring you gain a deep understanding of both theory and practice. Our course is packed with extensive lab sessions to equip you with the practical skills needed to design, build, and manage scalable ETL pipelines and data integration solutions. You will gain real-world experience to tackle industry challenges with confidence.

Comprehensive Curriculum

Our Azure Data Factory course is regularly updated by seasoned instructors to include the latest tools and techniques. The curriculum is structured with module assignments and quizzes to reinforce learning and track your progress, helping you master every aspect of ADF. Our Azure Data Factory training fees in Hyderabad are also affordable.

Unlimited Learning Management System (LMS) Access

Gain unlimited access to course materials, video recordings, and our robust learning management system (LMS). With lifetime access to all resources, you can revisit the content anytime to refresh your knowledge or practice your skills.

Job Placement Assistance

Benefit from our dedicated placement support, which includes access to top interview questions, mock interviews, resume assistance, and interview coaching. We prepare you to confidently pursue opportunities at leading companies, helping you navigate your career path in the Azure Data Factory domain.

Certification Preparation

Our comprehensive training prepares you for the Azure Data Factory certification exam with hands-on labs, real-world scenarios, and interactive exercises. We provide everything you need to gain the knowledge and confidence to earn your certification and accelerate your career.

Market trend

1. Global Market Trends (USD)

- Market Size for Cloud Data Integration:

- The global cloud data integration market is projected to reach $21.6 billion USD by 2025, growing at a CAGR of 13.8% from 2020-2025.

- Azure Data Factory is a significant player in this market, capturing a substantial share due to its scalability and flexibility for ETL processes.

- Azure Data Factory Training & Upskilling:

- With rising demand, training for Azure Data Factory has grown exponentially, with the market for cloud skills training in India projected to reach ₹4,500 crores by 2024.

- Certifications in Azure Data Factory are becoming more valuable, with salaries for certified ADF professionals in India ranging

- Adoption by Indian Enterprises:

- Over 35% of Indian enterprises are currently leveraging Azure Data Factory for cloud-based data integration, expected to grow to 50% by the end of 2024.

- The BFSI, healthcare, and retail sectors are leading adopters, with investments in ADF-based solutions reaching over ₹2,000 crores.

2. Market Trends in India (INR)

- Cloud Data Integration Market in India:

- The Indian cloud data integration market is expected to grow from ₹12,000 crores in 2023 to ₹19,500 crores by 2025, at a CAGR of 15.5%.

- Azure Data Factory’s demand in India has seen a sharp rise due to increased cloud adoption among IT and non-IT sectors.

- Azure Data Factory Training & Upskilling:

- With rising demand, training for Azure Data Factory has grown exponentially, with the market for cloud skills training in India projected to reach ₹4,500 crores by 2024.
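For readers who want to sanity-check growth figures like these, the sketch below applies the standard compound annual growth rate (CAGR) formula, (end / start) ^ (1 / years) - 1, to the India market sizes quoted above (₹12,000 crores in 2023 and ₹19,500 crores in 2025). The two-year period is an assumption taken from those two dates.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures in crores of rupees, taken from the text above; 2023 to 2025 is 2 years.
implied = cagr(12_000, 19_500, 2)
print(f"Implied CAGR: {implied:.1%}")  # prints "Implied CAGR: 27.5%"
```

The implied rate of roughly 27.5% is higher than the 15.5% quoted, so the quoted CAGR may be based on a different period or base year; treat these market figures as approximate.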

3. Salary Trends for Azure Data Factory Professionals

- Globally (USD):

- Azure Data Engineers specializing in Data Factory can expect salaries ranging from $85,000 to $140,000 USD annually, depending on experience and location.

Azure Data Factory Training in Hyderabad

Testimonials

Certification

Azure Data Factory Certification Course in Hyderabad

- Job Oriented Modules Covered in Azure Data Factory Certification Training in Hyderabad:

- Exam Preparation: Prepare for the Azure Data Engineer Associate exam with focused training.

- Hands-on Experience: Gain practical skills using Azure Data Factory, ensuring readiness for the Azure Data Factory exam.

- Expert-Led Sessions: Learn from industry experts with a detailed, structured approach.

- Interactive Learning: Engage in industry-relevant projects and real-world scenarios.

- Certification-Ready: Complete the training with the confidence to pass the Azure Data Engineer certification.

- Recognized Certification: Earn a certification recognized by leading organizations.

- Career Growth: Build confidence and expertise, opening doors to Azure Data Engineering career opportunities.

Azure Data Factory certification cost

Skills Developed

After Azure Data Factory Training in Hyderabad

At Brolly Academy, our Azure Data Factory course in Hyderabad will teach you to build data pipelines, manage data integration, and use Azure services like Data Lake and Synapse Analytics. Our certification program helps you gain practical skills for data engineering and cloud data management roles. Get ready for hands-on learning and industry-focused expertise.

- Data Integration & ETL Processes

- Design and develop efficient ETL pipelines using ADF.

- Automate data movement, transformation, and scheduling.

- Data Storage Management

- Integrate ADF with Azure Data Lake, Blob Storage, and SQL databases.

- Design scalable, secure, and optimized storage solutions.

- Real-Time Data Processing

- Process real-time data using Azure Event Hubs and Stream Analytics.

- Monitor and resolve data flow errors to maintain system reliability.

- Data Security & Governance

- Implement RBAC, encryption, and secure data transfer.

- Use Azure Purview for data governance and asset management.

- Advanced Data Transformation

- Perform complex data transformations using ADF Data Flows.

- Design robust and efficient data pipelines for large-scale tasks.

- Performance Optimization

- Optimize pipeline performance with techniques like parallel processing.

- Monitor and manage costs with Azure Monitor.

- Reporting & Analytics Integration

- Connect ADF pipelines to Power BI for real-time reporting and dashboards.

- Process large datasets to drive business decisions.

- Hands-on Exposure

- Work on real-world projects and a comprehensive capstone project, covering data integration, transformation, deployment, and monitoring.

Tools Covered in Azure Data Factory Training

Data Factory UI (Azure Portal)

Purpose: The primary interface for designing and managing data pipelines.

Azure Data Factory Studio

Purpose: A web-based authoring tool used to design and deploy data workflows.

Azure Data Lake Storage

Purpose: A scalable and secure data storage service that integrates with ADF for storing raw data.

Azure Blob Storage

Purpose: A simple and cost-effective cloud storage solution for storing unstructured data.

Azure Synapse Analytics (formerly Azure SQL Data Warehouse)

Purpose: A cloud-based analytics service that combines big data and data warehousing.

Azure SQL Database

Purpose: A fully managed relational database service in Azure for storing structured data.

Azure Databricks

Purpose: A collaborative Apache Spark-based analytics platform for big data processing.

Azure Machine Learning

Purpose: A service that helps build, train, and deploy machine learning models.

Linked Services

Purpose: Defines the connection information to various data sources and compute resources.

Datasets

Purpose: Defines data structures and locations within ADF pipelines.

Data Flows

Purpose: A visual tool for building complex data transformations.

Triggers

Purpose: Defines when a pipeline should run based on a schedule, event, or data arrival.

Monitoring and Management Tools

Purpose: Provides tools for monitoring pipeline performance, errors, and execution status.

Azure Logic Apps

Purpose: Workflow automation tool that integrates with Azure Data Factory for event-driven automation.
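To show how Linked Services, Datasets, and Triggers from the list above relate to each other, here is a small sketch using Python dictionaries shaped like the JSON definitions ADF stores for each of them. All names (`SalesBlobStorage`, `SalesCsvDataset`, `DailyAt7pm`, `DailySalesLoad`), the container, the file name, and the schedule are hypothetical, and the connection string is a placeholder. The key point is that each object refers to the next by name: a dataset points at a linked service, and a trigger points at a pipeline.

```python
# Linked service: connection information for a data store (placeholder connection string).
linked_service = {
    "name": "SalesBlobStorage",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection-string-placeholder>"},
    },
}

# Dataset: the shape and location of data, reached through the linked service.
dataset = {
    "name": "SalesCsvDataset",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "SalesBlobStorage",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",
                "fileName": "sales.csv",
            }
        },
    },
}

# Trigger: runs a pipeline once a day (schedule values are examples only).
trigger = {
    "name": "DailyAt7pm",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2025-01-01T19:00:00",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "DailySalesLoad",
                    "type": "PipelineReference",
                }
            }
        ],
    },
}


def dataset_uses(ds, ls):
    """Check that a dataset's linked service reference matches the given linked service."""
    return ds["properties"]["linkedServiceName"]["referenceName"] == ls["name"]


def triggered_pipelines(trg):
    """List the pipeline names a trigger will start."""
    return [p["pipelineReference"]["referenceName"] for p in trg["properties"]["pipelines"]]


print(dataset_uses(dataset, linked_service))
print(triggered_pipelines(trigger))
```

Keeping connection details in the linked service, rather than in every dataset, means a storage account can be swapped or its credentials rotated in one place.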

Prerequisites

for Azure Data Factory course in Hyderabad

Basic Understanding of Cloud Computing

Familiarity with cloud platforms, particularly Microsoft Azure, will help you quickly grasp Azure Data Factory's cloud-based services.

Basic Knowledge of Databases

Basic understanding of relational and non-relational databases such as SQL Server, MySQL, or NoSQL databases like Cosmos DB.

Familiarity with SQL querying and data modeling concepts.

Understanding of ETL Processes

Basic knowledge of ETL (Extract, Transform, Load) processes is beneficial, as Azure Data Factory is heavily used for managing ETL workflows.

Familiarity with Programming (Optional)

Although Azure Data Factory offers a no-code, drag-and-drop interface, familiarity with languages like Python or SQL can be advantageous for building more complex data transformations and automation. Basic scripting knowledge also helps when working with data flows or setting up triggers.

Basic Understanding of Data Warehousing Concepts

Understanding how data is organized, structured, and stored in data warehouses, such as Azure Synapse Analytics, is helpful in using Azure Data Factory effectively for data integration and movement.

Familiarity with Microsoft Azure

Familiarity with basic Azure services, like Azure Blob Storage, Azure SQL Database, and Azure Data Lake, will ease your learning experience.

Basic Knowledge of Data Integration and Orchestration

Knowledge of how data moves across different platforms and systems, and the general concept of orchestrating workflows, is helpful.

Interest in Data Engineering or Cloud-Based Data Solutions

Since the course is designed to train data engineers, an interest in pursuing a career in cloud data engineering or analytics will help you stay motivated and understand the real-world applications of Azure Data Factory.

Job opportunities for Azure Data Factory

Azure Data Engineer

Role: Design, implement, and manage data pipelines using Azure Data Factory to ingest, transform, and load data into cloud platforms like Azure Data Lake or Azure Synapse Analytics (formerly Azure SQL Data Warehouse).

Salary Range:

USD: $90,000 - $130,000 per year

INR: ₹7,00,000 - ₹10,00,000 per year

Cloud Data Engineer

Role: Build and optimize cloud-based data pipelines for large-scale data integration, migration, and analytics.

Salary Range:

USD: $85,000 - $120,000 per year

INR: ₹6,50,000 - ₹9,00,000 per year

ETL Developer

Role: Develop, implement, and manage ETL pipelines to transform and load data into Azure cloud storage and analytics systems.

Salary Range:

USD: $80,000 - $110,000 per year

INR: ₹6,00,000 - ₹8,50,000 per year

Azure Solutions Architect

Role: Design and implement end-to-end data integration solutions, leveraging Azure services including Data Factory for data orchestration and transformation.

Salary Range:

USD: $120,000 - $160,000 per year

INR: ₹9,50,000 - ₹12,50,000 per year

Big Data Engineer

Role: Work with large datasets and ensure seamless data integration and transformation across various platforms like Azure Data Lake and Azure Databricks.

Salary Range:

USD: $95,000 - $135,000 per year

INR: ₹7,50,000 - ₹11,00,000 per year

Data Integration Specialist

Role: Manage data movement between different systems (on-premises and cloud), creating integration solutions using Azure Data Factory.

Salary Range:

USD: $85,000 - $115,000 per year

INR: ₹6,50,000 - ₹9,50,000 per year

Business Intelligence (BI) Developer

Role: Create and manage data pipelines to support BI solutions, integrating data from different sources into platforms like Power BI.

Salary Range:

USD: $80,000 - $110,000 per year

INR: ₹6,00,000 - ₹8,50,000 per year

Azure Cloud Consultant

Role: Provide consultancy services to organizations for migrating their data infrastructure to Azure, utilizing ADF for automation and integration.

Salary Range:

USD: $100,000 - $140,000 per year

INR: ₹7,50,000 - ₹11,00,000 per year

Data Operations Engineer

Role: Maintain and monitor data pipelines, ensuring high performance, availability, and scalability.

Salary Range:

USD: $85,000 - $115,000 per year

INR: ₹6,50,000 - ₹9,50,000 per year

Machine Learning Engineer

Role: Use Azure Data Factory to integrate machine learning pipelines and ensure seamless data flow for training models and scoring.

Salary Range:

USD: $95,000 - $130,000 per year

INR: ₹7,50,000 - ₹10,50,000 per year

FAQs

What is Azure Data Factory?

Is Azure Data Factory easy to learn?

Is coding required for Azure Data Factory?

No, coding is not always required. ADF provides a visual interface for designing workflows, and you can write custom scripts when you need more complex transformations.

How to create Azure Data Factory?

When to use Azure Data Factory?

What language does Azure Data Factory use?

Azure Data Factory certification cost?

Will I be provided with an Azure Data Factory syllabus at Brolly Academy?

How long is it going to take to complete this Azure Data Factory certification course?

What are the different types of Azure Data Factory learning paths at Brolly Academy?

Will I get a free demo on Azure Data training?

Will I get placement assistance from Brolly Academy on Azure Data training?

Is Azure Data Factory free?

Which companies hire Azure Data Factory course graduates?

Tech Companies

Microsoft

AWS

IBM

Oracle

Financial Services

JPMorgan Chase

Goldman Sachs

Wells Fargo

Citi

HSBC

Healthcare

Pfizer

Johnson & Johnson

Merck

Roche

UnitedHealth Group

Retail

Walmart

Amazon

Target

Best Buy

Home Depot

Telecommunications

Verizon

AT&T

Vodafone

T-Mobile

Comcast