Structure of Artificial Intelligence

Introduction To Structure of Artificial Intelligence

Artificial Intelligence is no longer a technology reserved for science fiction or research labs. It’s woven into the way we live, work, and even think — whether it’s a doctor diagnosing illnesses with AI tools, a car navigating traffic without a driver, or a website predicting what you want to buy next.

As AI quietly becomes the backbone of modern life, understanding how it is structured (how it thinks, learns, and acts) has never been more important. Behind every AI application, no matter how simple or complex, lies a carefully structured framework.

Understanding the structure of Artificial Intelligence is critical for developers, researchers, business leaders, and even policymakers.

Without this understanding, it’s impossible to build AI systems that are scalable, reliable, ethical, and aligned with human goals.

What is the Structure of Artificial Intelligence?

AI is not a monolithic block. It is composed of multiple layers and components working together to create intelligent behavior. At its core, AI structure answers four fundamental questions:

- How does an AI perceive its environment?

- How does it reason and make decisions based on perceptions?

- How does it learn from experiences to improve future performance?

- How does it act on the environment to achieve goals?

Without a structured approach, AI systems would either fail to function effectively or behave unpredictably — both undesirable outcomes, especially in high-stakes industries like healthcare, aviation, or security.

Why Understanding AI Structure Matters

Many people treat AI as a “black box” — something that works, but nobody truly understands how.

This attitude is dangerous. Understanding AI’s internal structure is vital for several reasons:

- Better Design: Engineers can optimize system performance, scalability, and efficiency when they deeply understand how different modules interact.

- Ethical Responsibility: Knowing how AI systems make decisions helps identify and correct bias, ensuring fairness and accountability.

- Security and Control: Structured systems are easier to monitor, debug, and protect from vulnerabilities or malicious exploitation.

- Innovation: New breakthroughs in AI (like explainable AI, federated learning, or neuromorphic computing) often emerge by rethinking or evolving existing structures.

- Career Skills: For students and tech professionals, understanding AI structure is now a fundamental requirement, not optional.

In short, the better you understand the inner workings of AI, the more control, innovation, and responsibility you can exercise over this transformative technology.

Core Concept: The AI System as an Agent

At the heart of AI structure lies the concept of the agent. An agent is an entity that:

- Perceives its environment through sensors

- Decides on actions based on perceptions and internal goals

- Acts upon the environment through actuators

- Learns from feedback and outcomes

Think of an agent as the “brain” behind any AI system — whether it’s a recommendation algorithm suggesting movies, a robot vacuum navigating your living room, or an autonomous car adjusting speed on a highway.

What Makes an AI Agent "Intelligent"?

Not all agents are “intelligent.” A thermostat, for example, senses temperature and turns heating on/off — but it does not reason or learn. To qualify as intelligent, an agent must:

- Have goal-directed behavior

- Be capable of adapting to changes in the environment

- Make decisions based on incomplete or uncertain information

- Learn from experiences to improve future performance

The complexity of the agent’s internal structure defines how intelligent and capable the AI system becomes.

Major Layers in the Structure of Artificial Intelligence

| Layer | Purpose | Examples |
| --- | --- | --- |
| Perception Layer | Gather information from environment | Cameras, Sensors, Data Streams |
| Reasoning Layer | Interpret data, infer context, plan actions | Logical inference, Knowledge bases |
| Learning Layer | Adapt behavior based on outcomes | Machine Learning algorithms |
| Action Layer | Perform actions to affect environment | Robotic actuators, Output systems |

| Component | Description | Real-World Example |
| --- | --- | --- |
| Sensors | Gather raw data | Camera in self-driving car |
| Actuators | Execute decisions physically | Car steering system |
| Processing Unit | Analyze data, make decisions | AI Model predicting next move |
| Memory | Store experiences, data history | Experience replay in RL agents |

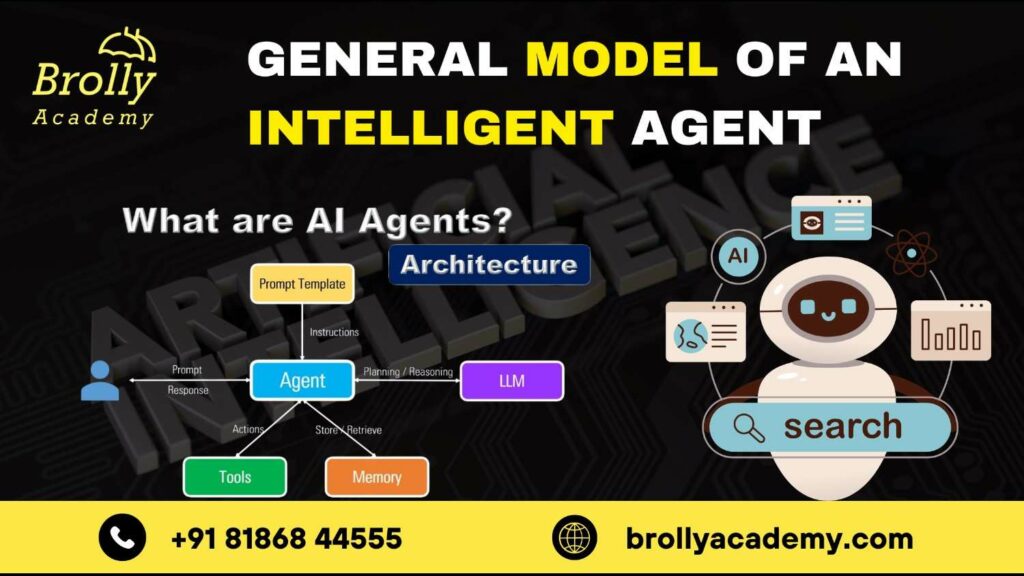

General Model of an Intelligent Agent

Most intelligent agents (whether software bots or physical robots) follow a generalized structure consisting of:

- Sensors: To perceive the environment.

- Percept Sequence: Complete history of what the agent has perceived.

- Agent Function: Maps percept sequences to actions using some AI logic (simple reflex, model-based reasoning, goal-based planning, or utility-maximizing strategies).

- Actuators: To carry out actions that affect the environment.
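The general model above can be sketched in a few lines of Python. This is only an illustration: the `Agent` class, the `door_function` rule, and the percept strings are hypothetical names chosen for the example, not part of any library.

```python
from typing import Callable, List

Percept = str
Action = str


class Agent:
    """Hypothetical minimal agent: keeps the percept sequence and
    delegates the choice of action to an agent function."""

    def __init__(self, agent_function: Callable[[List[Percept]], Action]) -> None:
        self.percepts: List[Percept] = []      # percept sequence (full history)
        self.agent_function = agent_function   # maps percept sequence -> action

    def step(self, percept: Percept) -> Action:
        self.percepts.append(percept)               # a sensor reading arrives
        return self.agent_function(self.percepts)   # action passed to actuators


def door_function(percepts: List[Percept]) -> Action:
    # Illustrative rule: open only after two consecutive "person" percepts,
    # showing that the function may use history, not just the latest percept.
    if len(percepts) >= 2 and percepts[-1] == percepts[-2] == "person":
        return "open"
    return "stay_closed"


agent = Agent(door_function)
actions = [agent.step(p) for p in ["empty", "person", "person", "empty"]]
print(actions)  # ['stay_closed', 'stay_closed', 'open', 'stay_closed']
```

Swapping in a different agent function is what turns this skeleton into a reflex, goal-based, or utility-based agent.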

Deep Types of AI Agents and Their Roles

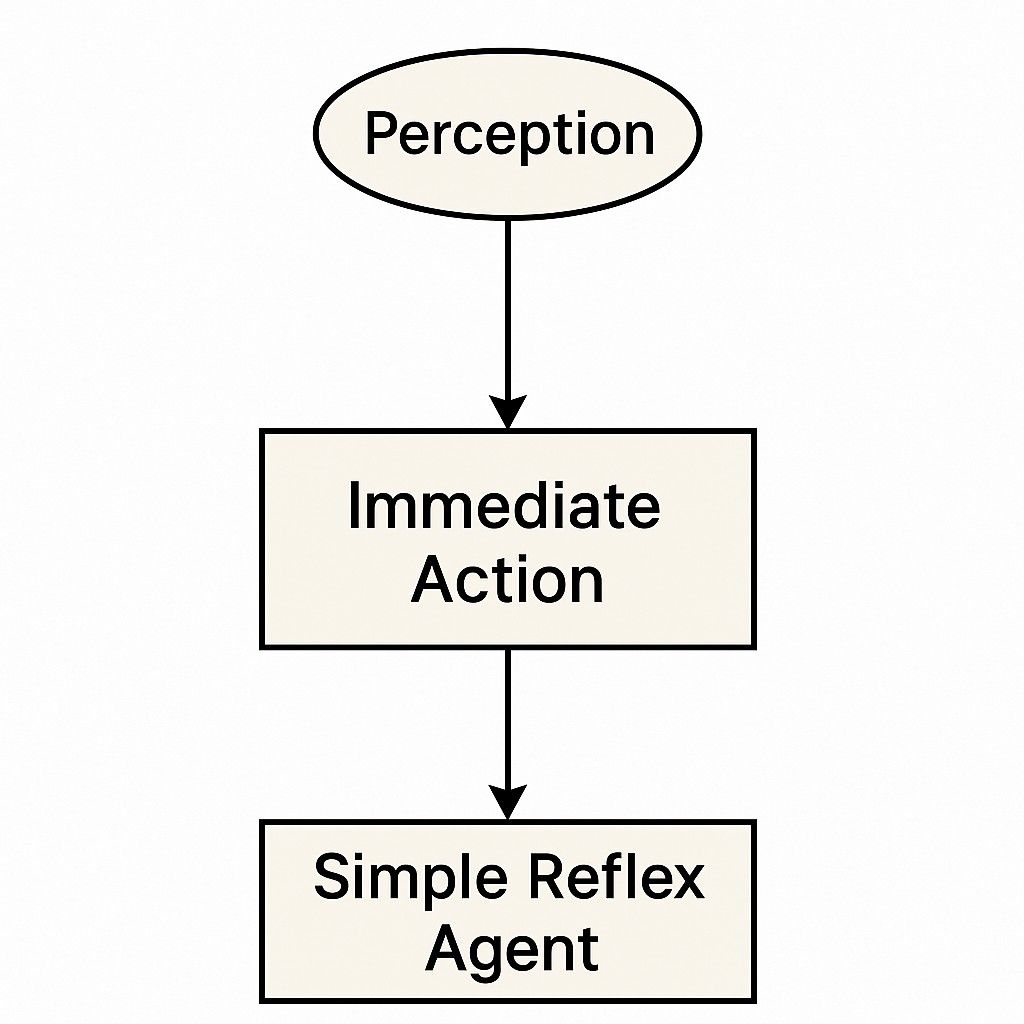

1. Simple Reflex Agents

These agents act only based on current perception, without considering history or predicting the future.

- They follow if-then rules: “If the room is dark, turn on the light.”

- No internal memory or learning.

- Very fast, but very limited intelligence.

Example : Automatic sliding doors that open when someone approaches.
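A simple reflex agent can be written as a list of condition-action rules. The sketch below assumes a percept with a single `light_level` field between 0 and 1 and a darkness threshold of 0.3; both are made up for illustration.

```python
# Condition-action rules: each rule inspects only the current percept.
LIGHT_RULES = [
    (lambda p: p["light_level"] < 0.3, "turn_light_on"),    # room is dark
    (lambda p: p["light_level"] >= 0.3, "turn_light_off"),  # room is bright
]


def simple_reflex_agent(percept: dict) -> str:
    """Return the action of the first rule whose condition matches."""
    for condition, action in LIGHT_RULES:
        if condition(percept):
            return action
    return "do_nothing"  # fallback when no rule applies


print(simple_reflex_agent({"light_level": 0.1}))  # turn_light_on
print(simple_reflex_agent({"light_level": 0.8}))  # turn_light_off
```

Note that nothing is stored between calls: the same percept always produces the same action.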

2. Model-Based Reflex Agents

Model-based agents maintain an internal model of the world, meaning they understand how the environment works beyond the immediate perception.

- They remember past perceptions.

- They can handle partially observable environments.

- They predict the outcome of their actions.

Example : A robot vacuum that maps your home and remembers obstacles even if it cannot see them currently.

| Feature | Simple Reflex Agent | Model-Based Reflex Agent |
| --- | --- | --- |
| Memory | No | Yes |
| Prediction Ability | No | Yes |
| Example | Basic thermostat | Robot vacuum (Roomba) |
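To make the difference concrete, here is a minimal sketch of a model-based reflex agent for a grid world. The `VacuumAgent` class, the bump sensor, and the grid coordinates are assumptions for this example and do not describe how any commercial vacuum works.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]


class VacuumAgent:
    """Hypothetical vacuum that remembers obstacles it has bumped into."""

    def __init__(self) -> None:
        self.known_obstacles: Set[Cell] = set()  # internal model of the world

    def update_model(self, position: Cell, heading: Cell, bumped: bool) -> None:
        # If the bump sensor fired, remember the cell we tried to enter.
        if bumped:
            blocked = (position[0] + heading[0], position[1] + heading[1])
            self.known_obstacles.add(blocked)

    def choose_move(self, position: Cell) -> Cell:
        # Try headings in a fixed order and skip cells remembered as blocked,
        # even though the agent cannot currently sense them.
        for heading in [(1, 0), (0, 1), (-1, 0), (0, -1)]:
            target = (position[0] + heading[0], position[1] + heading[1])
            if target not in self.known_obstacles:
                return heading
        return (0, 0)  # boxed in: stay put


vacuum = VacuumAgent()
vacuum.update_model(position=(0, 0), heading=(1, 0), bumped=True)
next_heading = vacuum.choose_move((0, 0))
print(next_heading)  # (0, 1): the remembered obstacle at (1, 0) is avoided
```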

3. Goal-Based Agents

Goal-based agents do not just react — they plan.

- They have explicit goals (e.g., “deliver pizza to Room 21”).

- They use search and decision-making algorithms to find the best actions.

- They evaluate different options before choosing the next move.

Example : A self-driving taxi calculating the fastest route to the passenger’s destination.
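Planning toward an explicit goal can be illustrated with breadth-first search, one of the simplest search algorithms. The building map below is invented for the example, and the search finds the route with the fewest hops, which is a stand-in for "fastest" when all hops cost the same.

```python
from collections import deque
from typing import Dict, List, Optional


def plan_route(graph: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search: returns a path with the fewest hops, or None."""
    frontier = deque([[start]])   # paths waiting to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append(path + [neighbor])
    return None  # goal is unreachable


# Hypothetical building layout for the "deliver pizza to Room 21" goal.
building = {
    "Lobby": ["Hall A", "Hall B"],
    "Hall A": ["Room 20"],
    "Hall B": ["Room 21"],
    "Room 20": ["Room 21"],
    "Room 21": [],
}
route = plan_route(building, "Lobby", "Room 21")
print(route)  # ['Lobby', 'Hall B', 'Room 21']
```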

4. Utility-Based Agents

Utility-based agents are even smarter — they aim not just to achieve goals, but to achieve them optimally.

- They consider multiple factors (speed, safety, comfort).

- They use utility functions to rate different outcomes.

- They seek the highest utility rather than just reaching any goal.

Example : An e-commerce AI recommending products that maximize both customer satisfaction and company profit.
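A utility function can be as simple as a weighted sum. In the sketch below the weights and the per-route scores for speed, safety, and comfort are assumed values on a 0 to 1 scale, picked only to show how the highest-utility option is selected.

```python
# Assumed importance of each factor (weights sum to 1).
WEIGHTS = {"speed": 0.3, "safety": 0.5, "comfort": 0.2}


def utility(outcome: dict) -> float:
    """Weighted sum of the factor scores for one candidate outcome."""
    return sum(WEIGHTS[factor] * outcome[factor] for factor in WEIGHTS)


# Hypothetical candidate routes with made-up scores.
options = {
    "highway": {"speed": 0.9, "safety": 0.6, "comfort": 0.7},
    "city":    {"speed": 0.5, "safety": 0.8, "comfort": 0.5},
    "scenic":  {"speed": 0.3, "safety": 0.9, "comfort": 0.9},
}

best = max(options, key=lambda name: utility(options[name]))
print(best)  # scenic (utility about 0.72, versus 0.71 and 0.65)
```

All three routes reach the goal; the utility function is what lets the agent prefer one of them.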

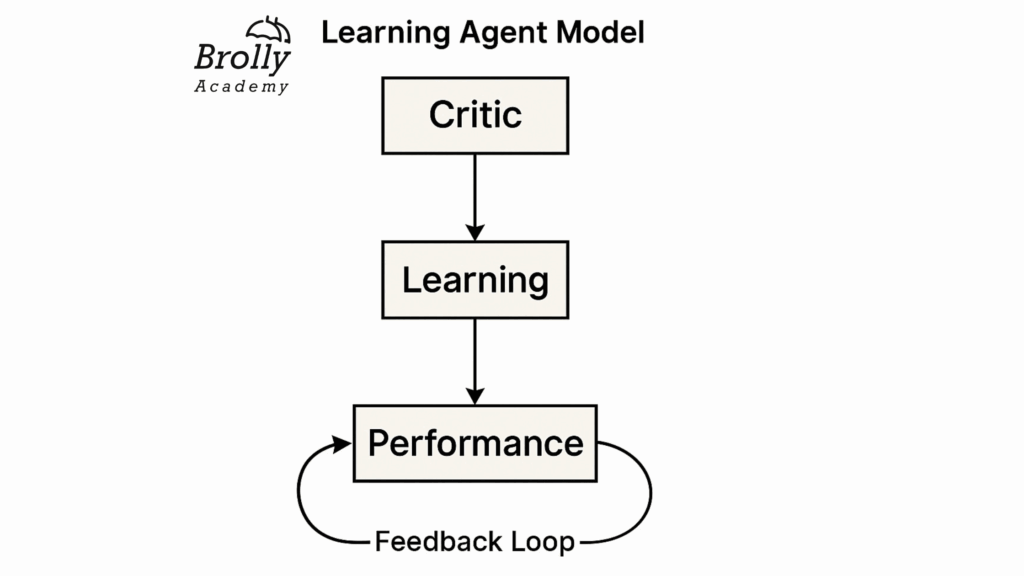

5. Learning Agents

Learning agents improve their performance over time based on feedback.

- They have a performance element (core decision-making) and a learning element (improvement engine).

- They adjust strategies based on trial and error.

- They can handle new, unknown environments better than hard-coded agents.

Example : An AI personal assistant that becomes better at predicting your preferences the more you use it.
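The split between a performance element and a learning element can be sketched as two methods on one class. The `LearningAssistant` name, the reward values, and the learning rate are assumptions for illustration.

```python
class LearningAssistant:
    """Hypothetical assistant that learns which content a user prefers."""

    def __init__(self, options, learning_rate=0.5):
        self.scores = {option: 0.0 for option in options}  # learned preferences
        self.learning_rate = learning_rate

    def suggest(self):
        # Performance element: pick the option with the highest learned score.
        return max(self.scores, key=self.scores.get)

    def learn(self, option, reward):
        # Learning element: move the score toward the observed reward.
        old = self.scores[option]
        self.scores[option] = old + self.learning_rate * (reward - old)


assistant = LearningAssistant(["jazz", "rock", "podcast"])
first = assistant.suggest()       # no feedback yet: first option wins the tie
assistant.learn("jazz", -1.0)     # user skipped the suggestion
assistant.learn("podcast", 1.0)   # user listened to the end
assistant.learn("podcast", 1.0)
second = assistant.suggest()
print(first, second)  # jazz podcast
```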

Branches of AI and How They Fit into the Structure

1. Machine Learning (ML)

Where it fits:

- Learning Layer

- Reasoning Layer (decision-making)

ML is about enabling machines to learn patterns from data rather than being explicitly programmed. Subfields:

- Supervised Learning (predicting outcomes)

- Unsupervised Learning (finding hidden patterns)

- Reinforcement Learning (learning from rewards)

Example : Spam detection in emails.
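As a rough illustration of supervised learning, the sketch below trains a tiny Naive Bayes classifier on four hand-written messages. The training data is invented and far too small for real use; the point is only that the rules are learned from labeled examples rather than written by hand.

```python
import math
from collections import Counter


def train(messages):
    """messages: list of (text, label) pairs, label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab


def predict(model, text):
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        score = math.log(label_counts[label] / total)       # log prior
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Laplace smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training = [
    ("win free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train(training)
print(predict(model, "free prize"))      # spam
print(predict(model, "monday meeting"))  # ham
```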

2. Natural Language Processing (NLP)

Where it fits:

- Perception Layer (language input)

- Reasoning Layer (understanding meaning)

NLP allows machines to understand, interpret, and generate human language.

Example : Chatbots answering customer queries.

3. Robotics

Where it fits:

- Perception (through sensors)

- Action (through actuators)

Robotics combines AI with mechanical engineering to build physical agents that perceive and act in the physical world.

Example : Warehouse robots sorting packages.

4. Computer Vision

Where it fits:

- Perception Layer

Computer vision enables machines to see and interpret visual information from the world.

Example : Face recognition in smartphones.

5. Expert Systems

Where it fits:

- Reasoning Layer

Expert systems use pre-defined rules and knowledge bases to make decisions like human experts.

Example : Medical diagnosis support systems.
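A rule-based expert system can be approximated with forward chaining: keep applying rules until no new facts appear. The two rules below are invented purely to show the mechanism and carry no medical validity.

```python
def forward_chain(facts, rules):
    """Apply (conditions -> conclusion) rules until nothing new is derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in facts and set(conditions) <= facts:
                facts.add(conclusion)   # rule fires: add the derived fact
                changed = True
    return facts


# Hypothetical knowledge base, for illustration only.
TRIAGE_RULES = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "refer_to_doctor"),
]

derived = forward_chain({"fever", "cough", "short_of_breath"}, TRIAGE_RULES)
print(sorted(derived))
# ['cough', 'fever', 'possible_flu', 'refer_to_doctor', 'short_of_breath']
```

The second rule only fires because the first one added `possible_flu`, which is the chaining behavior that gives the technique its name.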

| Branch of AI | Core Function | Example |
| --- | --- | --- |
| Machine Learning | Learning from Data | Product Recommendations |
| NLP | Language Understanding | Virtual Assistants |
| Robotics | Physical Interaction | Delivery Drones |
| Computer Vision | Visual Perception | Autonomous Vehicles |
| Expert Systems | Decision-Making Rules | Diagnostic Tools |

Core Functional Areas of AI Structure

Regardless of the specific branch, agent type, or application, every Artificial Intelligence system ultimately revolves around four core functional areas.

These fundamental pillars — sensing, thinking, acting, and adapting — define what makes a system truly intelligent, rather than just reactive or automated.

1. Sensing

Sensing is the very first step of intelligence. It enables AI systems to gather raw data from the environment, which forms the foundation for all higher-level operations such as reasoning and learning.

Role : To perceive the external environment accurately through various types of sensors or data streams.

How It Works:

- Visual Sensing: Cameras and computer vision systems detect images, faces, movements, or objects.

- Auditory Sensing: Microphones capture sound for tasks like voice recognition or environmental monitoring.

- Textual Sensing: Crawlers or APIs extract text-based information from online sources, databases, or communication platforms.

- Physical Sensing: Sensors like LiDAR, radar, and accelerometers detect distances, speeds, and positions.

Example : Drone navigation systems employ camera sensors and LiDAR to detect obstacles such as trees, poles, and buildings, allowing real-time adjustments to their flight paths.

✅ Key Insight : Without robust and accurate sensing, AI cannot build a reliable model of the world — making perception failures one of the biggest risks in critical AI applications.

2. Thinking (Reasoning)

Once sensory information is collected and processed, the next functional area involves thinking or reasoning.

This layer transforms raw perceptions into meaningful inferences, strategies, and plans.

Role : To process input data, infer conclusions, predict future states, and choose actions that best achieve predefined goals.

How It Works:

- Logical Inference: Deductive reasoning based on known rules and facts.

- Probabilistic Reasoning: Making decisions under uncertainty using models like Bayesian networks.

- Pattern Detection: Recognizing trends, anomalies, or associations across datasets.

- Strategic Planning: Building sequences of actions that maximize goal achievement over time.

Example : Financial AI systems analyze stock market trends by detecting hidden patterns in historical data, forecasting future market movements, and advising investors on optimal portfolio strategies.

✅ Key Insight : Intelligent thinking enables AI not just to react to current conditions but to anticipate future outcomes and optimize decisions proactively.
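Probabilistic reasoning often comes down to Bayes' rule. The short sketch below updates the belief that an event is real after an alert fires; the prior, detection rate, and false-alarm rate are assumed numbers chosen for the arithmetic.

```python
def posterior(prior: float, likelihood: float, false_positive_rate: float) -> float:
    """P(event | alert) via Bayes' rule.

    prior               = P(event)
    likelihood          = P(alert | event)
    false_positive_rate = P(alert | no event)
    """
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence


# Assumed numbers: the event is rare (1%), the detector catches 90% of real
# events and raises a false alarm 5% of the time.
p = posterior(prior=0.01, likelihood=0.9, false_positive_rate=0.05)
print(round(p, 3))  # 0.154
```

Even with a fairly accurate detector, a single alert leaves the event unlikely because it was rare to begin with. This is the kind of uncertainty a reasoning layer has to carry forward into its decisions.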

3. Acting

Intelligence is meaningless if it cannot manifest into real-world outcomes. The acting function ensures that the AI agent intervenes in the environment, either physically or digitally, to execute decisions.

Role : To translate processed information and reasoning outputs into tangible actions that impact the environment or users.

How It Works:

- Physical Actions: Movement of robots, mechanical assembly, navigation by drones or autonomous vehicles.

- Digital Actions: Sending emails, adjusting thermostat settings, executing financial transactions, updating databases.

- Communication Actions: Generating natural language responses, visual outputs, or other forms of interaction with users.

Example : Industrial robotic arms equipped with AI pick up parts from conveyor belts, align them precisely, and assemble them into complex structures like car engines or circuit boards with high efficiency and precision.

✅ Key Insight : Without effective action, even the most advanced AI reasoning is wasted; success in AI is ultimately measured by meaningful, reliable outputs in the world.

4. Adapting (Learning)

Perhaps the most revolutionary aspect of AI compared to traditional automation is its ability to adapt and improve over time. The learning function allows AI systems to refine behaviors, strategies, and models based on past experiences and new incoming data.

Role : To update internal models, correct mistakes, refine performance, and handle novel situations without human reprogramming.

How It Works:

- Supervised Learning: Training models on labeled datasets to perform specific predictions.

- Reinforcement Learning: Learning optimal behaviors through trial-and-error and reward-based feedback.

- Unsupervised Learning: Discovering hidden structures in unlabeled data, like clustering users into behavior-based segments.

- Self-Supervised Learning: Using parts of data to predict other parts without explicit human labels.

Example : Speech recognition systems, such as those used in mobile assistants, continuously learn from user interactions. Over time, they adapt to individual accents, speech patterns, and vocabulary preferences, delivering more accurate results personalized to each user.

✅ Key Insight : Learning and adaptation distinguish modern AI from static systems, enabling resilience, personalization, and innovation as environments and expectations evolve.
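Unsupervised learning, such as clustering users into behavior-based segments, can be illustrated with a one-dimensional k-means. The session lengths and the starting centers below are made-up values, and real systems would use a library implementation on many more features.

```python
def kmeans_1d(values, centers, iterations=10):
    """Tiny 1-D k-means: assign each value to its nearest center, then
    move each center to the mean of its assigned values."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # An empty cluster keeps its previous center.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


# Hypothetical minutes per session for six users; no labels are provided.
session_minutes = [1, 2, 3, 20, 22, 24]
centers, clusters = kmeans_1d(session_minutes, centers=[0, 10])
print(centers)   # [2.0, 22.0]
print(clusters)  # [[1, 2, 3], [20, 22, 24]]
```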

Architecture of AI Systems

- While agents and branches provide the fundamental building blocks of Artificial Intelligence, it is the architecture that defines how these components are organized and integrated into cohesive, functional systems.

- Think of AI architecture as the blueprint of an intelligent brain — determining how information flows, how decisions are made, how learning occurs, and how actions are taken in the real world.

- Without well-designed architecture, even the most powerful AI algorithms would operate chaotically, failing to produce coherent or reliable results.

- A strong architectural framework ensures that sensing, interpreting, reasoning, adapting, and acting happen seamlessly, making the AI agent capable of intelligent and autonomous behavior.

Key Layers in a Standard AI Architecture

Regardless of the complexity or domain, most AI systems are structured into several interconnected layers, each handling a specific aspect of the intelligent process. Here’s a breakdown of the key layers that form the backbone of most modern AI architectures:

1. Perception Layer

The perception layer serves as the AI system’s eyes and ears, responsible for sensing the environment and collecting raw data that will feed into higher layers.

Role : Capture diverse inputs from the environment to build an understanding of the external world.

Typical Inputs:

- Visual data (camera images, video feeds)

- Audio signals (speech, environmental sounds)

- Text data (written content, social media feeds)

- Sensor readings (temperature, pressure, proximity)

Example : A drone’s onboard cameras continuously feed live images to its central processing unit, enabling it to “see” obstacles and navigation markers in real-time.

✅ Key Insight : The richness and accuracy of an AI’s perception determine the quality of all subsequent reasoning and actions.

2. Processing Layer (Interpretation)

Once raw data is collected, the processing layer interprets and organizes this information, transforming sensory inputs into meaningful features and representations.

Role : Analyze and structure incoming data into formats usable by decision-making modules.

Typical Processes:

- Feature extraction (identifying edges, shapes, objects)

- Semantic understanding (natural language parsing, sentiment detection)

- Scene analysis (understanding spatial layouts, object relationships)

Example : A self-driving car’s processing system identifies a stop sign from camera footage by detecting its octagonal shape, red color, and placement alongside the road.

✅ Key Insight : The efficiency of the processing layer directly influences how quickly and accurately the AI can reason about its environment.

3. Reasoning and Decision Layer

The reasoning and decision layer is the “thinking” part of the AI, where processed information is evaluated, logical conclusions are drawn, and strategic plans are formulated.

Role : Generate informed decisions based on interpreted data, goals, and constraints.

Typical Processes:

- Logical inference (deductive reasoning, rule-based systems)

- Probabilistic reasoning (Bayesian networks, uncertainty management)

- Planning and strategy (multi-step goal-oriented action planning)

Example : Upon recognizing a stop sign ahead, a self-driving vehicle’s decision layer calculates braking distance, assesses surrounding traffic, and plans the best approach to stop safely without abruptness.

✅ Key Insight : Effective reasoning ensures that AI actions are not random or purely reactive but are strategic, purpose-driven, and contextually aware.

4. Learning Layer (Adaptive Intelligence)

Unlike traditional software, AI systems have the unique ability to improve over time.

The learning layer enables AI agents to adapt based on new data, experiences, successes, and failures.

Role : Enhance decision-making and performance by extracting lessons from interactions with the environment.

Typical Processes:

- Supervised learning (training from labeled examples)

- Reinforcement learning (learning optimal actions through trial and reward)

- Self-supervised and unsupervised learning (discovering patterns without explicit labels)

Example : A robotic vacuum cleaner learns the layout of your home after several cleaning sessions, optimizing its path to clean faster and avoid obstacles more efficiently with each run.

✅ Key Insight : The learning layer transforms AI from static, pre-programmed systems into dynamic, evolving entities capable of continuous improvement.

5. Action Layer (Execution)

The final output of the AI system is manifested through the action layer, which translates decisions into real-world effects.

Role : Implement decisions physically or digitally to achieve system goals.

Typical Outputs:

- Mechanical movements (robotic arms, drone navigation)

- System updates (database entries, alerts, emails)

- Communication (text responses, voice outputs)

Example : On an automotive assembly line, a robotic arm equipped with AI guidance precisely picks up car parts and assembles them with speed and accuracy, dynamically adjusting to variations in component size or placement.

✅ Key Insight : The effectiveness of the action layer determines the tangible success of an AI system: no matter how brilliant the reasoning, if actions fail, the system fails.
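The layers can be pictured as functions chained into a pipeline. The sketch below follows the stop-sign example through perception, processing, decision, and action; the learning layer is left out for brevity. The field names, the 3 m/s² deceleration, and the constant-deceleration formula d = v² / (2a) are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    braking_distance_m: float


def perceive(raw_frame: dict) -> dict:
    # Perception layer: collect the raw readings we care about.
    return {k: raw_frame[k] for k in ("shape", "color", "speed_mps")}


def interpret(percept: dict) -> dict:
    # Processing layer: turn raw features into a meaningful label.
    is_stop = percept["shape"] == "octagon" and percept["color"] == "red"
    return {"stop_sign": is_stop, "speed_mps": percept["speed_mps"]}


def decide(features: dict, decel_mps2: float = 3.0) -> Decision:
    # Reasoning layer: braking distance under constant deceleration.
    if features["stop_sign"]:
        v = features["speed_mps"]
        return Decision("brake", v * v / (2 * decel_mps2))
    return Decision("continue", 0.0)


def act(decision: Decision) -> str:
    # Action layer: here we only describe the command that would be sent.
    if decision.action == "brake":
        return f"brake (needs {decision.braking_distance_m:.1f} m)"
    return decision.action


def run_pipeline(raw_frame: dict) -> str:
    return act(decide(interpret(perceive(raw_frame))))


print(run_pipeline({"shape": "octagon", "color": "red", "speed_mps": 12.0}))
# brake (needs 24.0 m)
```

Because each layer only depends on the output of the one before it, any single function can be replaced without touching the others.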

Typical AI Architectures in Use Today

| AI Architecture Type | Best Use Cases | Example |
| --- | --- | --- |
| Reactive Architectures | Reflex-like, real-time decision making | Collision avoidance systems |
| Deliberative Architectures | Heavy planning and goal-driven tasks | Chess-playing AI like AlphaZero |
| Hybrid Architectures | Combining fast reflexes with deeper reasoning | Autonomous vehicles (e.g., Tesla Autopilot) |
| Layered Architectures | Scalable, modular systems handling complex workflows | Virtual personal assistants like Siri and Alexa |

Reactive Architectures

Reactive AI systems operate purely based on the current perception of their environment, without maintaining any internal memory or history of past states. They are designed to respond immediately to stimuli, making them ideal for applications where speed is critical and deep reasoning is unnecessary.

Key Characteristics:

- No model of the world

- Immediate action-response cycles

- Fast, lightweight, and often deterministic behavior

Example : Collision avoidance systems in autonomous vehicles or robotic arms react instantly to proximity sensor readings, preventing accidents without engaging in complex reasoning processes.

Deliberative Architectures

Deliberative AI Architectures emphasize planning, reasoning, and decision-making based on an internal model of the environment. These systems consider multiple possible future states, evaluate potential outcomes, and choose the action sequence that best achieves a defined goal.

Key Characteristics:

- Detailed internal world models

- Explicit goal formulation and planning

- Longer decision times but better strategic foresight

Example : DeepMind’s AlphaZero, a revolutionary chess and Go playing AI, uses deliberative planning techniques like Monte Carlo Tree Search (MCTS) to simulate many possible game states before deciding on the best move.
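MCTS itself is too long to show here, so the sketch below swaps in plain exhaustive game-tree search (minimax with memoization) on a toy game to illustrate the same deliberative idea: look ahead through future states before moving. The game is an assumption for the example: players alternately take 1 to 3 stones, and whoever takes the last stone wins.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """True if the player to move can force a win with `stones` left."""
    # A position is winning if some move leaves the opponent in a losing one.
    return any(not can_win(stones - take) for take in (1, 2, 3) if take <= stones)


def best_move(stones: int) -> int:
    """Pick a move by simulating every continuation (expects stones >= 1)."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return 1  # every move loses against perfect play; take one stone


print(best_move(5))  # 1  (leaves 4, a losing position for the opponent)
print(best_move(7))  # 3  (leaves 4 again)
print(can_win(4))    # False
```

Exhaustive search only works because this game is tiny. For chess or Go the tree is far too large, which is why sampling-based methods such as MCTS are used instead.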

Hybrid Architectures

Most real-world environments are too complex for pure reactive or pure deliberative systems to handle effectively.

Hybrid AI architectures blend the strengths of both reactive and deliberative approaches, allowing systems to react quickly to immediate threats while still planning strategically for future objectives.

Key Characteristics:

- Layered reactive modules for real-time responsiveness

- Strategic planners operating on broader time scales

- Balance between speed and intelligence

Example : Autonomous vehicles like Tesla’s Autopilot system use hybrid architectures:

- Reactive modules instantly apply emergency braking if a pedestrian is detected.

- Deliberative modules plan routes and adjust speeds for energy efficiency and traffic flow optimization.
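The division of labor in a hybrid design can be sketched as a reflex that overrides a slower planner. The function names, percept fields, and the 10 m braking threshold are hypothetical and are not taken from any production system.

```python
from typing import Optional


def reactive_layer(percept: dict) -> Optional[str]:
    # Fast reflex: fires only on an immediate hazard (assumed 10 m threshold).
    if percept.get("pedestrian_distance_m", float("inf")) < 10.0:
        return "emergency_brake"
    return None


def deliberative_layer(percept: dict) -> str:
    # Slower planner: steer the speed toward the planned target speed.
    if percept["speed_kph"] > percept["target_speed_kph"]:
        return "ease_off"
    if percept["speed_kph"] < percept["target_speed_kph"]:
        return "accelerate"
    return "hold_speed"


def hybrid_controller(percept: dict) -> str:
    # The reflex always wins; the planner runs only when no hazard is present.
    reflex = reactive_layer(percept)
    return reflex if reflex is not None else deliberative_layer(percept)


print(hybrid_controller({"pedestrian_distance_m": 5.0, "speed_kph": 50, "target_speed_kph": 60}))
# emergency_brake
print(hybrid_controller({"speed_kph": 50, "target_speed_kph": 60}))
# accelerate
```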

Layered Architectures

As AI systems grow more complex, layered architectures offer a modular approach to structure them.

In layered designs, different functional units are stacked, each handling a distinct aspect of perception, reasoning, learning, or action.

Key Characteristics:

- Separation of concerns: different layers for different tasks

- Modular upgrades and debugging

- Easier scaling and adaptability

Example : Virtual assistants like Siri, Google Assistant, and Alexa rely on layered architectures:

- Bottom layers handle raw speech input through acoustic modeling.

- Middle layers interpret linguistic meaning using NLP.

- Upper layers make decisions based on context and generate spoken responses.

Each layer can be improved independently without disrupting the entire system.

AI in Real-World Applications

1. Self-Driving Cars

Self-driving vehicles represent one of the most complex real-world applications of AI, relying on a highly integrated structure that mirrors human perception, reasoning, and action.

Structure Breakdown:

- Perception: Self-driving cars utilize a combination of cameras, LiDAR sensors, GPS data, radar, and ultrasonic sensors to continuously perceive their surroundings, detecting other vehicles, pedestrians, traffic signals, and road conditions.

- Reasoning: AI decision engines predict the movements of other road users, assess risks, and plan optimal routes and maneuvers in real time.

- Learning: Deep learning models continuously refine driving behavior by learning from millions of miles of real-world and simulated driving experience, adapting to new scenarios like construction zones or unusual traffic patterns.

- Acting: Physical actuators control the vehicle’s braking, acceleration, and steering systems based on AI-generated commands.

Example : Tesla’s Full Self-Driving (FSD) system, which requires active human supervision, is publicly described as relying on camera-based computer vision and deep neural networks to navigate roads. Whatever its internal details, it showcases the necessity of seamless perception-action loops and real-time decision-making in safety-critical environments.

2. Chatbots and Virtual Assistants

Conversational AI systems, like chatbots and virtual assistants, are structured to simulate human-like interactions across text, voice, and sometimes even video.

Structure Breakdown:

- Perception: Inputs are received in the form of typed text, spoken language, or even emotional tone cues.

- Processing: Natural Language Processing (NLP) engines parse the input to understand syntax, semantics, and user intent.

- Reasoning: Based on the extracted intent, the AI system maps queries to predefined responses, dynamic content generation models, or database retrievals.

- Learning: Chatbots and assistants improve their conversational abilities over time by analyzing user interactions, adjusting dialogue flows, and refining understanding of colloquial expressions and idioms.

Example : Systems like ChatGPT, Google Assistant, and Amazon Alexa continuously refine their suggestions and interactions based on prior user behaviors, delivering increasingly personalized assistance in daily tasks, from setting reminders to providing weather updates.
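The perception, processing, and reasoning steps of a very basic chatbot can be sketched with keyword matching. Modern assistants use learned language models rather than keyword sets; the intents, keywords, and replies below are invented to show the flow from input text to a mapped response.

```python
# Hypothetical intents and trigger keywords.
INTENT_KEYWORDS = {
    "set_reminder": {"remind", "reminder"},
    "get_weather": {"weather", "forecast", "rain"},
}

RESPONSES = {
    "set_reminder": "Okay, what should I remind you about?",
    "get_weather": "Let me check the forecast for you.",
    "fallback": "Sorry, I did not understand that.",
}


def detect_intent(utterance: str) -> str:
    # Processing: normalize the text and split it into tokens.
    tokens = set(utterance.lower().replace("?", "").split())
    # Reasoning: pick the first intent that shares a keyword with the input.
    for intent, keywords in INTENT_KEYWORDS.items():
        if tokens & keywords:
            return intent
    return "fallback"


def reply(utterance: str) -> str:
    return RESPONSES[detect_intent(utterance)]


print(reply("Will it rain tomorrow?"))  # Let me check the forecast for you.
print(reply("Tell me a joke"))          # Sorry, I did not understand that.
```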

3. Recommendation Systems

Recommendation engines are among the most commercially successful applications of AI structures, designed to personalize user experiences across e-commerce, entertainment, news, and social media platforms.

Structure Breakdown:

- Perception: AI systems gather vast amounts of user data, including browsing history, click behavior, purchase patterns, and demographic profiles.

- Reasoning: Collaborative filtering algorithms predict user preferences based on similarities with other users, while content-based filtering recommends items similar to previously interacted content.

- Acting: The system delivers highly targeted product, content, or service suggestions tailored to the individual user’s tastes.

Example : Netflix’s recommendation engine analyzes what you’ve watched, how you rated past content, and what similar users enjoyed to offer personalized suggestions — increasing engagement and customer retention through optimized AI structures.
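User-based collaborative filtering can be sketched with cosine similarity over shared ratings. The users, titles, and ratings are invented, and unseen items are ranked by a plain similarity-weighted sum, a simplification that is not meant to describe any production recommender.

```python
import math

# Hypothetical 1-5 star ratings.
ratings = {
    "ana":  {"Drama A": 5, "Comedy B": 1, "Thriller C": 4},
    "ben":  {"Drama A": 4, "Comedy B": 2, "Thriller C": 5, "Sci-Fi D": 5},
    "cara": {"Drama A": 1, "Comedy B": 5, "Romance E": 4},
}


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity computed over the items both users rated."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in shared))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in shared))
    return dot / (norm_u * norm_v)


def recommend(user: str, ratings: dict) -> list:
    """Rank items the user has not rated by similarity-weighted ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)


print(recommend("ana", ratings))  # ['Sci-Fi D', 'Romance E']
```

Ben's tastes are closest to Ana's, so the title only Ben has rated ranks above the one only Cara has rated.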

4. Industrial Robots

In modern factories and warehouses, AI-driven industrial robots have transformed manufacturing, logistics, and material handling processes.

Structure Breakdown:

- Sensors: Robots are equipped with vision systems, pressure sensors, proximity detectors, and environmental scanners to perceive their workspaces accurately.

- Reasoning: AI algorithms process sensory data to identify objects, plan efficient movements, and avoid collisions or errors.

- Acting: Robots perform tasks such as picking, placing, welding, and assembling parts, often collaborating safely with human workers (cobots).

Example : Amazon warehouse robots leverage computer vision, machine learning, and dynamic path planning algorithms to sort, transport, and manage inventory at incredible speed and accuracy, revolutionizing fulfillment center operations.

Emerging Structures in Artificial Intelligence

The field of Artificial Intelligence is in a state of constant evolution. As researchers push the boundaries of what AI systems can achieve, traditional monolithic or centralized architectures are being replaced by more dynamic, adaptive, and collaborative designs. New emerging structures are solving the limitations of earlier models, making AI more powerful, resilient, and aligned with real-world complexity. Here are some of the most significant emerging AI structures shaping the future:

1. Multi-Agent Systems (MAS)

Instead of designing AI as a single monolithic entity, Multi-Agent Systems (MAS) focus on creating multiple intelligent agents that interact within a shared environment. Each agent has its own perceptions, knowledge base, goals, and abilities, but they are structured to collaborate, negotiate, or even compete to achieve broader system objectives.

Key Features:

- Knowledge Sharing: Agents can exchange information to create a richer understanding of their environment.

- Distributed Decision-Making: No single agent controls the system; decisions emerge from agent interactions.

- Coordination and Negotiation: Agents work together (or against each other) to complete tasks or allocate resources efficiently.

- Scalability and Resilience: The system can scale better and adapt if individual agents fail or leave.

Applications:

- Swarms of autonomous drones conducting search-and-rescue operations without central control.

- Stock trading bots operating in competitive financial markets, adjusting strategies dynamically.

✅ Key Points:

- Collaboration between multiple AI agents

- Distributed intelligence without central authority

- Greater system robustness and adaptability

- Suitable for decentralized, real-world complex tasks
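Distributed decision-making without a central controller can be illustrated with a simple consensus protocol: each agent repeatedly averages its estimate with those of its direct neighbors. The drone names, the communication links, and the starting estimates are assumptions for the sketch.

```python
def consensus_round(estimates: dict, neighbors: dict) -> dict:
    """Each agent replaces its estimate with the mean of its own value
    and the values of the agents it can talk to."""
    updated = {}
    for agent, value in estimates.items():
        group = [value] + [estimates[n] for n in neighbors[agent]]
        updated[agent] = sum(group) / len(group)
    return updated


# Hypothetical line of three drones: a <-> b <-> c (a and c never talk directly).
neighbors = {
    "drone_a": ["drone_b"],
    "drone_b": ["drone_a", "drone_c"],
    "drone_c": ["drone_b"],
}
# Each drone starts with a different estimate of the distance to a target (m).
estimates = {"drone_a": 10.0, "drone_b": 20.0, "drone_c": 60.0}

for _ in range(50):
    estimates = consensus_round(estimates, neighbors)

spread = max(estimates.values()) - min(estimates.values())
print(spread < 1e-6)  # True: the drones have converged on a shared estimate
```

No drone ever sees the whole system, yet agreement emerges from local exchanges alone.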

2. Decentralized AI Architectures

Traditional AI deployments rely heavily on centralized cloud servers for data processing and decision-making.

However, as concerns around privacy, latency, and single points of failure grow, the trend is shifting towards decentralized AI architectures.

Key Features:

- Edge Computing: AI models run directly on user devices (smartphones, IoT devices) rather than sending data to central servers.

- Privacy Protection: Sensitive personal data stays local, reducing exposure risks.

- Faster Response Times: Local computation reduces the delays caused by network transmission.

- Enhanced Reliability: The system continues functioning even if parts of the network fail.

Applications:

- Federated Learning — pioneered by Google — allows AI models to be trained across millions of smartphones without transferring raw user data, preserving privacy while improving the model.

✅ Key Points:

- Processing happens at the device (“on the edge”), not in the cloud

- Enhances user privacy and data security

- Reduces latency and improves real-time responsiveness

- Makes systems more resilient against network failures
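The core idea of federated learning, averaging locally trained models instead of pooling raw data, can be sketched with a one-parameter model y = w * x. The client datasets, learning rate, and round counts are assumed values, and the sketch omits the security and communication machinery real deployments need.

```python
def local_update(w: float, data, lr: float = 0.01, epochs: int = 20) -> float:
    """Gradient descent on one client's private data (squared error)."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, client_datasets) -> float:
    """Server step: average the clients' weights, weighted by dataset size.
    Only weights travel to the server; the raw (x, y) pairs never do."""
    total = sum(len(d) for d in client_datasets)
    updates = [(local_update(w_global, d), len(d)) for d in client_datasets]
    return sum(w * n for w, n in updates) / total


# Hypothetical private datasets on three devices, all following y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, clients)
print(round(w, 3))  # 2.0
```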

3. Neuromorphic Computing

Neuromorphic computing represents a revolutionary approach to AI hardware and architecture design.

Rather than using traditional CPU/GPU architectures, neuromorphic chips are designed to mimic the structure and function of the human brain, specifically biological neurons and synapses.

Key Features:

- Massive Parallelism: Many simple operations run at the same time across the chip, much as neurons fire in parallel across a brain.

- Ultra-Low Energy Consumption: Neuromorphic systems are far more energy-efficient than conventional computers, enabling continuous learning on devices.

- Dynamic Learning: Instead of static model updates, neuromorphic systems learn adaptively through real-time synaptic adjustments.

Applications:

- Intel’s Loihi chip, a leading neuromorphic processor, is designed to accelerate AI learning processes while consuming minimal power — making it ideal for robotics, real-time pattern recognition, and energy-constrained environments.

✅ Key Points:

- Brain-inspired AI architectures mimicking neurons and synapses

- Massive parallel processing for faster, smarter learning

- Energy-efficient operation enabling portable, always-on AI

- Potential for creating AI that learns and adapts like living organisms
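Neuromorphic hardware is typically built around spiking neurons. A common textbook abstraction is the leaky integrate-and-fire neuron, sketched below in software; the threshold, leak factor, and input current are assumed values, and the code does not model any specific chip.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Leaky integrate-and-fire neuron.

    At each time step the membrane potential decays by `leak`, the input is
    added, and a spike (1) is emitted when the potential reaches `threshold`.
    """
    potential = 0.0
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset   # fire, then reset
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.4] * 6))  # [0, 0, 1, 0, 0, 1]
print(simulate_lif([0.05] * 6)) # [0, 0, 0, 0, 0, 0]  weak input leaks away
```

The neuron does nothing until enough input accumulates, which is one reason event-driven hardware of this kind can be energy-efficient.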

Challenges in Structuring AI Systems

Despite tremendous advances in Artificial Intelligence, designing structured, scalable, and ethical AI systems remains a profound technical and societal challenge. Building an AI agent that simply performs a task is relatively straightforward today. However, constructing AI systems that are transparent, adaptable, fair, secure, and sustainable — especially at large scales — exposes deep complexities that researchers and engineers continue to grapple with. Here are some of the major challenges currently facing the structuring of AI systems:

Bias and Fairness

- One of the most persistent challenges in AI development is bias in decision-making. AI models are only as unbiased as the data they are trained on, and most real-world datasets reflect historical inequalities, human prejudices, or cultural blind spots.

- Without careful design, AI systems can amplify systemic biases rather than eliminating them, leading to unfair outcomes in critical areas like hiring, lending, policing, and healthcare.

- Structuring AI to be truly fair involves integrating fairness objectives into the system’s learning process, continuously auditing model outputs, and ensuring diversity in training data. It is not enough to focus on technical performance alone; fairness must become a first-class citizen in AI architecture.
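
As a rough illustration of what "continuously auditing model outputs" can look like, the sketch below computes one simple fairness measure: the gap in positive-decision rates between groups (often called the demographic parity gap). The function names, the toy decisions, and the group labels are all hypothetical, and a real audit would use several metrics rather than this one alone.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) for two made-up groups.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_gap(decisions, groups)
print(selection_rates(decisions, groups))  # {'a': 0.75, 'b': 0.25}
print(gap)  # 0.5
```

A gap near zero means the groups receive positive decisions at similar rates. A large gap is a signal to investigate, not proof of unfairness on its own.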

Interpretability

- As AI systems grow more complex, particularly with the rise of deep neural networks, understanding why a model made a certain decision becomes harder.

- Interpretability — the ability to explain and justify outputs in a human-understandable way — is crucial for building trust, especially in high-stakes applications like medical diagnosis, judicial sentencing, or autonomous driving.

- However, many modern AI architectures function as “black boxes,” offering high predictive accuracy but little insight into their reasoning processes.

- Structuring AI for better interpretability involves techniques like attention visualization, feature attribution methods, surrogate modeling, and model simplification, enabling stakeholders to trust and verify AI behavior.
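
One of the feature attribution methods mentioned above can be sketched in a few lines. The example below uses permutation importance: shuffle one input feature and measure how much accuracy drops. The `toy_model`, the data, and the function names are assumptions made up for this sketch. In practice the model would be a trained network and the data a held-out validation set.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, seed=0):
    """Accuracy drop after shuffling one feature column across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    column = [row[feature] for row in rows]
    rng.shuffle(column)
    shuffled = [
        row[:feature] + [value] + row[feature + 1:]
        for row, value in zip(rows, column)
    ]
    return baseline - accuracy(model, shuffled, labels)


def toy_model(row):
    """Hypothetical black box: it only ever looks at feature 0."""
    return int(row[0] > 0.5)


rows = [[0.1, 5.0], [0.9, 1.0], [0.2, 3.0], [0.8, 2.0], [0.7, 9.0], [0.3, 4.0]]
labels = [toy_model(row) for row in rows]

importances = [permutation_importance(toy_model, rows, labels, f) for f in range(2)]
print(importances)  # feature 1 scores 0.0 because the model ignores it
```

Features whose shuffling barely changes accuracy contribute little to the decision, which gives stakeholders a first, model-agnostic view into a black box.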

Scalability

- Initial AI prototypes often work well in controlled environments, but scaling AI systems to real-world complexity introduces enormous difficulties.

- Complex AI architectures become harder to maintain, monitor, and evolve as they interact with messy, unpredictable environments. Issues such as computational costs, data storage demands, communication bottlenecks, and model drift over time challenge the sustainability of large AI systems. Proper modular design, efficient data pipelines, scalable cloud architectures, and continuous retraining mechanisms are necessary to ensure that AI systems grow without collapsing under their own weight.
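
Model drift, one of the issues listed above, can be monitored with very simple statistics before reaching for heavier tooling. The following sketch compares recent input values against a reference window and flags when retraining might be needed. The function names, the threshold of 2.0, and the sample numbers are illustrative assumptions rather than recommended settings.

```python
from statistics import mean, stdev


def drift_score(reference, recent):
    """Shift of the recent mean, measured in reference standard deviations."""
    return abs(mean(recent) - mean(reference)) / stdev(reference)


def needs_retraining(reference, recent, threshold=2.0):
    """Flag drift when the recent data has moved too far from the reference."""
    return drift_score(reference, recent) > threshold


# Hypothetical values of one input feature at training time and in production.
reference = [10, 11, 9, 10, 12, 8, 10, 10]
stable_batch = [10, 11, 9, 10]
shifted_batch = [15, 16, 14, 15]

print(needs_retraining(reference, stable_batch))   # False
print(needs_retraining(reference, shifted_batch))  # True
```

A check like this can run inside an automated monitoring job and trigger the continuous retraining mechanisms described above.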

Ethical Constraints

- AI systems today wield significant influence over economic outcomes, personal freedoms, and even life-and-death situations.

- Yet ensuring that AI agents act ethically remains an unresolved frontier. Many existing AI models are optimized purely for utility maximization — accuracy, profit, speed — without explicit regard for moral boundaries. Structuring AI systems to incorporate ethical reasoning requires embedding normative principles (like human rights, fairness, harm minimization) directly into decision policies.

- It also demands mechanisms for value alignment, rule enforcement, and human oversight to ensure that AI actions remain within acceptable ethical frameworks, even when facing unfamiliar dilemmas.
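
A rule-based safety layer with human oversight can be sketched as a filter that sits in front of utility maximization. Everything in this example is hypothetical: the `Action` fields, the `max_risk` limit, and the idea of a single numeric harm estimate are simplifying assumptions used to show the structure, not a complete ethical framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Action:
    name: str
    expected_utility: float
    risk_of_harm: float  # hypothetical estimate between 0 and 1


def choose_action(candidates, max_risk=0.1) -> Optional[Action]:
    """Pick the best action among those that pass the safety rule.

    Returns None when no candidate is permitted, which signals that the
    decision should be escalated to a human reviewer.
    """
    permitted = [a for a in candidates if a.risk_of_harm <= max_risk]
    if not permitted:
        return None
    return max(permitted, key=lambda a: a.expected_utility)


candidates = [
    Action("fast_route", expected_utility=0.9, risk_of_harm=0.4),
    Action("safe_route", expected_utility=0.7, risk_of_harm=0.05),
    Action("wait", expected_utility=0.2, risk_of_harm=0.0),
]

chosen = choose_action(candidates)
print(chosen.name)  # safe_route
```

The highest-utility option is rejected because it violates the rule, so the constraint is enforced before optimization rather than bolted on afterward.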

Data Privacy

- The growing dependence on massive datasets to train AI models has raised urgent concerns about user privacy, data security, and consent.

- As AI moves towards decentralized structures like federated learning and edge computing, ensuring secure, encrypted communication between nodes becomes paramount.

- Moreover, AI must be structured to respect user consent dynamically, adapting data usage according to shifting legal, ethical, and individual boundaries.

- Privacy-preserving techniques such as differential privacy, homomorphic encryption, and secure multi-party computation must be deeply integrated into AI system architectures to prevent exploitation and unauthorized surveillance.
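
To give a feel for differential privacy, the sketch below answers a counting query with Laplace noise added, which is the classic textbook mechanism for this kind of query. The function names, the `epsilon` value, and the sample ages are assumptions for illustration. A production system would rely on a vetted privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy the predicate.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical dataset: ages of seven users.
ages = [23, 35, 41, 52, 67, 29, 44]
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0)
print(round(noisy, 2))  # close to the true count of 4, but randomized
```

Smaller values of `epsilon` add more noise and give stronger privacy. Larger values give more accurate answers with weaker guarantees.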

| Challenge | Why It Matters | Potential Solutions |
|---|---|---|
| Bias and Fairness | Biased AI worsens inequality | Diverse data, fairness-aware learning algorithms, bias audits |
| Interpretability | Lack of trust and accountability | Explainable AI (XAI), model visualization, feature attribution |
| Scalability | Systems become unstable at scale | Modular architectures, cloud-native design, automated monitoring |
| Ethical Constraints | Risk of harmful or unjust actions | Ethics-by-design frameworks, rule-based safety layers, human-in-the-loop governance |
| Data Privacy | Breach of user trust and rights | Federated learning, encryption, differential privacy techniques |

Future Evolution of AI Structure (2030 and Beyond)

- Artificial Intelligence, though advancing rapidly, is still in its early developmental stages compared to the complexity of biological intelligence. While today’s AI systems are capable of performing impressive specialized tasks, they are mostly modular, brittle, and context-limited.

- By 2030 and beyond, many researchers expect the structure of AI to change substantially, becoming more dynamic, decentralized, adaptive, and human-like in how it perceives, reasons, acts, and learns.

The following are some of the most critical ways AI architecture is expected to evolve over the coming decade:

Fully Integrated Perception-Action Loops

- Today, AI systems often divide their functionality into separate modules: sensing, interpreting, deciding, and acting are treated as distinct stages.

- In contrast, future AI systems are expected to develop seamless, fully integrated perception-action loops, where sensing and acting occur continuously and in real-time, closely mirroring the way biological organisms respond to their environments.

- Rather than processing sensor data passively and then deciding on actions afterward, AI agents of 2030 may engage in constant feedback cycles, where perception dynamically informs action, and action instantly alters perception, creating an ongoing, self-correcting system.

- For instance, a future autonomous robot would not simply stop and reason after seeing an obstacle. It would adjust its path fluidly and almost instantly, just as living beings naturally do.
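
The feedback cycle described above can be illustrated with a very small closed loop in which every action immediately changes what the agent perceives next. This is a sketch under simplifying assumptions: a one-dimensional position, a proportional correction, and a made-up `gain` parameter. Real robots use far richer sensing and control.

```python
def run_feedback_loop(position, target, gain=0.5, steps=20):
    """Repeatedly sense the error and act on it in the same cycle.

    Each action changes the position, which changes the next perception,
    so sensing and acting form one continuous, self-correcting loop.
    """
    history = [position]
    for _ in range(steps):
        error = target - position   # perceive: how far off are we now?
        position += gain * error    # act: correct part of the error
        history.append(position)
    return history


history = run_feedback_loop(position=0.0, target=10.0)
print(round(history[1], 3), round(history[-1], 3))  # 5.0 10.0
```

There is no separate "stop and plan" stage here. The loop never waits for a full plan and simply keeps correcting as new perceptions arrive.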

Real-Time Adaptive Learning

- One of the limitations of current AI models is that they often require extensive retraining offline to incorporate new knowledge or skills.

- Updates involve costly, time-consuming processes using large datasets. Future AI structures aim to break this bottleneck by enabling real-time adaptive learning — the ability to learn and evolve directly within live environments.

- Systems will exhibit continuous lifelong learning, absorbing lessons from daily interactions without needing complete retraining cycles.

- They will perform instant adjustments to novel circumstances without erasing previously learned abilities, preserving accumulated knowledge even as they expand their skillsets.

- Furthermore, self-supervised learning, in which AI models generate their own training signals from unlabeled data, is likely to play an even larger role, minimizing dependence on human-annotated datasets.

- Imagine autonomous drones in 2030 seamlessly recalibrating their flight patterns in response to sudden weather changes, without engineers needing to update their algorithms manually.
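
The contrast between offline retraining and learning inside a live environment can be shown with a tiny online learner that updates its weights after every observation. The class name, the learning rate, and the synthetic data stream are assumptions for this sketch. It also does not address the harder problem of avoiding forgetting, which the points above mention.

```python
class OnlineLinearModel:
    """Linear model that learns one example at a time via gradient steps."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

    def update(self, x, y):
        """Adjust the parameters using a single (input, target) pair."""
        error = self.predict(x) - y
        self.weights = [
            w - self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error
        return error


model = OnlineLinearModel(n_features=1)

# Hypothetical live stream following y = 2x + 1, seen one sample at a time.
for _ in range(400):
    for x in (0.0, 0.5, 1.0, 1.5, 2.0):
        model.update([x], 2 * x + 1)

print(round(model.predict([3.0]), 2))  # 7.0
```

No stored dataset or retraining job is involved here. The model improves as each new observation arrives.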

Emotional and Social Intelligence Layers

- Intelligence is not solely about logical reasoning; it is deeply intertwined with emotion and social understanding.

- Current AI systems largely lack this dimension, operating on purely cognitive tasks without genuine emotional awareness. However, by 2030, AI architectures are expected to integrate emotional and social intelligence layers that allow machines to recognize, interpret, and respond appropriately to human emotions.

- Advanced models will be capable of emotion recognition by analyzing voice tones, facial expressions, text nuances, and even physiological signals.

- Decision-making processes will factor in emotional context, tailoring interactions based on empathy rather than cold logic.

- For example, future healthcare AIs might detect anxiety in a patient’s speech patterns and modify their communication approach to offer reassurance and build trust, greatly enhancing patient outcomes and user satisfaction.

AI Governance and Ethical Frameworks Embedded in Structure

- As AI systems become more powerful and autonomous, the stakes associated with their decisions increase dramatically.

- Instead of treating ethics as an afterthought or a separate monitoring module, future AI structures will embed governance and ethical reasoning directly into the decision-making core.

- This evolution means that AI agents will not just maximize utility or efficiency; they will also evaluate the ethical implications of their actions as a fundamental part of their logic.

- Every significant decision could be automatically checked against pre-defined ethical frameworks, laws, and human rights guidelines. Furthermore, AI systems will be capable of transparent reporting, documenting and explaining their decision-making paths for human audits and oversight.

- For example, autonomous military systems could be structured to recognize when a planned action might violate international humanitarian law and to abort it without awaiting external commands.

Case Study: How DeepMind Structured AI to Solve Complex Challenges

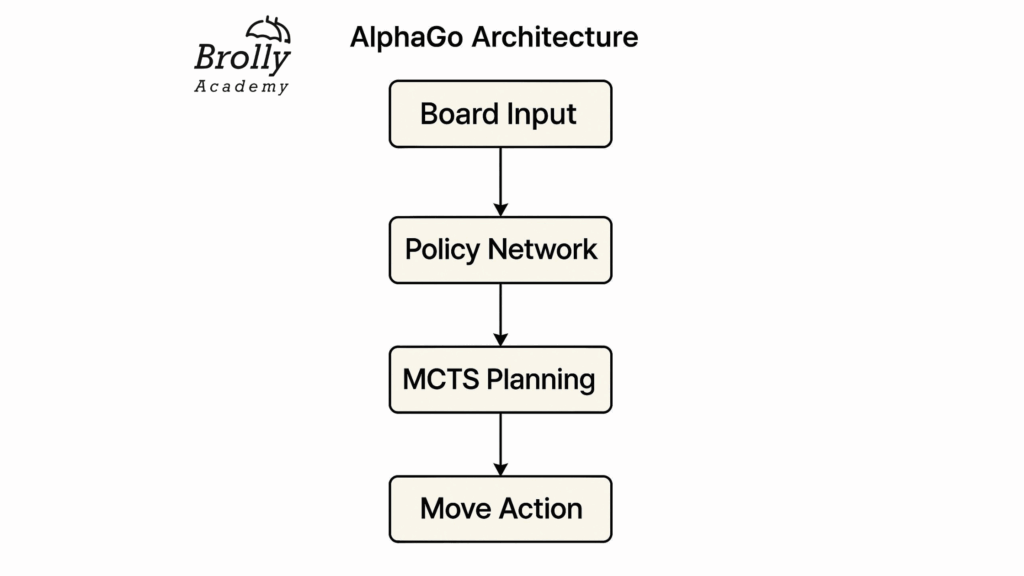

AlphaGo: Structured Intelligence to Defeat World Champions

- The challenge DeepMind set for itself with AlphaGo was unprecedented: defeat professional human players at the ancient board game Go.

- Go is significantly more complex than chess: it has roughly 10^170 legal board positions, far more than the estimated 10^80 atoms in the observable universe.

- Traditional brute-force search methods were computationally infeasible. Winning at Go required creativity, intuition, and long-term strategic thinking, skills once thought uniquely human.

Structural Approach Behind AlphaGo

| Component | How It Was Used |
|---|---|
| Perception Layer | Analyzed the current board state through deep neural networks trained on about 30 million positions from human expert games. |
| Reasoning Layer | Predicted future board configurations using Monte Carlo Tree Search (MCTS), simulating thousands of potential move sequences. |
| Learning Layer | Employed reinforcement learning, enabling AlphaGo to self-train by playing against itself and refining strategies without human intervention. |
| Action Layer | Selected moves that maximized the probability of winning, balancing both offensive and defensive tactics dynamically. |

The core architecture of AlphaGo combined:

- Deep Neural Networks to recognize board patterns and evaluate board positions.

- Policy Networks to suggest promising next moves.

- Value Networks to assess the winning potential from any given board state.

- Monte Carlo Tree Search to explore and evaluate sequences of future moves efficiently.
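
To show how these pieces can fit together, here is a simplified sketch of the kind of selection rule used inside a policy- and value-guided tree search. Each candidate move is scored by its average value estimate plus an exploration bonus weighted by the policy network's prior. The constant `c_puct`, the dictionary layout, and the numbers are illustrative assumptions, not DeepMind's actual code or settings.

```python
import math


def puct_score(q_value, prior, parent_visits, child_visits, c_puct=1.5):
    """Value estimate plus a prior-weighted exploration bonus."""
    exploration = c_puct * prior * math.sqrt(parent_visits) / (1 + child_visits)
    return q_value + exploration


def select_move(children, c_puct=1.5):
    """Pick the child move with the highest combined score."""
    parent_visits = sum(child["visits"] for child in children.values())
    return max(
        children,
        key=lambda move: puct_score(
            children[move]["q"],
            children[move]["prior"],
            parent_visits,
            children[move]["visits"],
            c_puct,
        ),
    )


# Hypothetical search statistics for three candidate moves.
children = {
    "A": {"q": 0.55, "prior": 0.6, "visits": 90},
    "B": {"q": 0.50, "prior": 0.3, "visits": 9},
    "C": {"q": 0.40, "prior": 0.1, "visits": 1},
}

print(select_move(children))  # C
```

Move A looks best so far, but it has already been explored heavily, so the rule spends the next simulation on the barely visited move C. As visit counts grow, the bonus shrinks and the search concentrates on moves with the strongest value estimates.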

Outcome

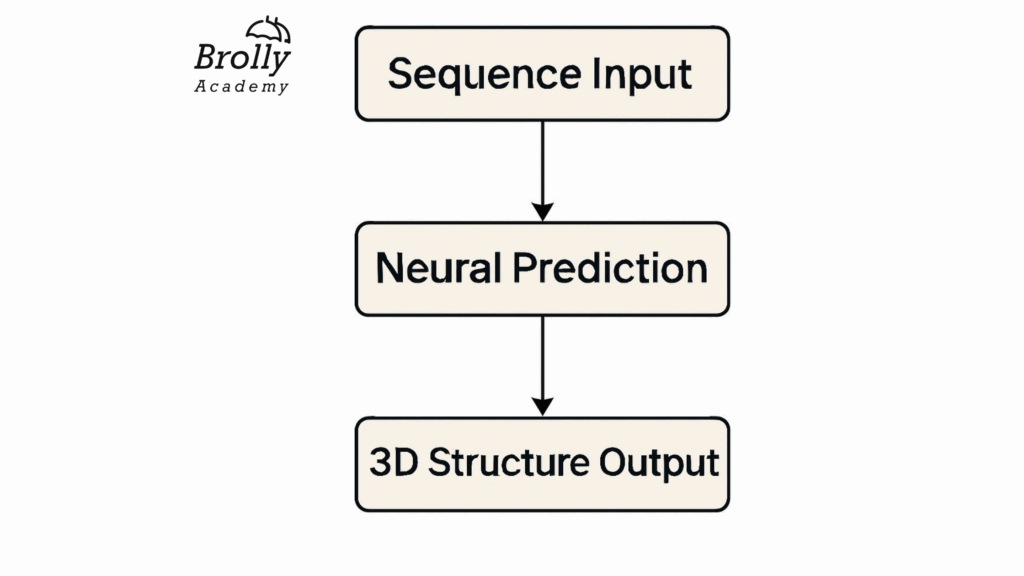

AlphaFold: Structuring AI to Solve Biology’s Grand Challenge

Structural Approach Behind AlphaFold

DeepMind structured AlphaFold with a design significantly different from AlphaGo but equally intelligent:

- Attention-Based Neural Networks: Inspired by the success of transformers in Natural Language Processing (NLP), AlphaFold used deep attention mechanisms to model the relationships between amino acids in a sequence.

- Biological Domain Knowledge Integration: Unlike purely data-driven approaches, AlphaFold incorporated known biological principles (like evolutionary relationships and physical constraints) directly into its learning architecture.

- End-to-End Differentiable Learning: The system was trained in an end-to-end manner, meaning that prediction errors were backpropagated through the entire model, allowing every layer to improve jointly.

- Continuous Feedback Loops: AlphaFold’s architecture allowed iterative refinement, predicting intermediate structures and correcting them until convergence.

Outcome

FAQ: Structure of Artificial Intelligence

What does "structure" mean in Artificial Intelligence?

Why is AI structure important?

What are the main components of an AI system structure?

How do agents fit into AI structure?

What are emerging trends in AI architecture?

Can AI structure change during its lifetime?

What is the role of Machine Learning in AI structure?

Are all AI systems built the same way?

How does data flow affect AI structure?

What is modularity in AI structure?

How does AI structure impact explainability?

What is the difference between centralized and decentralized AI structures?

Why is feedback important in AI structures?

What is a hierarchical AI structure?

How does AI structure relate to ethical AI development?

Final Conclusion: Mastering the Structure of Artificial Intelligence

Artificial Intelligence is no longer a mystery for a few researchers tucked away in labs.

It is shaping industries, economies, healthcare, education, and daily personal lives.

Understanding the structure of Artificial Intelligence is essential because it allows us to:

- Build smarter, safer, more adaptable AI systems

- Align AI behavior with human goals and ethical principles

- Accelerate innovation responsibly and sustainably

- Control, debug, and improve AI performance in real-world deployments

As we move into a future where AI will not just assist but increasingly collaborate with humans, the architects of tomorrow’s AI must be those who deeply understand how these systems are structured. Mastering the principles outlined in this guide is the first step toward becoming one of those architects.