Introduction to Machine Learning with Python

- Machine Learning with Python is a highly in-demand skill that allows computers to learn from data and make smart decisions automatically.

- Python is the preferred language for machine learning because of its simple syntax and powerful libraries like Scikit-learn, Pandas, and TensorFlow.

- It’s beginner-friendly and ideal for building real-world applications like recommendation systems, fraud detection models, and chatbots.

- Learning Machine Learning with Python gives you a strong foundation in modern tech careers across industries like finance, healthcare, e-commerce, and social media.

- At Brolly Academy, we offer a hands-on training program designed to make you job-ready.

- The course covers all core concepts including supervised and unsupervised learning, classification, regression, and model evaluation. You’ll get practical exposure through real-time projects guided by experienced trainers.

- Our Machine Learning with Python course is perfect for students, job seekers, and working professionals.

- Along with quality training, Brolly Academy also supports your career with mock interviews, resume preparation, and placement assistance.

- Brolly Academy is a top-rated training institute in Hyderabad, known for its industry-focused courses in digital marketing, data science, and programming.

- With expert trainers, hands-on projects, flexible learning modes, and strong placement support, Brolly empowers students and professionals with real-world skills to succeed in today’s competitive tech world.

1. Introduction to Machine Learning with Python

- Overview of Machine Learning

- What is Machine Learning?

- Machine Learning (ML), a core branch of Artificial Intelligence (AI), enables computers to learn from data and improve their performance without being explicitly programmed for each task. Instead of following fixed rules, machine learning systems identify patterns and make predictions or decisions based on data.

- At its core, machine learning involves feeding data into algorithms, which then analyze it and extract useful patterns. Models can be retrained or updated as new data arrives, adapting their behavior to improve accuracy and efficiency. Common types of machine learning include:

- Supervised Learning: The model is trained on labeled data (input with known output), such as predicting house prices based on features like size and location.

- Unsupervised Learning: The model identifies hidden patterns or groupings in data without predefined labels, such as customer segmentation.

- Reinforcement Learning: The model learns by interacting with its environment and receiving feedback in the form of rewards or penalties.

- Machine learning powers many technologies we use daily, including voice assistants, recommendation systems, fraud detection, and self-driving cars. It plays a key role in fields like healthcare, finance, marketing, and robotics.

- As data continues to grow, machine learning will remain at the forefront of intelligent, data-driven decision-making.

- History and Evolution of Machine Learning

- The history of machine learning dates back to the mid-20th century, when computer scientists first began exploring how machines could simulate human learning. In 1959, Arthur Samuel coined the term machine learning while working on a program that played checkers and improved its strategy through experience.

- In the 1960s and 70s, early pattern recognition and statistical models laid the foundation for supervised learning. However, limited computing power and data availability slowed progress. The 1980s saw the rise of neural networks, particularly the backpropagation algorithm, which allowed systems to learn more complex patterns.

- The real breakthrough came in the 2000s with the explosion of big data and advances in cloud computing, which made it possible to train more sophisticated models on massive datasets. Methods developed over the preceding decades, such as decision trees, support vector machines, and ensemble methods, saw wide practical adoption in this period.

- Today, machine learning has evolved into a powerful field within artificial intelligence, with applications in voice assistants, recommendation engines, healthcare diagnostics, and autonomous vehicles. Deep learning, a subfield of machine learning, has further revolutionized areas like natural language processing and computer vision.

- As technology continues to advance, the evolution of machine learning will drive innovation across industries, shaping the future of how machines learn and adapt.

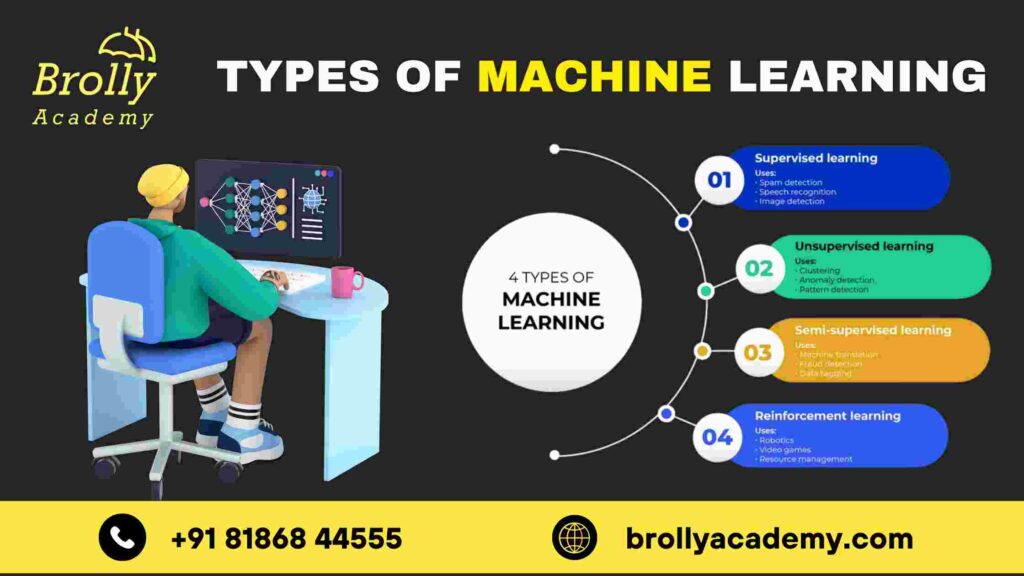

- Types of Machine Learning

Machine Learning (ML) is broadly categorized into three main types, each with distinct learning methods and use cases:

1. Supervised Learning

Supervised learning involves training a model on a labeled dataset, where both inputs and outputs are known. The algorithm learns to map inputs to the correct output and is used for tasks like classification (e.g., spam detection) and regression (e.g., predicting house prices). Popular supervised learning algorithms include Linear Regression for predicting continuous values, Decision Trees for classification and regression, and Support Vector Machines (SVMs) for separating classes with complex boundaries.

2. Unsupervised Learning

In unsupervised learning, the model is given unlabeled data and must identify patterns or groupings on its own. It’s widely used for clustering (e.g., customer segmentation) and association (e.g., market basket analysis). Key algorithms include K-Means Clustering, Hierarchical Clustering, and Apriori.

3. Reinforcement Learning

Reinforcement learning is based on a reward system: an agent interacts with an environment, takes actions, and learns from feedback in the form of rewards or penalties to improve its performance. It’s commonly used in robotics, gaming, and autonomous systems. Key reinforcement learning algorithms include Q-Learning and Deep Q-Networks (DQN).

These types form the foundation of machine learning applications that power intelligent systems across industries like healthcare, finance, marketing, and automation.
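To make the reward-and-penalty idea behind reinforcement learning more concrete, here is a minimal sketch of tabular Q-Learning. The environment is a made-up five-cell corridor where the agent starts on the left and earns a reward of 1 for reaching the rightmost cell. The corridor, the `step` helper, and the values chosen for `ALPHA`, `GAMMA`, and `EPSILON` are all assumptions for illustration, not part of any library.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal (hypothetical toy setup)
ACTIONS = [0, 1]      # 0 = move left, 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state, action):
    """Toy environment: move along the corridor, reward 1 on reaching the goal."""
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q-table: one row per state
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random sometimes (and on ties),
            # otherwise take the action with the higher estimated value.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-Learning update: move the estimate toward
            # reward + discounted value of the best next action.
            future = 0.0 if done else GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (reward + future - q[state][action])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-goal states after training.
policy = ["right" if row[1] > row[0] else "left" for row in q_table[:-1]]
print(policy)
```

After training, the learned policy should prefer moving right in every cell, because that is the only way to reach the reward. Deep Q-Networks follow the same update idea but replace the table with a neural network.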

- Key Concepts and Terminology

Understanding machine learning (ML) begins with mastering its key concepts and terms. These foundational ideas are critical for building, training, and evaluating ML models.

- Algorithm: A structured set of rules or instructions that enables machines to learn patterns and make decisions based on data.

- Model: The output created after a learning algorithm has been trained on data. Trained models power data-driven predictions and decisions across diverse applications, from healthcare to finance and marketing.

- Training Data: The dataset used to “teach” the model, containing input-output pairs (in supervised learning).

- Test Data: A separate dataset used to evaluate how well the model performs on unseen data.

- Features: Input variables or attributes (e.g., age, salary) used by machine learning models to make accurate predictions.

- Labels: The target output the model is trying to predict (only in supervised learning).

- Overfitting: When a model learns the training data too well, including noise, and performs poorly on new data.

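- Underfitting: When a model is too simple to capture the underlying patterns in the data, resulting in poor performance on both the training data and new data.

- Accuracy, Precision, Recall: Metrics used to evaluate classification models. Accuracy is the share of all predictions that are correct, precision is the share of predicted positives that are truly positive, and recall is the share of actual positives that the model finds.

- Learning Rate: A hyperparameter that controls how large a step the model’s parameters take on each update during training.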

Mastering these terms helps in understanding how ML systems learn, improve, and are applied across various real-world scenarios.
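The evaluation metrics listed above are easier to remember once you compute them by hand. The sketch below counts true and false positives and negatives for a small, made-up set of labels and predictions, then derives accuracy, precision, and recall from those counts. The function name `classification_metrics` and the sample data are illustrative assumptions. In practice, Scikit-learn offers ready-made functions for the same metrics.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when nothing was predicted/actually positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Made-up example: 1 = spam, 0 = not spam.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example the model makes 6 correct predictions out of 8, raises one false alarm, and misses one spam email, so all three metrics come out to 0.75.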

- Introduction to Python for Machine Learning

Python is one of the most popular programming languages used in machine learning due to its simplicity, readability, and vast ecosystem of libraries and tools. It is beginner-friendly and widely supported by the data science and AI community, making it the go-to language for both new learners and experienced developers.

What makes Python ideal for machine learning is its rich collection of libraries such as:

- NumPy and Pandas for efficient numerical computation, data analysis, and data manipulation,

- Matplotlib and Seaborn for data visualization,

- Scikit-learn for implementing standard machine learning algorithms,

- TensorFlow and PyTorch for building, training, and deploying deep learning models (neural networks).

- Python supports rapid prototyping and experimentation, making it a vital tool in efficient machine learning workflows. Whether you’re cleaning datasets, training models, evaluating performance, or deploying solutions, Python provides efficient and flexible tools for every step.

- Additionally, Python integrates easily with web frameworks, cloud services, and databases, making it powerful for building and scaling real-world AI applications.

- In summary, Python’s versatility, extensive library support, and active community make it an essential language for anyone looking to start or grow in the field of machine learning.

2. Types of Machine Learning: Supervised, Unsupervised, and Reinforcement Learning

- Introduction to Machine Learning

- Machine Learning (ML) is a subset of artificial intelligence (AI) that enables computers to learn from data and make decisions or predictions without being explicitly programmed. Instead of following rigid instructions, machine learning models use algorithms to detect patterns, improve performance, and adapt over time.

- The concept is simple yet powerful: in general, the more relevant, high-quality data a model is trained on, the better it becomes at identifying trends and making accurate predictions. This makes machine learning an essential tool for solving complex, data-driven problems across industries such as healthcare, finance, marketing, e-commerce, and autonomous systems.

There are three main types of machine learning:

- Supervised Learning: The model learns from labeled data, where each input comes with a known output.

- Unsupervised Learning: The model discovers hidden patterns, structures, or groupings in unlabeled data, without predefined outputs.

- Reinforcement Learning: The model learns which actions work best through trial and error, interacting with an environment and receiving feedback in the form of rewards or penalties.

- Machine learning powers many everyday technologies like voice assistants, recommendation engines, fraud detection systems, and even self-driving cars. It is also at the heart of predictive analytics, customer segmentation, and automation tools.

- With the exponential growth of data and computing power, machine learning is rapidly shaping the future of technology. Whether you’re a beginner or an experienced developer, understanding machine learning is key to staying competitive in the digital age.

- Understanding Supervised Learning

Supervised learning is a core type of machine learning where a model is trained using a labeled dataset—that is, data that includes both input variables and known output values (also called labels). The goal is to train the algorithm to understand the relationship between inputs and outputs, enabling accurate predictions on new, unseen data.

Supervised learning is commonly used for two main tasks:

- Classification: Predicting discrete labels or categories. For example, classifying emails as spam or not spam.

- Regression: Predicting continuous values. For example, forecasting house prices based on features like size, location, and age.

Popular supervised learning algorithms include:

- Linear Regression

- Logistic Regression

- Decision Trees

- Support Vector Machines (SVM)

- k-Nearest Neighbors (k-NN)

The process involves splitting the dataset into training and testing sets, allowing the model to learn patterns from one part and be evaluated on another. The model’s performance is measured using metrics like accuracy, precision, recall, F1-score, or mean squared error, depending on the task.

Supervised learning is widely used in real-world applications such as email filtering, customer churn prediction, fraud detection, and medical diagnosis, making it one of the most practical and powerful machine learning techniques.
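The train-and-evaluate process described above can be sketched in a few lines with Scikit-learn. This example uses the small Iris flower dataset that ships with the library and a Logistic Regression classifier. The 80/20 split, the `random_state` value, and the choice of model are assumptions made for illustration, and other datasets or algorithms would follow the same pattern.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Features (X) are flower measurements; labels (y) are the species.
X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows so the model is scored on data it has not seen.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Learn the input-to-label mapping from the training portion only.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Evaluate on the held-out test portion.
y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.2f}")
```

Scoring on the held-out test set, rather than on the training rows, is what tells you whether the model generalizes to new data.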

- Common Algorithms in Supervised Learning

Supervised learning algorithms are designed to learn from labeled data, where both input features and output labels are known. These algorithms are widely used for solving classification and regression problems. Below are some of the most commonly used supervised learning algorithms:

1. Linear Regression

Used for predicting continuous values, such as sales or prices, by modeling the relationship between independent variables and a dependent variable.

2. Logistic Regression

Ideal for binary classification tasks (e.g., spam vs. non-spam), logistic regression predicts the probability of an outcome based on input features.

3. Decision Trees

These models split the data into branches to reach predictions, making them easy to understand and interpret. Useful for both classification and regression.

4. Support Vector Machines (SVM)

Support Vector Machines (SVMs) are supervised learning algorithms that find the hyperplane separating the classes with the largest margin, including in high-dimensional feature spaces. They are highly effective for complex classification tasks.

5. k-Nearest Neighbors (k-NN)

This algorithm classifies a new data point by the majority label among its ‘k’ closest neighbors in the training data. It is simple to implement and often performs well on small to medium-sized datasets, although prediction slows down as the training set grows.

6. Random Forest

Random Forest is a robust ensemble learning algorithm that builds multiple decision trees and aggregates their outputs to boost predictive accuracy, reduce overfitting, and enhance overall model reliability.

These algorithms form the backbone of many real-world applications in healthcare, finance, marketing, and more.
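Of the algorithms above, k-Nearest Neighbors is simple enough to write from scratch, which makes it a good way to see what "learning from labeled data" looks like in code. The sketch below uses only the Python standard library. The function name `knn_predict` and the six sample points are made up for illustration, and a real project would more likely use Scikit-learn's implementation.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    # Pair every training point's distance to the query with its label.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # Majority vote among the k nearest neighbors.
    return Counter(nearest_labels).most_common(1)[0][0]


# Made-up 2D points belonging to two groups, "A" and "B".
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 6.0), (6.0, 7.0)]
labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(points, labels, (2.0, 2.0)))  # query near the "A" group
print(knn_predict(points, labels, (6.0, 5.0)))  # query near the "B" group
```

Note that k-NN has no separate training step: the "model" is simply the stored training data, and all the work happens at prediction time.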

- Applications of Supervised Learning

Supervised learning is one of the most widely used machine learning techniques due to its ability to deliver accurate and actionable predictions. It plays a vital role in a variety of industries, helping organizations solve complex problems through data-driven insights.

1. Email Spam Detection

Supervised learning models like Naive Bayes and Logistic Regression are used to classify emails as spam or non-spam based on past labeled examples.

2. Fraud Detection

In the finance sector, supervised algorithms help detect fraudulent transactions by learning patterns from historical fraud cases and flagging suspicious activity.

3. Customer Churn Prediction

Businesses use models to identify which customers are likely to leave based on usage behavior, allowing targeted retention strategies.

4. Medical Diagnosis

In healthcare, supervised learning is used to predict diseases or conditions (e.g., diabetes, cancer) based on patient data like symptoms, age, and lab results.

5. Credit Scoring

Banks and financial institutions assess loan eligibility and risk by analyzing applicant data with supervised models like Decision Trees and SVMs.

6. Image and Speech Recognition

Supervised learning helps classify images (e.g., recognizing objects in photos) and transcribe speech to text using labeled datasets.

These applications demonstrate the real-world power and versatility of supervised learning in driving smarter, faster decisions.

- Understanding Unsupervised Learning

Unsupervised learning is a type of machine learning where algorithms analyze and interpret data without labeled outputs. Unlike supervised learning, where the model is trained on input-output pairs, unsupervised learning explores the underlying structure of the data to discover patterns, groupings, or relationships on its own.

The two most common tasks in unsupervised learning are:

- Clustering: Grouping similar data points together based on their features. For example, customer segmentation in marketing, where users with similar behavior are grouped into clusters.

- Dimensionality Reduction: Simplifying large datasets by reducing the number of features while preserving essential patterns. Techniques such as Principal Component Analysis (PCA) are commonly used for data visualization and noise reduction.

Popular unsupervised learning algorithms include:

- K-Means Clustering

- Hierarchical Clustering

- DBSCAN (Density-Based Spatial Clustering)

- Apriori (used for market basket analysis)

Unsupervised learning is widely used in data mining, anomaly detection, recommendation systems, image compression, and genomic data analysis. Since no labels are required, it’s especially useful when labeled data is unavailable or too costly to obtain.

In essence, unsupervised learning helps uncover hidden insights in data, making it a powerful tool for exploratory analysis and decision-making in data science.
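As a small illustration of clustering, the sketch below runs K-Means from Scikit-learn on six made-up 2D points that form two visibly separate groups. No labels are supplied, and the algorithm assigns the group IDs on its own. The data and the choice of two clusters are assumptions for the example. With real data, choosing the number of clusters is part of the analysis.

```python
import numpy as np
from sklearn.cluster import KMeans

# Made-up, unlabeled data: two groups that are far apart.
points = np.array([
    [1.0, 1.1], [1.2, 0.9], [0.8, 1.0],   # first group
    [8.0, 8.2], [8.1, 7.9], [7.9, 8.0],   # second group
])

# Ask K-Means for two clusters; n_init repeats the search from several
# random starting positions and keeps the best result.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
cluster_ids = kmeans.fit_predict(points)

print(cluster_ids)              # one cluster ID per point
print(kmeans.cluster_centers_)  # the center of each discovered group
```

The cluster IDs themselves (0 or 1) are arbitrary. What matters is that points in the same group receive the same ID.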

3. Key Concepts in Machine Learning: Features, Labels, and Models

- Overview of Machine Learning

Machine Learning (ML), a powerful subset of Artificial Intelligence (AI), enables systems to learn from data, detect patterns, and make intelligent decisions with minimal human input. It plays a vital role in today’s technology-driven world, powering applications like recommendation systems, fraud detection, image recognition, and predictive analytics. ML algorithms are typically categorized into supervised, unsupervised, and reinforcement learning, each suited for specific types of problems. Tools like Python, TensorFlow, Scikit-learn, and Pandas are widely used in ML development. By leveraging large datasets, ML models can continuously improve accuracy and performance. As industries adopt digital transformation, machine learning becomes crucial for gaining insights, automating tasks, and enhancing decision-making. Its ability to analyze vast data efficiently makes ML a cornerstone of modern data science and AI solutions.

- Understanding Features in Machine Learning

Features in machine learning are the input variables or attributes that models use to learn patterns and make predictions. They represent the measurable properties of the data, such as age, income, product type, or temperature, depending on the context. Choosing the right features is critical to building accurate and efficient models. This process, known as feature selection, helps eliminate irrelevant or redundant data that could reduce performance. Additionally, feature engineering focuses on creating new features or modifying existing ones to improve a machine learning model’s performance and accuracy. Good features directly impact how well an algorithm understands the relationship between input and output data. In supervised learning, features help predict outcomes, while in unsupervised learning, they aid in finding structure or clusters within the data. Tools such as Pandas, NumPy, and Scikit-learn are widely used for processing, refining, and optimizing features in machine learning workflows. Ultimately, understanding and optimizing features is essential for building high-performing, data-driven machine learning models.
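Here is a minimal Pandas sketch of the two ideas described above: feature engineering (deriving a new column from existing ones) and a very simple form of feature selection (dropping a column that carries no information). The column names and values are hypothetical, and real feature selection usually relies on more careful checks than "does the column ever change".

```python
import pandas as pd

# Hypothetical customer data for illustration.
df = pd.DataFrame({
    "total_spend": [120.0, 300.0, 50.0, 800.0],
    "num_orders": [4, 10, 1, 20],
    "country": ["IN", "IN", "IN", "IN"],  # same value in every row
})

# Feature engineering: create a new feature from existing ones.
df["avg_order_value"] = df["total_spend"] / df["num_orders"]

# Feature selection (simplest case): a column that never varies
# cannot help a model tell rows apart, so drop it.
constant_cols = [col for col in df.columns if df[col].nunique() <= 1]
features = df.drop(columns=constant_cols)

print(constant_cols)
print(features)
```

The derived `avg_order_value` column captures something neither original column shows directly, which is often where engineered features earn their keep.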

- Defining Labels and Their Importance

In machine learning, labels are the target values or outputs that models learn to predict based on input features. In supervised learning, each data point is associated with a label, helping the algorithm learn the relationship between inputs (features) and outputs (labels). For example, in a spam detection system, the label indicates whether an email is “spam” or “not spam.” Labels are crucial for training accurate models, as they serve as the ground truth during the learning process. Without labeled data, a supervised model has nothing to compare its predictions against, so it cannot measure its errors or adjust its predictions. In classification tasks, labels are discrete categories, while in regression tasks, they are continuous values. Properly defined and high-quality labels support effective training and better predictive outcomes. In summary, labels play a central role in teaching models how to generalize from known data to make predictions on new, unseen data.

- Types of Machine Learning Models

Machine learning models are broadly categorized into supervised, unsupervised, and reinforcement learning—each designed to solve specific types of problems using different learning approaches. In supervised learning, models are trained on labeled data, where both input and output are known. Common algorithms include Linear Regression, Decision Trees, and Support Vector Machines (SVMs), used for tasks like classification and regression.

Unsupervised learning focuses on analyzing unlabeled data to uncover hidden patterns, structures, or groupings within the dataset. Popular models include K-Means Clustering, Hierarchical Clustering, and Principal Component Analysis (PCA), often used for customer segmentation and data compression.

Reinforcement learning involves training an agent to make decisions by interacting with an environment and learning from rewards or penalties. Algorithms like Q-Learning and Deep Q-Networks (DQN) are commonly used in robotics, gaming, and self-driving technologies.

Each model type serves specific applications, and choosing the right one depends on the problem, data availability, and desired outcomes.

- Introduction to Python for Machine Learning

Python is the most widely used programming language for machine learning, thanks to its simplicity, readability, and rich ecosystem of libraries. It enables developers and data scientists to efficiently build, train, and deploy machine learning models for diverse real-world applications across industries. Python’s intuitive syntax allows beginners to focus on learning machine learning concepts without getting overwhelmed by complex code.

Key libraries like NumPy and Pandas support numerical computations and data manipulation, while Matplotlib and Seaborn offer powerful data visualization tools. For building models, libraries such as Scikit-learn, TensorFlow, and PyTorch provide robust frameworks for everything from basic algorithms to deep learning and neural networks.

Python also supports rapid prototyping, enabling quick experimentation and iteration—essential for machine learning workflows. The language integrates easily with databases, web frameworks, and big data tools, making it highly versatile for end-to-end solutions.

With strong community support, abundant tutorials, and open-source tools, Python empowers users at all skill levels to explore machine learning. Whether you’re working on image recognition, natural language processing, or predictive analytics, Python offers the flexibility and power to turn data into actionable insights.

4. Data Preprocessing with Python: Cleaning and preparing data

- Understanding Data Preprocessing

Data preprocessing is an important step in machine learning where raw data is cleaned and organized so it can be used to build models. Real-world data is often incomplete, inconsistent, or contains noise, which can negatively affect model performance. Preprocessing tasks include handling missing values, removing duplicates, encoding categorical variables, feature scaling, and normalization. This ensures that the data is consistent and that algorithms can learn effectively from it. Tools like Pandas, NumPy, and Scikit-learn are commonly used for efficient data cleaning and preparation. Proper preprocessing helps improve model accuracy, training speed, and overall performance. Without data preprocessing, even the best machine learning algorithms can give poor results. Understanding and applying the right preprocessing techniques is essential for building reliable and high-performing machine learning models.

- Importance of Data Cleaning

Data cleaning is a vital step in data preprocessing, ensuring that datasets are accurate, consistent, and free of errors before feeding them into machine learning models. Raw data often contains missing values, duplicates, inaccurate entries, or outliers, which can lead to misleading results and reduced model accuracy. By removing or correcting such issues, data cleaning enhances the quality and reliability of the dataset, enabling algorithms to learn more effectively. Clean data also helps in improving model performance, training efficiency, and overall predictive accuracy. Tools like Pandas, OpenRefine, and Scikit-learn provide features that make it easier to clean and transform data. Ignoring this step can result in biased insights, faulty decisions, and poor business outcomes. That’s why data cleaning is a key step for successful machine learning and sound data-driven decisions.

- Common Data Cleaning Techniques

Data cleaning is an important step in getting data ready for machine learning and analysis. Common techniques include removing duplicates to avoid redundancy and ensure accuracy. Handling missing values is another key step: this can involve deleting rows, filling in with the mean or median, or using interpolation. Outlier detection and removal helps maintain data integrity by eliminating values that distort analysis. Standardizing formats, such as dates and category names, keeps the dataset consistent. Encoding categorical variables (e.g., one-hot or label encoding) prepares non-numeric data for modeling. Removing irrelevant or noisy data improves focus on meaningful features. Additionally, scaling and normalization align numerical data ranges for better algorithm performance. Pandas, NumPy, and Scikit-learn are useful tools that make data preprocessing quick and simple. Implementing these techniques enhances data quality, leading to more accurate and reliable machine learning models.
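Several of these cleaning steps can be chained together in Pandas. The sketch below works on a tiny, made-up table and shows format standardization, duplicate removal, min-max scaling, and one-hot encoding in that order. The column names and values are assumptions for illustration, and the right order and choice of steps depends on your dataset.

```python
import pandas as pd

# Hypothetical raw data with inconsistent text formatting and repeated rows.
raw = pd.DataFrame({
    "city": ["Hyderabad", "hyderabad ", "Delhi", "Delhi", "Mumbai"],
    "signup_date": ["2024-01-05", "2024-01-05", "2024-02-10",
                    "2024-02-10", "2024-03-15"],
    "income": [50000, 50000, 65000, 65000, 80000],
})

clean = raw.copy()

# Standardize formats: trim spaces, unify capitalization, parse dates.
clean["city"] = clean["city"].str.strip().str.title()
clean["signup_date"] = pd.to_datetime(clean["signup_date"])

# Remove exact duplicate rows (only detectable after standardizing).
clean = clean.drop_duplicates().reset_index(drop=True)

# Min-max scaling: rescale income to the 0..1 range.
income = clean["income"]
clean["income_scaled"] = (income - income.min()) / (income.max() - income.min())

# One-hot encoding: turn the categorical column into numeric indicator columns.
encoded = pd.get_dummies(clean, columns=["city"])

print(encoded)
```

Notice that the two Hyderabad rows only count as duplicates once the stray space and lowercase letter are fixed, which is why format standardization comes first here.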

- Handling Missing Values

Handling missing values is a critical step in the data cleaning process, as missing data can negatively impact the performance and accuracy of machine learning models. Common techniques include deletion, where rows with missing values are dropped when only a small share of rows is affected, or a column is dropped when most of its values are missing. Imputation is another widely used method: missing values can be filled in using the mean, median, or mode of a feature. For time-based or ordered data, methods like forward fill or interpolation help keep the data continuous and complete. Advanced methods like K-Nearest Neighbors (KNN) imputation or predictive modeling are also used when patterns in the data can be used to estimate missing entries. The choice of method depends on the data type and context. Properly handling missing values ensures cleaner datasets, improves model training, and reduces bias, making it an essential part of data preprocessing in machine learning workflows.
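The sketch below compares four of these options side by side in Pandas: deleting rows, filling with the median, forward fill, and linear interpolation. The two-column table is made up for illustration, and KNN imputation and model-based approaches are not shown.

```python
import numpy as np
import pandas as pd

# Hypothetical data with one missing value in each column.
df = pd.DataFrame({
    "age": [25.0, np.nan, 35.0, 45.0],
    "temperature": [20.0, np.nan, 24.0, 26.0],
})

# Option 1, deletion: drop every row that has any missing value.
dropped = df.dropna()

# Option 2, imputation: fill the gap with the column's median.
age_filled = df["age"].fillna(df["age"].median())

# Option 3, forward fill: reuse the last known value (ordered data).
temp_ffill = df["temperature"].ffill()

# Option 4, interpolation: estimate the gap from its neighbors.
temp_interp = df["temperature"].interpolate()

print(dropped)
print(age_filled.tolist())
print(temp_ffill.tolist())
print(temp_interp.tolist())
```

Forward fill repeats the previous reading (20.0), while interpolation places the missing value halfway between its neighbors (22.0). Which one is more sensible depends on how the underlying quantity behaves over time.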

- Dealing with Outliers

Dealing with outliers is an important aspect of data preprocessing in machine learning. Outliers are unusual data points that are very different from the rest and can affect model accuracy by giving misleading results. Common techniques for detecting outliers include visualization tools like box plots and scatter plots, as well as statistical methods such as Z-score analysis and Interquartile Range (IQR). Once identified, outliers can be removed, transformed, or capped (e.g., using winsorization). Sometimes outliers can be important, so deciding how to handle them depends on expert knowledge and the specific problem. For sensitive models like linear regression, removing outliers can improve accuracy, while robust models like decision trees may handle them better. Managing outliers effectively leads to cleaner, more reliable data and enhances the overall performance of machine learning algorithms.
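The Interquartile Range (IQR) method mentioned above can be sketched with NumPy as follows. The sample values are made up, and the multiplier of 1.5 is the common rule of thumb rather than a fixed law. The example flags values outside the fences and then shows one way of capping them instead of deleting them.

```python
import numpy as np

# Made-up measurements with one suspiciously large value.
values = np.array([10, 12, 11, 13, 12, 11, 10, 95], dtype=float)

# Quartiles and the interquartile range.
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1

# Fences: anything beyond 1.5 * IQR from the quartiles is flagged.
lower = q1 - 1.5 * iqr
upper = q3 + 1.5 * iqr

is_outlier = (values < lower) | (values > upper)
outliers = values[is_outlier]

# Capping: pull extreme values back to the nearest fence instead of dropping them.
capped = np.clip(values, lower, upper)

print(f"Fences: {lower} to {upper}")
print("Outliers:", outliers)
print("Capped:", capped)
```

Here only the value 95 falls outside the fences. Whether to remove it, cap it, or keep it is a judgment call that depends on what the data represents.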

5. Exploratory Data Analysis (EDA) with Python

- Introduction to Exploratory Data Analysis

Exploratory Data Analysis (EDA) is the process of analyzing and summarizing datasets to uncover patterns, spot anomalies, test hypotheses, and check assumptions before applying machine learning models. It helps data scientists understand the structure and meaning of data through both statistical techniques and visual tools. EDA typically includes examining distributions, relationships between variables, missing values, and outliers. Visualizations like histograms, box plots, scatter plots, and correlation matrices are commonly used to make data insights clearer. Performing EDA is crucial for spotting data quality issues and choosing the most relevant features for accurate machine learning models. Without proper EDA, important trends or errors may go unnoticed, leading to poor model performance. It is a foundational step in any data science project, guiding smarter decisions in data cleaning, transformation, and model building.

- Key Concepts of EDA

Key concepts of Exploratory Data Analysis (EDA) focus on understanding the data before building machine learning models. The process starts with data summarization, including measures like mean, median, mode, and standard deviation to understand central tendencies and variability. Data visualization is another core element: tools like histograms, box plots, and scatter plots help reveal patterns, trends, and relationships. Missing value analysis is crucial for identifying gaps in the dataset that may impact model accuracy. Outlier detection helps find extreme values that can skew results. Correlation analysis shows how features relate to each other, which helps in feature selection. EDA also involves checking data types, distribution shapes, and class imbalances. These insights guide better preprocessing, feature engineering, and modeling decisions. By mastering these key EDA concepts, data scientists ensure more accurate, efficient, and informed machine learning workflows.
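The summary measures named above are available in Python's built-in `statistics` module, so you can try them without installing anything. The list of values below is made up for illustration, and `pstdev` computes the population standard deviation (use `stdev` for a sample).

```python
import statistics

# Made-up values for illustration.
values = [2, 4, 4, 4, 5, 5, 7, 9]

summary = {
    "mean": statistics.mean(values),       # average value
    "median": statistics.median(values),   # middle value when sorted
    "mode": statistics.mode(values),       # most frequent value
    "std_dev": statistics.pstdev(values),  # spread around the mean
}

for name, value in summary.items():
    print(f"{name}: {value}")
```

For this list the mean is 5, the median is 4.5, the mode is 4, and the population standard deviation is 2.0. A gap between the mean and the median, as seen here, is a quick hint that the distribution is skewed.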

- Importance of EDA in Machine Learning

Exploratory Data Analysis (EDA) plays a critical role in machine learning by helping data scientists understand the underlying structure and quality of the data before modeling. EDA uncovers important patterns, trends, and relationships between variables that guide feature selection and model choice. It also helps identify issues like missing values, outliers, and inconsistent data types that can negatively affect model performance. By using statistical summaries and visualizations (e.g., box plots, histograms, correlation heatmaps), EDA helps ensure cleaner, more accurate input for algorithms. EDA can also reduce the risk of overfitting or underfitting, because a clear picture of the data helps in choosing a model of suitable complexity. Without proper EDA, critical insights can be missed, leading to poor model accuracy and unreliable predictions. In short, EDA builds a solid foundation for successful machine learning by turning raw data into meaningful insights.

- Getting Started with Python for EDA

Getting started with Python for Exploratory Data Analysis (EDA) is easy, thanks to its powerful libraries and user-friendly syntax. Tools like Pandas allow you to load, inspect, and manipulate data efficiently. With NumPy, you can perform numerical operations, while Matplotlib and Seaborn help create insightful visualizations like histograms, box plots, and scatter plots. Begin by importing your dataset using Pandas and exploring basic statistics with .describe(), .info(), and .value_counts(). Use visual tools to identify distributions, missing values, and outliers. Correlation heatmaps can help spot relationships between features. Python’s flexibility and extensive community support make it ideal for beginners and experts alike. Whether you’re working with a CSV file or a large dataset, Python provides everything you need to explore and understand your data—making it the perfect starting point for any machine learning project.
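A first pass over a dataset with Pandas might look like the sketch below. To keep the example self-contained it builds a small, made-up DataFrame in code. With your own data you would typically load a file instead, for example with `pd.read_csv`. The column names and values are assumptions for illustration.

```python
import pandas as pd

# Hypothetical dataset; replace with your own loaded data.
df = pd.DataFrame({
    "age": [22, 35, 58, 41, 29],
    "income": [25000, 48000, 90000, 61000, 39000],
    "segment": ["student", "professional", "professional",
                "professional", "student"],
})

# Column names, data types, and non-null counts.
df.info()

# Summary statistics for the numeric columns.
stats = df.describe()
print(stats)

# How often each category appears.
segment_counts = df["segment"].value_counts()
print(segment_counts)

# Number of missing values per column.
missing_per_column = df.isna().sum()
print(missing_per_column)

# Pairwise correlation between numeric features.
correlation = df[["age", "income"]].corr()
print(correlation)
```

These few calls already answer the basic EDA questions: what types the columns have, how the numeric values are distributed, whether categories are balanced, where data is missing, and which features move together.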

- Data Visualization Techniques in EDA

Data visualization is a key component of Exploratory Data Analysis (EDA), helping analysts and data scientists uncover patterns, trends, and anomalies in datasets. Effective visualizations simplify complex data, making insights more accessible and actionable. Common techniques include histograms for understanding the distribution of numerical features and box plots to detect outliers and compare distributions. Scatter plots help visualize relationships between two continuous variables, revealing trends or correlations.

Pair plots extend this by displaying multiple variable relationships in one view, making it easier to spot patterns across features. Bar charts are great for showing categorical data, making it easy to spot the most common categories and find any missing or rare values. Heatmaps, especially those showing correlation matrices, are valuable for understanding feature relationships and detecting multicollinearity—when variables are too closely related.

Line plots are commonly used in time series analysis to observe trends, patterns, and fluctuations over time. Python tools like Matplotlib, Seaborn, and Plotly make these visualizations simple to create and customize. Used together during EDA, these techniques give a deeper understanding of the dataset, guide data cleaning, feature selection, and model design, and ultimately support better machine learning model performance.
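
To make a few of these chart types concrete, here is a small sketch using Matplotlib only. The data is synthetic and the column names (height, weight) and output file name are assumptions chosen for the example; Seaborn or Plotly would produce similar charts with less code.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen so the script also runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Synthetic data (hypothetical columns) so the example is self-contained.
rng = np.random.default_rng(0)
height = rng.normal(170, 10, 200)
weight = 0.9 * height - 90 + rng.normal(0, 5, 200)
df = pd.DataFrame({"height": height, "weight": weight})

fig, axes = plt.subplots(2, 2, figsize=(10, 8))

# Histogram: distribution of one numerical feature.
axes[0, 0].hist(df["height"], bins=20)
axes[0, 0].set_title("Histogram of height")

# Box plot: spread and potential outliers.
axes[0, 1].boxplot(df["weight"].to_numpy())
axes[0, 1].set_title("Box plot of weight")

# Scatter plot: relationship between two continuous variables.
axes[1, 0].scatter(df["height"], df["weight"], s=10)
axes[1, 0].set_title("Height vs. weight")

# Heatmap of the correlation matrix.
corr = df.corr()
image = axes[1, 1].imshow(corr.to_numpy(), vmin=-1, vmax=1, cmap="coolwarm")
axes[1, 1].set_xticks(range(len(corr.columns)))
axes[1, 1].set_xticklabels(corr.columns)
axes[1, 1].set_yticks(range(len(corr.columns)))
axes[1, 1].set_yticklabels(corr.columns)
axes[1, 1].set_title("Correlation heatmap")
fig.colorbar(image, ax=axes[1, 1])

fig.tight_layout()
fig.savefig("eda_plots.png")  # in an interactive session, plt.show() works too
```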

6. Introduction to Popular Python Libraries: Scikit-learn, Pandas, Numpy, and Matplotlib

- Overview of Python for Machine Learning

Python is widely used in machine learning due to its simple syntax, easy readability, and robust library support, making it ideal for both beginners and professionals. It enables developers and data scientists to efficiently build, train, and deploy machine learning models across domains like healthcare, finance, and marketing. Python’s library ecosystem is diverse and powerful: use NumPy and Pandas for data manipulation, Matplotlib and Seaborn for visualization, and Scikit-learn to run classic machine-learning algorithms. For deep learning tasks, TensorFlow and PyTorch provide robust tools for neural network development.

Python supports rapid prototyping, making it ideal for testing and improving models quickly. With vast community support, open-source resources, and strong integration capabilities, Python has become the go-to language for machine learning projects. Whether you’re a beginner or an expert, Python provides the flexibility and power needed to turn data into actionable insights.

- Introduction to Scikit-learn

Scikit-learn is a widely used open-source Python library for machine learning, built on top of NumPy, SciPy, and Matplotlib. It offers efficient, easy-to-use tools for data analysis, data mining, and predictive modeling. Scikit-learn supports a wide range of supervised and unsupervised learning algorithms, including linear regression, decision trees, random forests, support vector machines (SVMs), clustering, and dimensionality reduction. Its consistent API makes it ideal for both beginners and experienced data scientists.

With built-in tools for model selection, cross-validation, feature extraction, and preprocessing, Scikit-learn streamlines the machine learning workflow from start to finish. It is perfect for developing prototypes and testing models quickly. Whether you’re working on classification, regression, or clustering tasks, Scikit-learn offers the flexibility and power needed to build high-performing machine learning solutions in Python.
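
The consistent API mentioned above usually comes down to the same few steps: split the data, call fit, call predict, and score the result. The sketch below shows that pattern on the Iris dataset bundled with Scikit-learn. The choice of logistic regression, the 25% test split, and the random seed are arbitrary assumptions for the example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Load a small built-in dataset as feature matrix X and label vector y.
X, y = load_iris(return_X_y=True)

# Hold out 25% of the rows for testing, keeping class proportions similar.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Every Scikit-learn estimator follows the same fit / predict pattern.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
predictions = model.predict(X_test)

accuracy = accuracy_score(y_test, predictions)
print(f"Test accuracy: {accuracy:.2f}")
```

Swapping in a different estimator, such as a decision tree, only changes the line that creates the model; the rest of the code stays the same.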

- Key Features of Scikit-learn

Scikit-learn offers a rich set of features that make it a powerful and user-friendly library for machine learning in Python. Some of its key features include:

- Wide range of algorithms: Covers classification, regression, clustering, and dimensionality reduction, with algorithms such as SVM, Random Forest, K-Means, and PCA.

- Consistent and simple API: Easy-to-understand syntax, making model training and evaluation straightforward.

- Data preprocessing tools: Includes scaling, normalization, encoding categorical variables, and handling missing values.

- Model selection and evaluation: Built-in support for cross-validation, grid search, and performance metrics like accuracy, precision, and recall.

- Feature selection and extraction: Helps boost model performance by identifying and using the most relevant features in the dataset.

- Integration with other libraries: Scikit-learn integrates smoothly with libraries like NumPy, Pandas, and Matplotlib, enabling efficient data handling, analysis, and visualization in machine learning workflows.

Scikit-learn is a top choice for both beginners and experts aiming to build reliable, scalable, and efficient machine learning models.
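
Several of the features listed above can be combined in a single workflow. The following is one possible sketch, not a recommended configuration: it chains scaling, feature selection, and an SVM in a Pipeline, tunes two settings with a cross-validated grid search, and reports precision and recall on held-out data. The dataset, the step names, and the parameter grid are assumptions picked for the example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.metrics import classification_report
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Preprocessing, feature selection, and the model run as one estimator.
pipeline = Pipeline([
    ("scale", StandardScaler()),                  # data preprocessing
    ("select", SelectKBest(score_func=f_classif, k=10)),  # feature selection
    ("svm", SVC()),                               # the classifier
])

# Grid search with 5-fold cross-validation over a small, illustrative grid.
param_grid = {"select__k": [5, 10, 20], "svm__C": [0.1, 1, 10]}
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

print("Best parameters:", search.best_params_)
test_accuracy = search.score(X_test, y_test)
print(classification_report(y_test, search.predict(X_test)))
```

Keeping the scaler and selector inside the pipeline means they are refit on each training fold, so information from the validation fold does not leak into preprocessing.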

- Common Algorithms in Scikit-learn

Scikit-learn offers a wide range of machine learning algorithms that are easy to implement and widely used in both academic and industry projects. Some of the most common algorithms include:

- Linear Regression – Used for predicting continuous values.

- Logistic Regression – Well suited to both binary and multi-class classification problems, making it a fundamental algorithm in supervised learning.

- Decision Trees – Intuitive yet powerful models used for both classification and regression tasks, offering easy interpretation and effective performance.

- Random Forest – An ensemble learning algorithm that improves prediction accuracy and reduces overfitting by aggregating the results of multiple decision trees.

- Support Vector Machines (SVM) – Highly effective for classification tasks, especially in high-dimensional spaces, where they find the optimal boundary between classes.

- K-Nearest Neighbors (KNN) – A simple, non-parametric algorithm used for both classification and regression that makes predictions based on the closest data points.

- Naive Bayes – Based on Bayes’ Theorem, commonly used for text classification.

- K-Means Clustering – A widely used unsupervised learning algorithm that groups data into distinct clusters based on similarity.

These algorithms in Scikit-learn come with built-in tools for training, testing, and tuning, making model development fast and reliable.
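
As a quick, hedged comparison, the sketch below runs most of the algorithms from the list on the Iris dataset with default or near-default settings. Linear regression is left out because the target here is a class label rather than a continuous value, and K-Means is run separately because it does not use the labels. The scores are only meaningful for this small dataset and say little about how the algorithms compare elsewhere.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Supervised classifiers: same data, same evaluation, different estimators.
classifiers = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "SVM": SVC(),
    "KNN": KNeighborsClassifier(n_neighbors=5),
    "Naive Bayes": GaussianNB(),
}

# Mean accuracy over 5-fold cross-validation for each classifier.
scores = {
    name: cross_val_score(clf, X, y, cv=5).mean()
    for name, clf in classifiers.items()
}
for name, score in scores.items():
    print(f"{name}: {score:.3f}")

# Unsupervised clustering: K-Means groups the rows without seeing y.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_labels = kmeans.fit_predict(X)
print("Cluster sizes:", [int((cluster_labels == k).sum()) for k in range(3)])
```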

- Introduction to Pandas

Pandas is a powerful open-source Python library designed for data analysis and data manipulation. Built on top of NumPy, it provides flexible and easy-to-use data structures like Series (1D) and DataFrame (2D), allowing users to handle structured data efficiently.

Pandas is widely used in data science, machine learning, and statistical analysis for tasks such as data cleaning, transformation, aggregation, and visualization. With intuitive syntax and powerful functions, you can easily load data from various file formats like CSV, Excel, JSON, or SQL, and perform operations like filtering, grouping, merging, and reshaping. Whether you’re exploring a dataset or preparing data for modeling, Pandas streamlines the workflow and saves time. It integrates well with other Python libraries like Matplotlib, Seaborn, and Scikit-learn, making it a cornerstone tool for anyone working with data in Python.
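
The operations named above can be sketched on two small tables. The table contents and column names (orders, customers, amount, city) are hypothetical and exist only to show filtering, merging, grouping, and reshaping in a few lines.

```python
import pandas as pd

# A Series is a labeled 1D array; a DataFrame is a 2D table of columns.
ages = pd.Series([25, 32, 41], name="age")

# Hypothetical example tables.
orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4, 5],
    "customer_id": [101, 102, 101, 103, 102],
    "amount": [250.0, 120.0, 80.0, 300.0, 60.0],
})
customers = pd.DataFrame({
    "customer_id": [101, 102, 103],
    "city": ["Hyderabad", "Mumbai", "Hyderabad"],
})

# Filtering: keep only orders above 100.
large_orders = orders[orders["amount"] > 100]

# Merging: attach each customer's city to their orders.
merged = orders.merge(customers, on="customer_id", how="left")

# Grouping: total order amount per city.
city_totals = merged.groupby("city")["amount"].sum()

# Reshaping: cities as rows, customers as columns, summed amounts as values.
pivot = merged.pivot_table(
    index="city", columns="customer_id", values="amount",
    aggfunc="sum", fill_value=0,
)

print(large_orders)
print(city_totals)
print(pivot)
```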


Conclusion

Machine Learning with Python is one of the most accessible and powerful tools for breaking into artificial intelligence (AI) and data science, enabling beginners and professionals alike to build intelligent systems efficiently. As data continues to grow at an exponential pace, understanding machine learning is no longer optional—it’s essential. Whether you’re a beginner eager to learn how algorithms work or a professional looking to scale data-driven solutions, Python provides the perfect ecosystem to build, train, and deploy machine learning models.

Python is the go-to language for machine learning because of its simplicity, community support, and vast library ecosystem. Libraries like NumPy, Pandas, and Matplotlib make data analysis and visualization effortless. Scikit-learn provides robust tools for implementing classic ML algorithms like decision trees, support vector machines (SVMs), k-nearest neighbors (k-NN), and random forests. For deep learning, frameworks such as TensorFlow and PyTorch empower developers to build complex neural networks for tasks involving natural language processing and computer vision.

Scikit-learn, in particular, stands out for its user-friendly API and consistent syntax, making it ideal for prototyping, training, and evaluating ML models efficiently. Coupled with Pandas and NumPy for data manipulation, and visualization tools like Matplotlib and Seaborn, Python enables seamless development from raw data to actionable insights.

In today’s data-driven world, machine learning with Python is transforming industries such as healthcare, finance, marketing, and e-commerce. From fraud detection systems and recommendation engines to predictive analytics and self-driving cars, machine learning is at the core of innovation.

By mastering the fundamentals of machine learning and leveraging Python’s flexible tools, individuals and businesses alike can unlock smarter decisions, improved customer experiences, and a significant competitive edge. Whether you’re working on a small research project or a full-scale production system, Python and machine learning offer limitless possibilities.

Start your journey today, and harness the power of machine learning with Python to shape the future.

FAQs

What is machine learning?

Machine learning is a field of AI that enables computers to learn from data and make decisions or predictions without being explicitly programmed.

Why is Python popular for machine learning?

Python is popular for machine learning because of its simple syntax, strong community support, and libraries such as NumPy, Pandas, Scikit-learn, TensorFlow, and PyTorch.

What are the main types of machine learning?

Supervised learning, unsupervised learning, and reinforcement learning.

What is supervised learning?

What is unsupervised learning?

Unsupervised learning finds patterns and structures in unlabeled data.

What is reinforcement learning?

What is Scikit-learn used for?

Scikit-learn is a Python library used for implementing machine learning algorithms and data preprocessing.

What is a feature in machine learning?

A feature is an input variable used to train models, like age or income.

What is a label in machine learning?

What is overfitting?

What is underfitting?

What is a decision tree?

A decision tree is an intuitive, easy-to-interpret model used for both classification and regression tasks.

What is linear regression?

A technique to predict continuous numerical outcomes using linear relationships.

What is logistic regression used for?

It is used for classification tasks, most commonly binary ones like spam detection, and it can also handle multi-class problems.

What is k-nearest neighbors (k-NN)?

An algorithm that classifies data based on the closest examples in the dataset.

What is a support vector machine (SVM)?

SVM is a powerful classification algorithm that finds the best boundary between classes.

What is a random forest?

A random forest is an ensemble learning algorithm that aggregates the results of multiple decision trees to improve accuracy and reduce overfitting.

What is data preprocessing?

It’s the process of cleaning and organizing raw data before model training.

How do you handle missing values in data?

By deleting, imputing with mean/median, or using prediction-based imputation methods.

What is exploratory data analysis (EDA)?

EDA involves summarizing and visualizing data to uncover patterns before modeling.

What are common EDA tools in Python?

Pandas and NumPy for loading and summarizing data, and Matplotlib, Seaborn, and Plotly for visualization.

What is feature scaling?

What is the difference between classification and regression?

What is clustering?

Clustering is grouping similar data points using unsupervised learning.