Introduction to AI and Machine Learning

Introduction

Chances are, you’ve interacted with Artificial Intelligence (AI) and Machine Learning (ML) today — without even thinking about it.

But what exactly are AI and Machine Learning?

And why do they matter so much right now?

What is Artificial Intelligence (AI)?

Imagine you’re teaching a dog how to fetch a ball.

At first, the dog doesn’t understand. You throw the ball, and it looks at you in confusion. Over time, through repeated encouragement and training, the dog starts to recognize patterns. Eventually, it learns: ball thrown = go fetch.

Now replace the dog with a computer.

Replace the ball with a task — like recognizing a human face in a photo.

Replace your encouragement with data and algorithms.

Definition (in simple words):

Artificial Intelligence is the ability of a machine to perform tasks that normally require human intelligence — like understanding language, recognizing images, solving problems, or even making decisions.

Key areas where AI operates:

- Learning from experience (data)

- Reasoning and making logical choices

- Adapting to new situations

- Understanding speech, text, or visuals

- Planning actions or predicting outcomes

Real-World Examples You See Every Day:

- Your iPhone’s Face ID unlocking your phone.

- YouTube automatically suggesting videos you might enjoy.

- Chatbots helping you book tickets or answer questions online.

- Google Photos organizing your pictures by people, places, or events.

In short: many modern AI systems don’t just follow hardcoded instructions. They learn from data and adapt.

What is Machine Learning (ML)?

Imagine This

You run a bakery. You notice that customers buy more chocolate cake on rainy days. At first, you don’t understand why, but after seeing this trend repeatedly, you start preparing more chocolate cakes when rain is forecast.

That’s pattern recognition. Similarly, a machine can be trained to observe thousands of patterns in huge datasets — way faster and deeper than a human ever could — and use those insights to predict future behavior.

How Machine Learning Works

- Input Data: Tons of examples are given to the computer (like 1 million pictures of dogs and cats).

- Training: The machine looks for hidden patterns (like pointy ears = cat).

- Prediction: The machine tries to predict: “Is this a cat or a dog?” when shown a new picture.

- Feedback: If it’s wrong, it adjusts itself, becoming more accurate over time.
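The four steps above can be sketched with a perceptron, one of the simplest learning algorithms. This is a minimal illustration under stated assumptions, not how production image classifiers work. Each picture is reduced to two hypothetical scores (ear pointiness and snout length, both invented for this example), and the six training examples are made up.

```python
# Minimal perceptron: predict, compare with the correct label, adjust when wrong.
# Each example is (ear_pointiness, snout_length) with hypothetical 0-1 scores.
# Label +1 = cat, -1 = dog. All values are invented for illustration.

def predict(weights, bias, features):
    """Prediction: weighted sum of the features, then pick a side of the boundary."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score >= 0 else -1

def train(examples, epochs=20, learning_rate=0.1):
    """Training with feedback: nudge the weights only after a wrong prediction."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in examples:
            if predict(weights, bias, features) != label:
                # Feedback: shift the boundary toward the correct answer.
                weights = [w + learning_rate * label * x for w, x in zip(weights, features)]
                bias += learning_rate * label
    return weights, bias

# Input data: labeled examples.
training_data = [
    ((0.9, 0.2), 1), ((0.8, 0.3), 1), ((0.7, 0.1), 1),     # cats
    ((0.2, 0.9), -1), ((0.3, 0.8), -1), ((0.1, 0.7), -1),  # dogs
]

weights, bias = train(training_data)
new_picture = (0.85, 0.25)
print("cat" if predict(weights, bias, new_picture) == 1 else "dog")  # → cat
```

Real systems learn from raw pixels and use far more parameters. The predict, check, and adjust loop is similar in spirit.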

Quick Real-World Machine Learning Examples:

- Netflix: Recommends shows based on what you watched before.

- Google Search: Learns which links you’re more likely to click on.

- Email: Filters spam by recognizing patterns common in spam messages.

- Credit Cards: Banks detect fraud by flagging purchases that don’t match your usual behavior.

Why is Machine Learning Important for AI?

Without Machine Learning, AI would stay frozen — like a machine following a fixed set of rules forever. Machine Learning makes AI systems dynamic, self-improving, and adaptable. They don’t just rely on pre-programmed rules; they evolve. In simple terms: Machine Learning is the fuel that powers most modern AI systems.

How is AI Different from Machine Learning?

- AI is the broader concept — the dream of machines being able to simulate human intelligence.

- ML is a specific approach — a practical method to achieve AI by teaching machines to learn from data.

Simple Analogy

Imagine a big tree.

- The entire tree is Artificial Intelligence — the overall goal of making machines intelligent.

- One strong branch of the tree is Machine Learning — a specific technique to help reach that goal.

Without Machine Learning, many modern AI applications would not exist today.

In fact, Machine Learning powers the vast majority of the AI we interact with daily!

Key Differences

| Feature | Artificial Intelligence (AI) | Machine Learning (ML) |

| Definition | Making machines simulate human intelligence | Teaching machines to learn from data |

| Scope | Very broad | Specific subset of AI |

| Goal | Create intelligent behavior | Improve from experience without explicit programming |

| Example | Chatbots, self-driving cars, recommendation engines | Netflix suggestions, fraud detection systems |

How Deep Learning Fits In

Just when you think you’ve understood AI and ML, another term shows up: Deep Learning. Don’t worry — it’s simpler than it sounds.

What is Deep Learning?

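Deep Learning is a subset of Machine Learning that uses artificial neural networks with many layers (hence “deep”). Key idea: Instead of telling the machine what features to look for (like “if ears are pointy, it’s a cat”), a deep learning model figures them out by itself by training on massive amounts of data.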

Everyday Examples of Deep Learning:

- Facial Recognition in smartphones

- Voice Assistants understanding natural speech

- Self-driving cars recognizing street signs and obstacles

- Medical diagnosis through analyzing X-rays and MRIs

✅ Deep Learning is why today’s AI can recognize voices and faces (and even attempt to read emotions), in some tasks matching or exceeding human accuracy.

How Data Science Connects to AI and ML

Simple Definition

- Data Science focuses on extracting knowledge and insights from data — whether through statistics, visualization, machine learning, or even basic analysis.

- Machine Learning is one of the tools that Data Scientists use to find patterns, make predictions, and support business decisions.

Think of it Like This

- Data Science = The art of understanding and working with data.

- Machine Learning = A powerful method inside Data Science to automate learning from data.

- Artificial Intelligence = The ultimate dream: building systems that behave intelligently, sometimes using Machine Learning, sometimes using other methods.

✅ Venn Diagram Visual Tip: Imagine three overlapping circles: Data Science, Machine Learning, and Artificial Intelligence. They are all linked, but each has different specialties.

The Birth of a Dream: Early Foundations of AI (1950s)

1956: The Birth of Artificial Intelligence

In 1956, a few years after Alan Turing proposed the Turing Test (1950), a group of ambitious scientists including John McCarthy, Marvin Minsky, and Herbert Simon gathered at the Dartmouth Conference. McCarthy had coined the term Artificial Intelligence in the proposal for this meeting, and the conference officially established it.

The vision was grand:

- Create machines that could reason like humans.

- Make computers that could learn and improve.

- Develop systems that could solve problems autonomously.

At the time, optimism was sky-high. Many researchers believed true machine intelligence was just a few decades away.

The First AI Programs (Late 1950s – 1960s)

The early years saw some remarkable, if primitive, AI systems:

- Logic Theorist (1956): Developed by Allen Newell and Herbert Simon, this program could prove mathematical theorems, mimicking human logical thinking.

- ELIZA (1966): An early chatbot created by Joseph Weizenbaum, ELIZA simulated a psychotherapist by rephrasing users’ inputs into questions. (If you said, “I’m sad,” ELIZA might reply with something like, “Why are you sad?”)

- Shakey the Robot (late 1960s): The first general-purpose mobile robot that could reason about its own actions. It could plan routes and move around obstacles. That is primitive by today’s standards, but it was groundbreaking at the time.

✅ Fun Fact: Shakey was called “the first electronic person” by the press!

The Harsh Reality: AI Winters (1970s – 1990s)

However, by the 1970s, the dream started to fade.

Progress was much slower than expected.

Computers were expensive and weak.

Early AI systems could solve toy problems but failed badly when applied to real-world challenges.

Funding dried up.

Governments and companies lost interest.

Researchers faced what came to be known as the first AI winter — a period of disappointment and reduced investment.

Another AI winter hit in the late 1980s for similar reasons — limited computing power and exaggerated promises that could not be fulfilled.

✅ Real-World Lesson: Technology breakthroughs often take longer than we expect, but persistence matters.

The Comeback: Rise of Machine Learning (1990s – 2000s)

In the 1990s, a quiet revolution began:

Instead of trying to teach machines “reasoning” directly, scientists focused on learning from data.

This was the rise of Machine Learning.

Rather than hard-coding every rule, researchers let computers analyze huge datasets and find patterns themselves.

Some major milestones:

- 1997: IBM’s Deep Blue defeats world chess champion Garry Kasparov. It was the first time a computer beat a reigning world chess champion in a match under standard tournament conditions. Deep Blue relied mainly on brute-force search rather than learning from data, but its win showed how far computing power had come.

- Late 1990s: The internet exploded, producing oceans of data. Machine Learning techniques like decision trees, support vector machines, and clustering algorithms started showing real results.

✅ Big Shift: From hand-crafting intelligence ➔ to letting machines learn from experience.

The Deep Learning Era: AI's Second Golden Age (2010s – Present)

Around 2010, something amazing happened:

Deep Learning — an advanced form of Machine Learning using artificial neural networks — became practical.

Thanks to:

- Massive datasets (from the internet and smartphones)

- Powerful GPUs (originally built for gaming!)

- New algorithms and training techniques (notably from researchers like Geoffrey Hinton)

Deep Learning models started matching or outperforming humans on specific tasks like image recognition, speech recognition, and even playing complex games.

Key Breakthroughs

- 2012: AlexNet, a deep neural network, crushed traditional models in the ImageNet competition, identifying images with unprecedented accuracy.

- 2016: AlphaGo, built by DeepMind, defeated Lee Sedol, one of the world’s top Go players. Many experts had expected this feat to be at least a decade away.

- 2018–Today: Language models like BERT, GPT-3, and now GPT-4 have pushed the boundaries of what machines can understand and generate in human language.

✅ New Reality: AI is no longer an experiment. It’s an everyday technology, powering everything from Alexa’s voice recognition to Tesla’s driver-assistance features.

Timeline Overview (Quick Recap)

| Year | Milestone |

| 1950 | Alan Turing proposes the Turing Test |

| 1956 | Dartmouth Conference — AI term coined |

| 1966 | ELIZA chatbot created |

| 1970s–1980s | AI winters (disillusionment and funding cuts) |

| 1997 | Deep Blue beats Kasparov |

| 2012 | Deep Learning boom (AlexNet) |

| 2016 | AlphaGo defeats Go champion |

| 2020s | AI integrated into daily life (smart homes, self-driving cars, language models) |

Types of Machine Learning: Fully Explained

Different Ways Machines Learn

Not all Machine Learning is the same. Just like humans learn differently — sometimes from being taught, sometimes by exploring — machines also have different learning methods. In the world of ML, learning is usually categorized into three main types:

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

Let’s walk through each one, with simple examples you can relate to.

Supervised Learning: Learning with a Teacher

Imagine you’re learning to recognize different types of fruit. Your teacher shows you a series of fruits and tells you their names:

- “This is an apple.” 🍎

- “This is a banana.” 🍌

- “This is an orange.” 🍊

Over time, you learn their features: apples are red and round, bananas are long and yellow.

This is Supervised Learning. The machine is “supervised” with correct answers during its training.

How Supervised Learning Works:

- Input: Labeled data (example + correct answer).

- Training: The machine studies the examples and learns the patterns.

- Prediction: When given new data, the machine guesses the label based on past learning.
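The input, training, and prediction steps can be sketched with a nearest-centroid classifier. This is one simple supervised method, chosen here for brevity, and it is not the only option. The fruit measurements are invented: each fruit is described by a hypothetical (length_cm, width_cm) pair.

```python
# Supervised learning sketch (nearest-centroid classifier).
# Training: average the features of each labeled class.
# Prediction: pick the class whose average is closest to the new example.
from math import dist

def train(labeled_examples):
    sums, counts = {}, {}
    for features, label in labeled_examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}

def predict(centroids, features):
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Input: labeled data as ((length_cm, width_cm), correct_answer). Values are made up.
fruits = [
    ((8.0, 7.5), "apple"), ((7.5, 7.0), "apple"), ((8.5, 8.0), "apple"),
    ((19.0, 3.5), "banana"), ((21.0, 4.0), "banana"), ((18.0, 3.8), "banana"),
]

centroids = train(fruits)
print(predict(centroids, (8.2, 7.8)))  # → apple
```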

Real-World Examples:

- Email Spam Detection: Spam emails are labeled during training. Later, new incoming emails are predicted as spam or not spam.

- Credit Risk Assessment: Labeled data about past loans (good/bad) is used to predict the risk of new borrowers.

- Medical Diagnosis: Labeled X-ray images help AI models identify diseases.

✅ Remember: Supervised Learning = Learning from examples with answers.

Unsupervised Learning: Learning Without a Teacher

Now imagine you walk into a marketplace filled with fruits — but no one tells you their names.

You notice that some fruits are round and red, others are long and yellow.

You start grouping them yourself, even without knowing the exact names.

This is Unsupervised Learning.

The machine is left on its own to find patterns, groups, and relationships inside the data — without any labels or correct answers.

How Unsupervised Learning Works:

- Input: Unlabeled data (just raw information).

- Training: The machine looks for similarities, patterns, and clusters by itself.

- Outcome: The machine organizes data into meaningful groups or detects unusual patterns.

Real-World Examples:

- Customer Segmentation: Businesses group customers into segments based on purchasing behavior, without labeling them manually.

- Market Basket Analysis: Retailers discover products often bought together, like chips and soda.

- Anomaly Detection: Banks use unsupervised learning to flag unusual credit card transactions (possible fraud) without needing labeled examples of fraud.

✅ Remember: Unsupervised Learning = Finding hidden structures inside unlabeled data.
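Market basket analysis needs no labels: it simply counts which items appear together. The sketch below shows only the first step of association-rule mining, which counts how often each pair co-occurs (often called "support"). The baskets are invented. Real systems run algorithms such as Apriori or FP-Growth on far larger data.

```python
# Unsupervised pattern discovery: find item pairs that are often bought together.
# No labels are given; the structure comes from the data itself.
from collections import Counter
from itertools import combinations

baskets = [
    {"chips", "soda", "salsa"},
    {"chips", "soda"},
    {"bread", "butter"},
    {"chips", "soda", "bread"},
    {"bread", "butter", "milk"},
]

pair_counts = Counter()
for basket in baskets:
    # sorted() gives each pair a consistent order, e.g. ("chips", "soda").
    for pair in combinations(sorted(basket), 2):
        pair_counts[pair] += 1

# Support = fraction of baskets that contain the pair.
for pair, count in pair_counts.most_common(2):
    print(pair, count / len(baskets))
# Expected: ('chips', 'soda') 0.6, then ('bread', 'butter') 0.4
```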

Reinforcement Learning: Learning from Trial and Error

Now, picture teaching a dog a new trick, like “roll over.”

You don’t explain it step-by-step.

Instead, you give a reward (like a treat) when the dog does it correctly and nothing when it doesn’t.

Over time, the dog learns through trial and error — because good behaviors are rewarded.

This is Reinforcement Learning.

How Reinforcement Learning Works:

- Agent: The learner (dog, robot, AI program).

- Environment: The world in which it operates.

- Actions: The agent takes actions and receives feedback (reward or penalty).

- Goal: Maximize the total reward over time by learning the best strategy.
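The agent, environment, and reward loop can be sketched with a multi-armed bandit, which is about the simplest reinforcement-learning setting. Here the environment is three slot-machine "levers" with hidden payout probabilities, and those probabilities are invented for this example. The agent uses an epsilon-greedy strategy: it usually picks the action it currently believes is best, and occasionally tries a random one. This is one common choice among many.

```python
# Reinforcement learning sketch: an epsilon-greedy agent learns which action pays best.
import random

def pull(lever, rng):
    """Environment: returns reward 1 with a hidden probability, else 0."""
    payout_probabilities = [0.2, 0.5, 0.8]  # hidden from the agent (made-up values)
    return 1 if rng.random() < payout_probabilities[lever] else 0

def run_agent(steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    estimates = [0.0, 0.0, 0.0]  # agent's estimated value of each action
    counts = [0, 0, 0]
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            lever = rng.randrange(3)  # explore: try a random action
        else:
            lever = max(range(3), key=lambda a: estimates[a])  # exploit the best guess
        reward = pull(lever, rng)
        total_reward += reward
        counts[lever] += 1
        # Feedback: update the running average reward for this action.
        estimates[lever] += (reward - estimates[lever]) / counts[lever]
    return estimates, total_reward

estimates, total = run_agent()
best = max(range(3), key=lambda a: estimates[a])
print("best lever:", best, "total reward:", total)
```

The small amount of random exploration is what lets the agent discover the better levers instead of sticking with its first lucky guess.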

Real-World Examples:

- Self-Driving Cars: RL is used, mostly in research and simulation, to learn how to navigate traffic safely by rewarding successful trips.

- Game Playing AI: AlphaGo sharpened its Go skills with reinforcement learning, playing millions of games against itself.

- Robotics: Teaching robots to walk or manipulate objects.

✅ Remember: Reinforcement Learning = Learning by rewards and penalties, like training a pet.

Quick Summary Table

| Type | How It Learns | Example |

| Supervised Learning | From labeled examples | Spam detection, medical diagnosis |

| Unsupervised Learning | From discovering patterns in unlabeled data | Customer segmentation, anomaly detection |

| Reinforcement Learning | From trial and error with rewards | Self-driving cars, game AI |

Major Applications of AI and Machine Learning in Daily Life

AI and ML Are Already Everywhere — You Just Don’t Notice It

You don’t need to work in Silicon Valley to feel the impact of Artificial Intelligence and Machine Learning.

They have quietly woven themselves into our daily lives — in ways you may not even realize. From the apps you use to the way you shop, travel, and stay healthy — AI and ML are working behind the scenes, making things faster, smarter, and more personal. Let’s explore some major applications with real examples you can connect with.

1. Healthcare: AI That Can Save Lives

Imagine a doctor that never sleeps, never gets tired, and can instantly analyze millions of medical records. That’s the promise of AI in healthcare.

Real-World Applications:

- Medical Imaging: AI models from Google and DeepMind have detected conditions such as diabetic retinopathy and lung cancer in medical scans. In some studies, these models matched or outperformed human specialists.

- Personal Health Monitoring: Wearables like Fitbit and Apple Watch use ML to monitor heart rate and oxygen levels and to detect irregularities in real time.

- Drug Discovery: Companies are using AI to predict how molecules will behave, speeding up the discovery of new treatments.

✅ Impact: Early diagnosis, personalized treatment plans, and better healthcare for millions, all powered by AI.

2. Finance: Smart Money Management

- Fraud Detection: Banks use ML models to flag unusual transactions instantly, protecting millions of accounts from fraud.

- Credit Scoring: Machine Learning algorithms can predict credit risk more accurately by analyzing spending habits, not just income and credit history.

- Algorithmic Trading: Financial firms use AI to analyze market data and execute trades at lightning speed, aiming to maximize returns.

✅ Impact: Safer banking, smarter investing, and better protection of financial assets.

3. Retail and E-commerce: Personalized Shopping Experiences

Ever wonder how Amazon seems to know what you need before you even search for it?

That’s the magic of AI and ML in retail.

Real-World Applications:

- Recommendation Engines: ML algorithms suggest products based on your browsing history, purchases, and what similar customers bought.

- Chatbots for Customer Service: AI-powered bots, like those on Shopify stores or Walmart’s website, answer routine questions 24/7 without waiting for a human agent.

- Inventory Management: Predictive analytics help retailers stock the right products at the right time, reducing waste and shortages.

✅ Impact: Shopping that feels tailor-made for you — faster, easier, and more satisfying.

4. Transportation: Smarter, Safer Travel

Real-World Applications:

- Navigation Apps (Google Maps, Waze): Real-time traffic analysis suggests the fastest routes, thanks to ML algorithms crunching data from millions of users.

- Self-Driving Cars: Companies like Tesla use Deep Learning to help cars recognize obstacles, read street signs, and even predict pedestrian movements.

- Public Transportation Optimization: AI helps cities optimize bus schedules and reduce congestion based on usage patterns.

✅ Impact: Less time stuck in traffic, fewer accidents, and smoother journeys.

5. Education: Personalized Learning

Real-World Applications:

- Adaptive Learning Platforms (Khan Academy, Coursera): These platforms use AI to adjust course difficulty based on how a student is performing.

- Grading Automation: Software can grade multiple-choice tests instantly, and Machine Learning can even evaluate short essays with increasing accuracy.

- AI Tutors: Virtual tutors help students practice languages, solve math problems, and prepare for exams.

✅ Impact: Customized education experiences that help students learn faster and smarter.

6. Entertainment: Smarter Recommendations and Creative AI

Real-World Applications:

- Netflix and Spotify Recommendations: ML algorithms analyze your watching and listening habits to recommend what you’ll love next.

- Content Creation: AI tools like DALL-E, Jasper, and AIVA can now create art, write stories, and even compose music!

✅ Impact: Endless streams of personalized entertainment, and even co-creation with AI artists.

7. Cybersecurity: AI Defending Against Threats

Real-World Applications:

- Anomaly Detection: ML algorithms identify unusual network behavior that could indicate a cyberattack.

- Threat Prediction: AI systems predict where attacks are likely to occur based on previous patterns and global threat intelligence.

✅ Impact: Faster threat detection, stronger defense systems, and safer digital environments.

Step-by-Step — How a Machine Learning Model Actually Works (Explained Like a Story)

From Raw Data to Smart Decisions: The Journey of a Machine Learning Model

Imagine you’re a teacher, and you’re training a student who has never seen animals before to recognize whether a creature is a cat or a dog. You show them thousands of pictures, explain the differences, test them, correct their mistakes — and finally, they start recognizing cats and dogs on their own. That’s exactly what happens inside a Machine Learning project — but instead of a human student, you are training a computer model.

Step 1: Problem Definition — What Are We Trying to Solve?

First, you must clearly define the goal.

Example: “I want the machine to predict whether a given picture shows a cat or a dog.”

Without a clear problem, the rest of the journey falls apart. Every ML project starts with understanding the problem deeply.

✅ Tip: Better problem definition = Better solutions later.

Step 2: Data Collection — Feeding the Brain

Next, you gather data — lots of it. In our example, you collect thousands of images of cats and dogs.

This data becomes the food that nourishes the Machine Learning model. Remember:

Garbage in = Garbage out. If your data is bad, the model will be bad too.

Step 3: Data Preprocessing — Cleaning and Preparing

Now you prepare your data so that the machine can digest it properly.

This involves:

- Cleaning: Removing duplicates, fixing errors, standardizing formats.

- Labeling: Making sure each image clearly says “cat” or “dog.”

- Splitting: Dividing data into:

  - Training Set: For learning (typically 70–80% of data)

  - Testing Set: For evaluation later (remaining 20–30%)

✅ Pro Tip: Good preprocessing is half the battle won in Machine Learning.
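In plain Python, the splitting step might look like the sketch below. It assumes an 80/20 split and a fixed random seed so the result is reproducible. The "images" are placeholder IDs. Libraries such as scikit-learn provide a ready-made `train_test_split` function for the same job.

```python
# Shuffle labeled examples, then split them into training and testing sets.
import random

def split_data(examples, test_fraction=0.2, seed=42):
    shuffled = list(examples)              # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # shuffle to avoid ordering bias
    test_size = int(len(shuffled) * test_fraction)
    test_set = shuffled[:test_size]
    training_set = shuffled[test_size:]
    return training_set, test_set

# 100 placeholder examples: (image_id, label). Real data would be image pixels.
examples = [(f"img_{i}", "cat" if i % 2 == 0 else "dog") for i in range(100)]
training_set, test_set = split_data(examples)
print(len(training_set), len(test_set))  # → 80 20
```

Shuffling before splitting matters. If the data were sorted (all cats first, say), an unshuffled split could leave the test set with only one class.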

Step 4: Model Selection — Choosing the Student’s Strategy

Next, you select a model — the type of algorithm that will do the learning. For image recognition, you might choose:

- Convolutional Neural Networks (CNNs)

- Decision Trees

- Support Vector Machines (SVM)

Different tasks require different models — like choosing the right tool for a specific job.

Step 5: Training the Model — Learning from Examples

Now comes the exciting part: training! You feed the training data into the model. It starts making predictions and adjusting its internal settings (weights, biases) based on errors. This phase involves:

- Forward Pass: Make a prediction.

- Calculate Error: How far off was the prediction?

- Backward Pass (Backpropagation): Work out how much each weight contributed to the error, then adjust the weights to reduce future errors.

Imagine a student solving thousands of practice questions and improving after each mistake — that’s how training works.
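Those three steps can be sketched for the smallest possible "model": a single weight and bias fitted with gradient descent. This is an illustration with invented data (points lying on the line y = 2x + 1), not a neural network. In a real network, backpropagation computes the same kind of gradients for millions of weights at once.

```python
# Training-loop sketch: a one-weight model y = weight * x + bias, fitted by gradient descent.
# The data points are made up and lie exactly on y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0
learning_rate = 0.05

for _ in range(2000):
    grad_w, grad_b = 0.0, 0.0
    for x, y_true in data:
        y_pred = weight * x + bias  # forward pass: make a prediction
        error = y_pred - y_true     # calculate error: how far off was it?
        # Backward pass: gradient of the mean squared error for each parameter.
        grad_w += 2 * error * x / len(data)
        grad_b += 2 * error / len(data)
    # Adjust the parameters a small step against the gradient to reduce the error.
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

print(round(weight, 2), round(bias, 2))  # → 2.0 1.0
```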

Step 6: Model Evaluation — Testing What It Learned

After training, you need to see how well the model performs. You use the testing set (unseen data) to evaluate:

- Accuracy: What percentage of predictions were correct?

- Precision and Recall: Of the pictures the model labeled “cat,” how many really were cats (precision)? And of all the real cats, how many did it find (recall)?

- Confusion Matrix: A table counting correct and incorrect predictions for each class (for example, how many cats were mistaken for dogs).

If the model does well, great!

If not, you may need to retrain, tune parameters, or even collect better data.

✅ Important: Always test the model on new, unseen data, not the data it trained on!
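The metrics above can be computed from a model's predictions as in the sketch below. It treats "cat" as the positive class, which is an assumption; either class could be chosen. The ten labels are invented test results.

```python
# Evaluate predictions on a test set: accuracy, precision, recall, confusion matrix.
from collections import Counter

actual    = ["cat", "cat", "cat", "cat", "cat", "dog", "dog", "dog", "dog", "dog"]
predicted = ["cat", "cat", "cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]

# Confusion matrix: count each (actual, predicted) combination.
confusion = Counter(zip(actual, predicted))
tp = confusion[("cat", "cat")]  # cats correctly called cats
fn = confusion[("cat", "dog")]  # cats the model missed
fp = confusion[("dog", "cat")]  # dogs wrongly called cats
tn = confusion[("dog", "dog")]  # dogs correctly called dogs

accuracy = (tp + tn) / len(actual)
precision = tp / (tp + fp)  # of everything labeled "cat", how much was right?
recall = tp / (tp + fn)     # of all real cats, how many were found?

print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
# → accuracy=0.70 precision=0.67 recall=0.80
print("rows = actual cat/dog, columns = predicted cat/dog:", [[tp, fn], [fp, tn]])
```

Here accuracy alone hides the fact that the model calls too many dogs "cat"; the lower precision exposes it.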

Step 7: Model Deployment — Going Live!

Once satisfied, it’s time to put the model into real-world action. You deploy the model:

- On a website

- Inside a smartphone app

- In an internal business tool

Now whenever someone uploads a new picture, the model can instantly predict:

“This is a cat.”

or

“This is a dog.”

✅ Real Impact: Models only become valuable once they are deployed and used!

Step 8: Continuous Learning and Improvement

Machine Learning isn’t “train once and forget.”

As new data comes in, the world the model learned from can change (this is called drift), and the model’s predictions can become outdated.

So you monitor performance over time and periodically retrain the model with fresh data.

This continuous improvement is what keeps AI systems relevant, accurate, and powerful in the real world.

Quick Recap: Machine Learning Lifecycle

| Step | What Happens |

| 1. Problem Definition | Set a clear goal |

| 2. Data Collection | Gather lots of relevant data |

| 3. Data Preprocessing | Clean and prepare the data |

| 4. Model Selection | Choose the best algorithm |

| 5. Model Training | Learn from examples |

| 6. Model Evaluation | Test accuracy and performance |

| 7. Model Deployment | Make it live and usable |

| 8. Continuous Learning | Improve over time |

✅ Visual Tip: The Machine Learning workflow is a cycle: Data ➔ Train ➔ Evaluate ➔ Deploy ➔ Retrain.

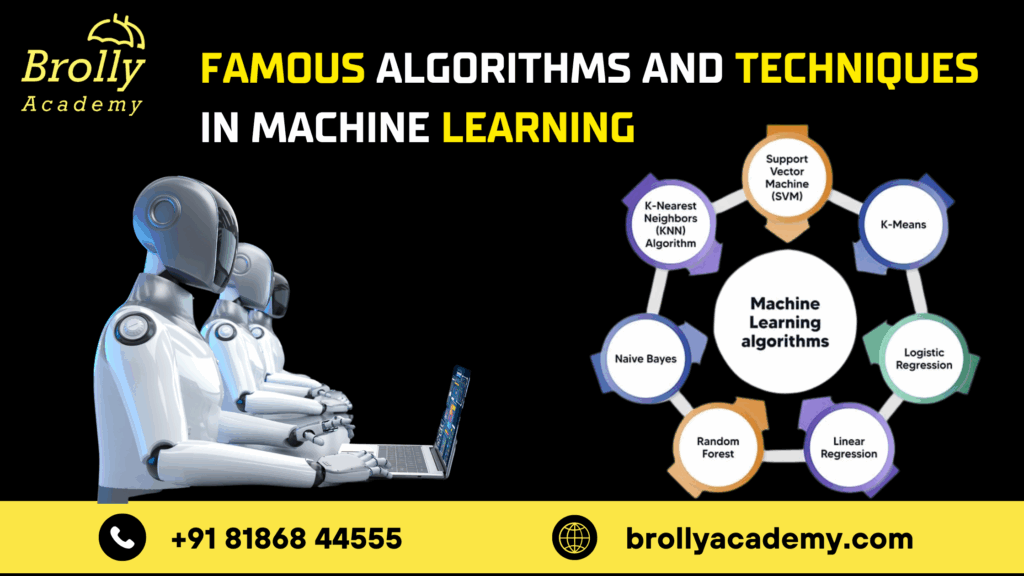

Famous Algorithms and Techniques in Machine Learning (Explained Simply)

Not All Machine Learning is the Same: Algorithms Shape Intelligence

Just like there are different ways for humans to solve a problem (writing notes, drawing diagrams, using memory tricks), machines also have many different learning strategies, called algorithms.

Choosing the right algorithm is like choosing the right weapon for a battle — it decides how effectively the machine will learn and make decisions.

Let’s explore some of the most famous and important algorithms in a simple, intuitive way.

1. Decision Trees: The Flowchart Thinker

Imagine you’re trying to decide what to eat tonight.

You ask yourself:

- Is it cold outside?

  - Yes → Maybe soup.

  - No → Maybe salad.

- Are you hungry?

  - Yes → A big meal.

  - No → Just a snack.

This step-by-step questioning is exactly how Decision Trees work.

How It Works:

- Every “node” asks a yes/no question.

- The tree keeps splitting until it reaches a final decision.

- Fast, simple, and easy to visualize.

✅ Best for: Quick classifications (e.g., spam vs not spam, loan approval yes/no)
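A decision tree is built by repeatedly picking the question that best separates the data. The sketch below learns just one such question, a "decision stump" (a one-level tree). It tries every threshold on every feature and keeps the one with the fewest mistakes. Real implementations usually score splits with measures like Gini impurity or entropy, and they keep splitting recursively. The loan data here is invented.

```python
# Learn a one-question decision tree (a "decision stump") from labeled data.
from itertools import product

def learn_stump(rows, labels):
    """Try every feature, threshold, and label assignment; keep the fewest errors."""
    classes = sorted(set(labels))
    best = None  # (errors, feature, threshold, label_if_yes, label_if_no)
    for feature, yes_label, no_label in product(range(len(rows[0])), classes, classes):
        for threshold in sorted({row[feature] for row in rows}):
            errors = sum((yes_label if row[feature] >= threshold else no_label) != label
                         for row, label in zip(rows, labels))
            if best is None or errors < best[0]:
                best = (errors, feature, threshold, yes_label, no_label)
    return best

def predict(stump, row):
    _, feature, threshold, yes_label, no_label = stump
    return yes_label if row[feature] >= threshold else no_label

# Hypothetical loan applications: (income in $1000s, number of existing debts).
feature_names = ["income", "debts"]
applicants = [(80, 1), (95, 0), (60, 2), (30, 3), (25, 1), (40, 4)]
decisions = ["approve", "approve", "approve", "reject", "reject", "reject"]

stump = learn_stump(applicants, decisions)
_, feature, threshold, yes_label, no_label = stump
print(f"Is {feature_names[feature]} >= {threshold}? Yes → {yes_label}, No → {no_label}")
print(predict(stump, (70, 2)))  # → approve
```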

2. Support Vector Machines (SVM): Drawing the Best Boundary

Imagine separating apples and oranges placed randomly on a table. You want to draw a straight line (or curve) that best divides apples on one side and oranges on the other. SVMs find the best boundary (called a hyperplane) that separates two categories with the maximum margin, meaning the widest possible gap between the boundary and the nearest points of each category.

How It Works:

- Finds the optimal dividing line or surface between classes.

- Works well even when the categories overlap slightly, by tolerating a few misplaced points (a “soft margin”).

✅ Best for: Image recognition, handwriting analysis, bioinformatics
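One common way to train a linear SVM is to minimize the "hinge loss", which penalizes points that fall inside the margin, plus a penalty that keeps the margin wide. The sketch below does this with plain subgradient descent, chosen for brevity. Library implementations (for example scikit-learn's `SVC`) use more refined solvers. The 2-D apple and orange points and all parameter values are invented.

```python
# Linear SVM sketch: minimize  lam/2 * |w|^2 + average hinge loss  by subgradient descent.
# Labels: -1 = apple, +1 = orange. Points are made-up (size, color score) pairs.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (4.0, 4.0), (5.0, 4.5), (4.5, 5.5)]
labels = [-1, -1, -1, 1, 1, 1]

def train_linear_svm(points, labels, lam=0.01, learning_rate=0.01, epochs=10000):
    w, b = [0.0, 0.0], 0.0
    n = len(points)
    for _ in range(epochs):
        grad_w = [lam * w[0], lam * w[1]]  # from the margin-widening penalty
        grad_b = 0.0
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            if margin < 1:  # point is inside the margin or misclassified
                grad_w[0] -= y * x1 / n
                grad_w[1] -= y * x2 / n
                grad_b -= y / n
        w = [w[0] - learning_rate * grad_w[0], w[1] - learning_rate * grad_w[1]]
        b -= learning_rate * grad_b
    return w, b

def classify(w, b, point):
    return 1 if w[0] * point[0] + w[1] * point[1] + b >= 0 else -1

w, b = train_linear_svm(points, labels)
print("orange" if classify(w, b, (4.5, 5.0)) == 1 else "apple")
```

Only points with a margin below 1 contribute to the update, so the boundary ends up shaped by the points closest to it. Those points are the "support vectors" that give SVMs their name.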

3. k-Nearest Neighbors (k-NN): Birds of a Feather Flock Together

Suppose you move into a new neighborhood.

You don’t know anyone, but you notice that three nearby houses all have gardens.

You conclude:

“Maybe people in this area love gardening.”

This is the intuition behind k-Nearest Neighbors (k-NN).

How It Works:

- To predict something about a new item, it looks at the k closest items it already knows about.

- Majority voting decides the new item’s classification.

✅ Best for: Recommender systems, pattern recognition
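The voting idea fits in a few lines. The sketch uses Euclidean distance and k = 3, which are common but adjustable choices. The houses and their coordinates are hypothetical.

```python
# k-Nearest Neighbors sketch: label a new point by majority vote of its k closest neighbors.
from collections import Counter
from math import dist

def knn_predict(known_points, new_point, k=3):
    # Sort known (features, label) pairs by distance to the new point; keep the k closest.
    nearest = sorted(known_points, key=lambda item: dist(item[0], new_point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Made-up houses: (x, y) location on a street map, plus whether they have a garden.
houses = [
    ((1, 1), "garden"), ((1, 2), "garden"), ((2, 1), "garden"),
    ((8, 8), "no garden"), ((8, 9), "no garden"), ((9, 8), "no garden"),
]

print(knn_predict(houses, (2, 2)))  # → garden
```

k-NN does no real "training": it stores the examples and does all its work at prediction time. That is why it can be slow on very large datasets.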

4. Neural Networks: Machines Inspired by the Brain

Neural Networks are the backbone of Deep Learning — and they’re inspired by how the human brain works. Each “neuron” is like a tiny decision-making unit. Thousands (or millions) of neurons work together, connected in layers, to understand very complex patterns.

How It Works:

- Input data flows through multiple layers.

- Each layer transforms the data and passes it forward.

- The final layer outputs a prediction or classification.

✅ Best for: Voice recognition (Siri, Alexa), image recognition (Face ID), language translation

✅ Fun Fact: Deep neural networks helped AlphaGo beat Lee Sedol, one of the world’s top Go players, at one of the most complex board games ever!
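To make "layers transforming data" concrete, here is a tiny network with one hidden layer. The weights are hand-picked for illustration, not learned, so that the network computes XOR. XOR is a pattern that a single neuron cannot represent. Real networks use smooth activation functions (such as ReLU or sigmoid) so that backpropagation can learn weights like these automatically. The step function here is a simplification.

```python
# Forward pass through a tiny neural network: 2 inputs -> 2 hidden neurons -> 1 output.
def step(z):
    """Activation: the neuron 'fires' (1) if its weighted input is positive."""
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)

def forward(x1, x2):
    # Hidden layer: each neuron detects a simple pattern in the inputs.
    h_or = neuron((x1, x2), (1, 1), -0.5)   # fires if at least one input is 1
    h_and = neuron((x1, x2), (1, 1), -1.5)  # fires only if both inputs are 1
    # Output layer combines them: "OR but not AND" = XOR.
    return neuron((h_or, h_and), (1, -2), -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", forward(a, b))  # prints the XOR table: 0, 1, 1, 0
```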

5. Random Forest: Teamwork Makes the Dream Work

What’s better than one Decision Tree?

Hundreds of them!

Random Forest builds lots of different Decision Trees and combines their results to make a final decision — like asking 100 experts and taking the majority vote.

How It Works:

- Creates a “forest” of decision trees, each trained on a random subset of the data (and typically a random subset of the features).

- Aggregates their outputs for more accurate, robust predictions.

✅ Best for: Complex problems where single decision trees overfit (medical diagnosis, risk analysis)
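The "many trees, one vote" idea can be sketched with very small trees. Here each tree is a one-question stump trained on a bootstrap sample (rows drawn with replacement) using one randomly chosen feature. The forest then takes a majority vote. This is a simplified illustration under those assumptions. Real random forests grow deeper trees and pick a random subset of features at every split. The patient data is invented.

```python
# Simplified random forest: many one-question trees on random data samples, majority vote.
import random
from collections import Counter

def train_stump(rows, labels, feature):
    """Pick the threshold on one feature that makes the fewest mistakes."""
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        for high, low in ((1, 0), (0, 1)):
            errors = sum((high if row[feature] >= threshold else low) != y
                         for row, y in zip(rows, labels))
            if best is None or errors < best[0]:
                best = (errors, feature, threshold, high, low)
    return best[1:]  # (feature, threshold, label_if_high, label_if_low)

def stump_predict(stump, row):
    feature, threshold, high, low = stump
    return high if row[feature] >= threshold else low

def train_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.randrange(len(rows)) for _ in rows]  # bootstrap: draw with replacement
        feature = rng.randrange(len(rows[0]))              # random feature for this tree
        forest.append(train_stump([rows[i] for i in sample],
                                  [labels[i] for i in sample], feature))
    return forest

def forest_predict(forest, row):
    votes = Counter(stump_predict(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]

# Hypothetical patients: (age, blood pressure, cholesterol); label 1 = at risk.
patients = [(35, 115, 180), (42, 120, 190), (50, 125, 200), (38, 118, 185),
            (65, 150, 260), (70, 160, 270), (58, 145, 250), (62, 155, 240)]
at_risk = [0, 0, 0, 0, 1, 1, 1, 1]

forest = train_forest(patients, at_risk)
print(forest_predict(forest, (72, 165, 280)))  # → 1
```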

6. Clustering Algorithms (e.g., k-Means): Discovering Hidden Groups

Suppose you enter a big party where you don’t know anyone. You notice people naturally form groups:

- One group talking about football.

- Another group chatting about movies.

- Another about tech gadgets.

Even without being told, you can cluster people into groups based on their behavior.

How It Works:

- Finds groups inside unlabeled data based on similarities.

- No supervision needed — it’s purely pattern discovery.

✅ Best for: Customer segmentation, social network analysis, anomaly detection
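k-Means alternates between two simple steps: assign each point to its nearest group center, then move each center to the average of its group. The sketch below assumes k = 2 and a fixed number of iterations. The party guests and their "topic" scores are made up.

```python
# k-Means sketch: find k groups in unlabeled 2-D points.
import random
from math import dist

def k_means(points, k, iterations=20, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k randomly chosen points
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center's cluster.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the average of its cluster.
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old center if a cluster ends up empty
                centers[i] = tuple(sum(c) / len(cluster) for c in zip(*cluster))
    return centers, clusters

# Unlabeled guests: (minutes talking sports, minutes talking movies), made-up numbers.
guests = [(5.0, 0.5), (4.5, 1.0), (5.5, 0.8), (0.5, 4.0), (1.0, 5.0), (0.8, 4.5)]
centers, clusters = k_means(guests, k=2)
for center, members in zip(centers, clusters):
    print(center, members)
```

Nobody told the algorithm which guests were "sports fans"; the two groups emerge from the numbers alone. In practice, k has to be chosen in advance, and different starting centers can give different results.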

7. Reinforcement Learning: Learning from Rewards

Reinforcement Learning is a bit different — it’s about learning through trial and error. Think about how you teach a child to ride a bicycle:

- When they balance well → you praise (reward).

- When they fall → they adjust and try again.

The system improves by maximizing rewards over time.

How It Works:

- The agent (learner) interacts with the environment.

- It takes actions, gets rewards and penalties.

- It learns policies (strategies) to maximize cumulative rewards.

✅ Best for: Self-driving cars, robot control, playing games (chess, Go)
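Learning a policy from rewards can be sketched with tabular Q-learning, one classic reinforcement-learning algorithm. The toy world is made up: a corridor of five cells where the agent starts at the left end and earns a reward of +1 only when it reaches the right end. The parameter values (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`) are illustrative choices, not recommendations.

```python
# Tabular Q-learning on a 5-cell corridor: start at cell 0, reward +1 at cell 4.
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, 0.0, False

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            best = max(q[(state, a)] for a in ACTIONS)
            greedy = [a for a in ACTIONS if q[(state, a)] == best]  # break ties randomly
            action = rng.choice(ACTIONS) if rng.random() < epsilon else rng.choice(greedy)
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate toward reward + discounted best future value.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)  # learned best action in each non-goal cell: [1, 1, 1, 1] means "go right"
```

The reward only arrives at the goal, yet the discount `gamma` lets its value flow backward, cell by cell, until even the starting cell "knows" that moving right pays off.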

Quick Recap Table

| Algorithm | Simple Idea | Best Used For |

| Decision Trees | Step-by-step decisions | Spam detection, loan approvals |

| SVM | Best separation boundary | Image classification |

| k-NN | Closest neighbors voting | Recommender systems |

| Neural Networks | Brain-like learning | Speech, image, and language processing |

| Random Forest | Many trees combined | Medical diagnosis, fraud detection |

| Clustering (k-Means) | Group discovery | Customer segmentation |

| Reinforcement Learning | Learning by reward | Robotics, gaming AI |

Challenges in Machine Learning + Ethical Concerns + The Future of AI

The Other Side of AI and Machine Learning: It’s Not Always Easy

AI and Machine Learning have achieved incredible breakthroughs, but behind the scenes, building these intelligent systems is often messy, complicated, and full of challenges.

And as these technologies become more powerful, they raise important ethical questions about how they should — and should not — be used.

Let’s dive into both the technical challenges and the ethical dilemmas facing AI today.

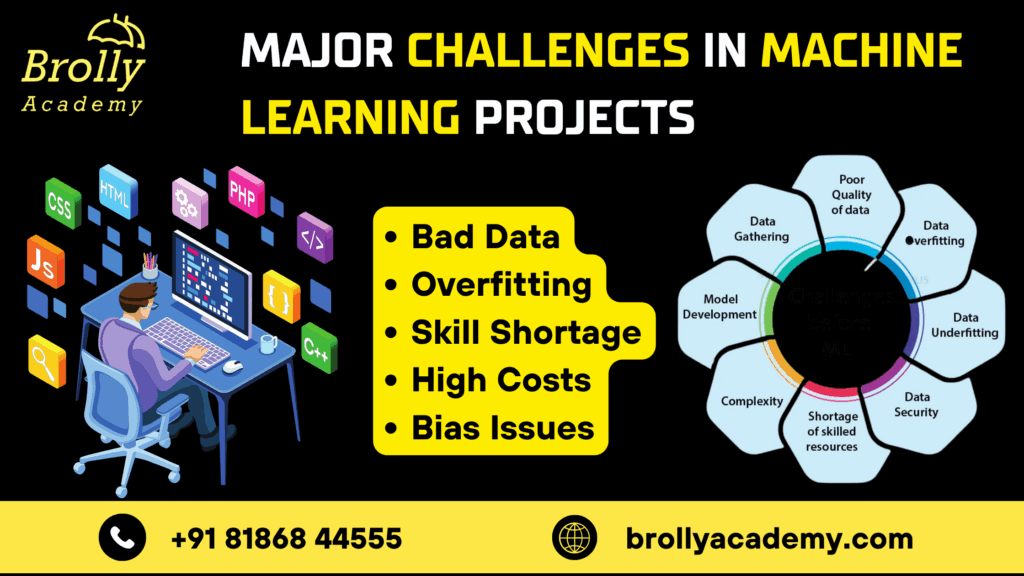

Major Challenges in Machine Learning Projects

1. Data Quality: Bad Data = Bad Models

Machine Learning models are only as good as the data they are trained on.

If the data is:

- Incomplete

- Biased

- Inaccurate

- Outdated

then the model will make bad predictions — even if the algorithms themselves are perfect.

✅ Example: An AI hiring tool trained mostly on resumes from one demographic could unfairly favor candidates from that group, perpetuating discrimination.

✅ Lesson: Quality and diversity of data are critical for building fair, effective AI systems.

2. Overfitting and Underfitting: The Goldilocks Problem

Imagine you’re studying for an exam.

- If you memorize every single question from a past test, but nothing else, you might fail if the actual questions are different. (Overfitting)

- If you barely study and guess randomly, you also fail. (Underfitting)

Similarly:

- Overfitting: Model learns the training data too well but fails on new data.

- Underfitting: Model fails to capture patterns even in training data.

✅ Lesson: Great ML models find the right balance, generalizing well to new, unseen data.

3. Explainability: The Black Box Problem

Some AI models (especially deep neural networks) are extremely complex.

Even their creators often cannot explain exactly how they arrived at a certain decision.

✅ Example: If a medical AI recommends a treatment, doctors must be able to understand and trust the reasoning, not just accept a mystery answer.

✅ Lesson: In high-stakes areas like healthcare, finance, and law, explainable AI is essential.

4. Deployment Difficulties: From Lab to Reality

Building an AI model in a lab is one thing.

Deploying it into the messy, unpredictable real world is another.

Challenges include:

- Integrating with existing software systems

- Handling millions of users at once (scalability)

- Monitoring models for drift (performance degradation over time)

✅ Lesson: Real-world AI needs strong engineering, constant monitoring, and careful updating.

Ethical Concerns in AI and ML

1. Bias and Fairness

AI can unintentionally amplify human biases if it’s trained on biased data.

✅ Real-World Example: Several studies have found that facial recognition systems misidentify people of color at much higher rates.

✅ Lesson: We must ensure fairness and actively audit AI systems for hidden biases.

2. Privacy and Surveillance

AI can be used to monitor people in ways that threaten privacy.

- Smart cameras tracking faces in public

- Apps collecting sensitive health data

- Governments using AI for mass surveillance

✅ Lesson: Strong privacy protections and ethical limits on surveillance are vital.

3. Automation and Job Displacement

AI can automate many repetitive tasks, leading to:

- Increased productivity

- But also potential job losses, especially in sectors like manufacturing, customer support, and transportation.

✅ Lesson: Society needs strategies for re-skilling workers and managing the economic impact.

4. Misuse of AI: Deepfakes, Misinformation, and Weaponization

AI can create hyper-realistic fake videos (deepfakes), generate misleading news articles, and even power autonomous weapons.

✅ Lesson: Ethical frameworks, regulations, and public awareness are critical to prevent misuse.

The Future of AI: What’s Coming Next?

1. Explainable and Trustworthy AI

2. AI for Good

AI is being applied to solve critical global problems:

- Predicting disease outbreaks

- Optimizing renewable energy

- Protecting endangered species

- Supporting education in underserved communities

✅ Hope: AI can become a force multiplier for solving humanity’s toughest challenges.

3. Human-Centric AI

The future is not about replacing humans, but enhancing human abilities.

Think:

- AI co-writing novels

- AI collaborating with doctors

- AI assisting teachers, not replacing them

✅ Vision: The best future AI systems will amplify human creativity, empathy, and wisdom, not erase them.

Quick Recap Table

| Challenge / Concern | Why It Matters | Future Direction |
|---|---|---|
| Data Quality | Bad data = bad predictions | Better data curation |
| Overfitting/Underfitting | Poor generalization | Smarter model design |
| Explainability | Trust and accountability | Transparent AI |
| Privacy | Risk of mass surveillance | Stronger data protection laws |
| Bias | Fairness and equality | Bias detection audits |
| Job Displacement | Economic disruption | Workforce re-skilling |
| Misuse | Deepfakes, cyber warfare | Regulation and ethical AI design |

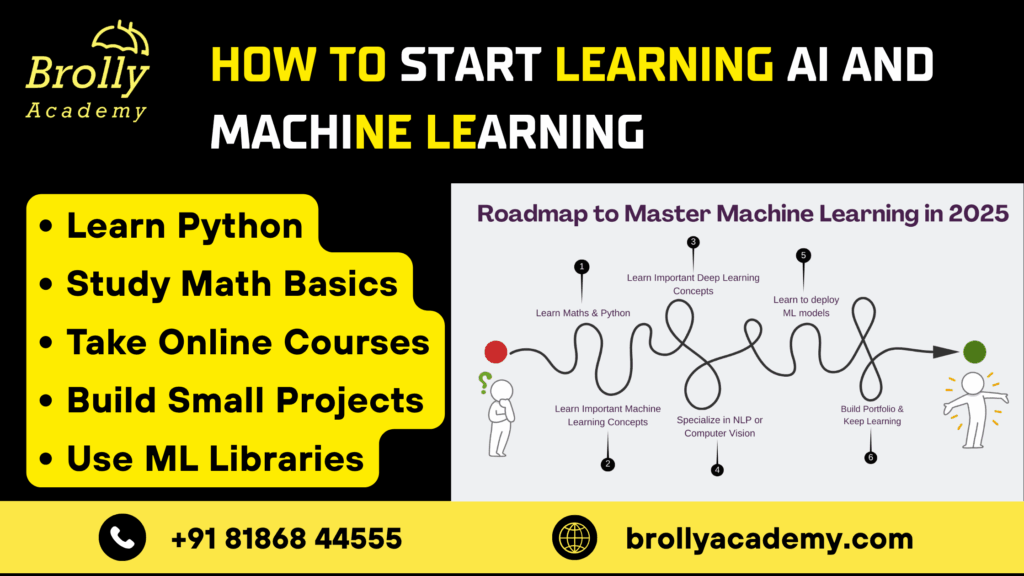

How to Start Learning AI and Machine Learning (Complete Beginner’s Roadmap)

Starting Your Journey into AI and ML: It’s Easier Than You Think

If you’re reading this, you’re already taking the first step toward understanding Artificial Intelligence and Machine Learning.

But maybe you’re wondering:

- Where do I even begin?

- Do I need a PhD?

- Should I learn coding first?

- How long does it take?

The good news is:

You don’t need to be a genius, a mathematician, or a programmer to get started. You just need curiosity, consistency, and the right roadmap. Let’s break it down step-by-step — simply and realistically.

Step 1: Build a Strong Foundation in Math and Logic

You don’t need to master advanced calculus right away, but you should be comfortable with basic math and logical thinking. Focus on:

- Linear Algebra: (Matrices, vectors — critical for ML algorithms)

- Probability and Statistics: (Understanding data distributions, probability of events)

- Basic Calculus: (For optimization, but even simple concepts are enough to start)

✅ Tip: Platforms like Brolly Academy, Coursera, and YouTube offer beginner-friendly math courses, many of them free.
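To see how these three topics work together, here is a small NumPy sketch (an illustration, not part of any particular course) that fits a straight line y = w * x + b by gradient descent. The data sits in a matrix and the parameters in a vector (linear algebra), the error being minimized is the mean squared error (statistics), and each update follows the derivative of that error (calculus). The toy data, learning rate, and number of steps are arbitrary assumptions.

```python
import numpy as np

# Toy data that follows y = 2x + 1 exactly.
x = np.linspace(0.0, 1.0, 50)
y = 2.0 * x + 1.0

# Design matrix: one column for x, one column of ones for the intercept b.
X = np.column_stack([x, np.ones_like(x)])
theta = np.zeros(2)  # parameters [w, b], starting at zero
learning_rate = 0.5

for step in range(2000):
    predictions = X @ theta                  # matrix-vector product
    errors = predictions - y
    gradient = 2.0 / len(y) * X.T @ errors   # derivative of the mean squared error
    theta -= learning_rate * gradient        # take a small step "downhill"

w, b = theta
print(f"learned w = {w:.3f}, b = {b:.3f}")  # learned w = 2.000, b = 1.000
```

The same loop, with more columns in X, is the core of linear regression; many larger models are trained with variations of this idea.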

Step 2: Learn a Programming Language (Python is King)

Python is the most widely used language in AI and ML because it’s:

- Simple to read and write

- Powerful, with thousands of libraries

- Supported by huge communities worldwide

✅ Key Libraries to Explore:

- NumPy (for numerical operations)

- Pandas (for data manipulation)

- Matplotlib (for data visualization)

- Scikit-learn (for basic ML models)

- TensorFlow and PyTorch (for deep learning)

Tip: Start with Python basics before diving into heavy machine learning frameworks.
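As a first taste of why NumPy tops the list above, the sketch below standardizes a small column of made-up house sizes (subtract the mean, divide by the standard deviation), a common preprocessing step before training many ML models. It shows the same computation twice: once as a single vectorized NumPy expression and once as a plain Python loop. The house sizes are hypothetical.

```python
import numpy as np

# Hypothetical house sizes in square feet.
sizes = np.array([850.0, 1200.0, 1500.0, 2000.0, 2450.0])

# Vectorized standardization with NumPy: no explicit loop over the values.
standardized = (sizes - sizes.mean()) / sizes.std()

# The same computation with plain Python, for comparison.
mean = sum(sizes) / len(sizes)
std = (sum((s - mean) ** 2 for s in sizes) / len(sizes)) ** 0.5
looped = [(s - mean) / std for s in sizes]

print(standardized.round(2))
print(f"mean = {standardized.mean():.2f}, std = {standardized.std():.2f}")
```

On five values the difference is cosmetic, but on millions of rows the vectorized version is both shorter and much faster, which is why libraries like Pandas and Scikit-learn are built on top of NumPy.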

Step 3: Take an Introductory AI and ML Course

A well-structured course saves you months of confusion by teaching concepts step-by-step.

Top Global Courses:

- Andrew Ng’s Machine Learning course (Coursera)

- Google AI for Beginners

- Harvard CS50’s Introduction to AI

✅ Top India-Based Institute Option:

- Brolly Academy (✅ highly recommended)

  - Offers beginner-friendly AI & ML training programs.

  - Practical, project-based learning.

  - Focused on real-world applications, not just theory.

  - Perfect for students, working professionals, and entrepreneurs wanting to build real AI skills fast.


Tip: Choose a course that includes hands-on projects, not just lectures.

Step 4: Work on Mini-Projects

Theory is important.

But building is where real understanding happens.

Start small:

- Build a spam email detector.

- Predict house prices using regression models.

- Create a basic chatbot.

Each mini-project will teach you more than weeks of theoretical study.

Tip: Upload your projects to GitHub. Your profile becomes an online resume for future employers!
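As a taste of the first mini-project, here is a from-scratch sketch of a spam detector using multinomial Naive Bayes with add-one (Laplace) smoothing, one classic approach to spam filtering. It is plain Python with no libraries, and the six training messages are invented for illustration; a real project would use thousands of labeled emails and would likely rely on a library such as Scikit-learn.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesSpamFilter:
    def fit(self, messages, labels):
        self.label_counts = Counter(labels)  # how often each label occurs
        self.word_counts = {label: Counter() for label in self.label_counts}
        for text, label in zip(messages, labels):
            self.word_counts[label].update(tokenize(text))  # word frequencies per label
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            n_words = sum(counts.values())
            # Start from the log prior, then add the log likelihood of each known word.
            score = math.log(self.label_counts[label] / total)
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / (n_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical toy training data.
messages = [
    "Win free money now", "Free prize, claim now", "Win a free trip",
    "Meeting at noon tomorrow", "Project update attached", "Lunch at noon?",
]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

spam_filter = NaiveBayesSpamFilter().fit(messages, labels)
print(spam_filter.predict("Claim your free money"))     # spam
print(spam_filter.predict("See you at noon tomorrow"))  # ham
```

Working in log probabilities avoids multiplying many tiny numbers together, and the "+ 1" smoothing keeps a single unseen word from zeroing out a whole class.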

Step 5: Learn the Tools Used by Professionals

As you grow, you’ll want to explore:

- Jupyter Notebooks (for experimentation)

- Google Colab (free GPUs for training models)

- AWS AI/ML Services (cloud-based AI platforms)

- Docker and APIs (for deploying models)

You don’t have to master all of them immediately, but knowing they exist will prepare you for more serious projects.

Step 6: Follow the Latest Trends and Research

AI is one of the fastest-moving fields in technology.

New models, new techniques, new breakthroughs — almost every month.

Stay updated by:

- Following AI news blogs (e.g., Towards Data Science, OpenAI blogs)

- Watching conference talks (e.g., NeurIPS, CVPR, ICML)

- Joining AI/ML communities (e.g., Reddit’s r/MachineLearning, LinkedIn groups)

Step 7: Build a Portfolio and Share Your Work

Before you realize it, you’ll have built small projects, learned key concepts, and gained real confidence.

Build a simple portfolio showcasing:

- Mini-projects (GitHub links)

- Your learning journey (LinkedIn posts, blogs)

- Certifications (Coursera, Brolly Academy, etc.)

Why a Portfolio Matters: Many companies today care more about “what you can build” than “where you studied.”

Quick Beginner's Roadmap Summary

| Stage | What to Focus On |
|---|---|
| 1. Foundation | Math and Logic Basics |
| 2. Programming | Python Essentials |
| 3. Structured Learning | Join a course (Coursera, Brolly Academy) |
| 4. Projects | Mini ML Projects |
| 5. Tools | Jupyter, TensorFlow, Google Colab |
| 6. Trends | Follow AI news and conferences |
| 7. Portfolio | Showcase your work |

FAQ

1. What industries are most impacted by AI and Machine Learning?

2. Can someone without coding skills learn AI and ML?

Yes, absolutely.

While coding is eventually important, many beginner AI/ML platforms offer low-code or no-code options. Tools like Google Teachable Machine allow you to build basic models without deep programming knowledge.

✅ Tip: Start with visual ML tools first, then gradually learn coding (especially Python) for deeper expertise.

3. How much math do you need to know for AI?

You need basic comfort with:

- Algebra (equations, functions)

- Probability and Statistics (chance, averages, distributions)

- Basic Calculus (for model optimization, optional at beginner level)

You don’t need to be a mathematician — most practical ML starts simple and builds up gradually.

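4. What is the difference between Deep Learning and Machine Learning?

Deep Learning is a subset of Machine Learning that uses neural networks with many layers (hence “deep”) to learn patterns directly from raw data such as images, audio, and text. Traditional Machine Learning often relies on features chosen by people and can work well with smaller datasets, while Deep Learning typically needs more data and computing power.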

5. What are some beginner projects I can build with Machine Learning?

✅ Great beginner projects include:

- Predicting house prices (regression)

- Email spam filter (classification)

- Movie recommendation system (collaborative filtering)

- Handwritten digit recognizer (using the MNIST dataset)

✅ Tip: Always start with small, manageable projects, and celebrate every working model!
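For the movie recommendation project, one simple form of collaborative filtering is item-based. Two movies count as similar if the same users rate them similarly (measured here with cosine similarity), and a user's predicted rating for an unseen movie is a similarity-weighted average of the ratings they already gave. The NumPy sketch below is a minimal illustration; the ratings matrix and movie titles are hypothetical, and 0 is assumed to mean "not rated".

```python
import numpy as np

titles = ["Alien", "Aliens", "Notting Hill", "Love Actually"]
# Rows are users, columns are movies; ratings 1-5, 0 means "not rated" (hypothetical data).
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
    [5, 0, 0, 1],  # the user we recommend for: loved Alien, disliked Love Actually
])

def item_similarity(ratings):
    """Cosine similarity between every pair of movie columns."""
    norms = np.linalg.norm(ratings, axis=0)
    return (ratings.T @ ratings) / np.outer(norms, norms)

def recommend(ratings, user, titles):
    """Predict scores for the user's unrated movies and return the best one."""
    sim = item_similarity(ratings)
    user_ratings = ratings[user]
    rated = user_ratings > 0
    scores = {}
    for item in np.flatnonzero(~rated):
        weights = sim[item, rated]  # similarity of this movie to each movie the user rated
        scores[titles[item]] = float(weights @ user_ratings[rated] / weights.sum())
    return max(scores, key=scores.get), scores

best, scores = recommend(ratings, user=4, titles=titles)
print(best)  # Aliens
print(scores)
```

A real recommender would also need to handle movies nobody has rated yet and users with no ratings, cases where the divisions above would fail.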

6. What are ethical concerns around AI and ML?

Key concerns include:

- Bias in decision-making

- Privacy invasion and surveillance risks

- Job displacement through automation

- Misuse (e.g., deepfakes, autonomous weapons)

Ethical AI focuses on building fair, transparent, and responsible systems.

7. How will AI and Machine Learning shape the future?

AI is expected to:

- Automate repetitive tasks

- Assist humans in decision-making

- Revolutionize healthcare, education, finance, and climate modeling

- Create entirely new career fields like AI Ethics, AI Trainers, and Explainable AI Experts

The future will belong to humans who can work alongside intelligent machines.

8. Can AI and ML be used for small businesses?

Absolutely!

AI isn’t just for big tech companies. Small businesses use AI for:

- Automating customer support (chatbots)

- Personalized marketing campaigns

- Smart inventory management

- Predicting customer churn and loyalty

Affordable AI tools (like ChatGPT, Google AutoML, HubSpot AI) are making it easier than ever.

9. Where can I join a practical AI and Machine Learning course in India?

If you’re looking for practical, hands-on learning in India, Brolly Academy is a great choice. They offer AI and Machine Learning courses that focus not just on theory but also on real-world projects, case studies, and job-ready skills.

✅ Why Brolly Academy?

- Industry-focused curriculum

- Flexible batches (weekend/weekday)

- Placement assistance after course completion

- Beginner to advanced level pathways

10. How does Brolly Academy’s AI course help beginners?

At Brolly Academy, beginners are given step-by-step guidance:

- Start with Python basics and math fundamentals.

- Move into core Machine Learning algorithms.

- Build real mini-projects after every module.

- Personalized doubt-clearing sessions.

- Portfolio and resume preparation included.

Tip: They break complex AI topics down into simple lessons that even absolute beginners can understand.

11. Does Brolly Academy provide certification for AI and ML courses?

Yes.

On successful completion of the AI and ML training, Brolly Academy provides an industry-recognized certificate.

Benefits of Certification:

- Improves your resume credibility

- Helps you stand out during job interviews

- Validates your practical AI/ML skills officially

12. What real-world projects can I work on at Brolly Academy?

Brolly Academy offers multiple real-world capstone projects such as:

- Predictive analytics for e-commerce sales

- Spam detection engines

- Customer churn prediction models

- Facial recognition applications

- Stock market trend forecasting

Tip: These projects help you build a strong portfolio to showcase to future employers.

13. Is Brolly Academy good for working professionals who want to switch careers into AI?

Absolutely.

Brolly Academy designs its AI and ML programs keeping working professionals in mind:

- Evening and weekend batch options

- Fast-track learning available

- Real-world projects to show hands-on skills

- Soft skills and interview preparation included

Conclusion: Why You Should Care About AI and ML Today

✅ If you invest in learning AI/ML today:

- You future-proof your career.

- You open doors to amazing industries (tech, healthcare, space exploration, finance).

- You become part of building the future — not just watching it happen.

Whether you want to become an AI engineer, a data scientist, a tech entrepreneur, or just an informed global citizen, starting your journey now is the smartest decision you can make.

Glossary of Basic AI and ML Terms

| Term | Simple Definition |
|---|---|
| AI (Artificial Intelligence) | Making machines simulate human thinking |
| ML (Machine Learning) | Teaching machines to learn from data |
| Dataset | Collection of information used to train models |
| Algorithm | Set of rules the machine follows |
| Model | The trained system that makes predictions |
| Supervised Learning | Learning from labeled examples |
| Unsupervised Learning | Finding patterns without labels |
| Reinforcement Learning | Learning by rewards and penalties |
| Neural Network | Machine learning model inspired by the brain |
| Overfitting | Model memorizes training data but fails on new data |
| Bias | Systematic error caused by unfair data |
| Explainability | Making AI decisions understandable to humans |