The History and Evolution of Generative AI: From Early Concepts to Modern Creativity

The History and Evolution of Generative AI showcases how artificial intelligence has progressed from simple rule-based systems in the 1950s to today’s advanced creative models. Generative AI, a specialized branch of artificial intelligence, focuses on creating new content such as text, images, videos, audio, and even code by learning complex patterns from large datasets. Over the years, breakthroughs like neural networks, GANs (Generative Adversarial Networks), and transformers have shaped this evolution. From early experiments to modern tools like ChatGPT and DALL-E, the history and evolution of Generative AI reflect a journey that continues to transform creativity, automation, and innovation across industries.

What is Generative AI? A Brief Overview

Definition and Core Concepts

- Generative AI is a branch of artificial intelligence focused on creating new, original content such as text, images, audio, video, or code based on patterns it learns from extensive datasets.

- Unlike traditional AI models that mainly classify or analyze existing data, generative AI models synthesize fresh outputs that often appear indistinguishable from human-created content.

- This ability comes from deep learning: neural networks, loosely inspired by biological neurons, learn to encode complex relationships in data.

- Modern generative AI commonly leverages transformer architectures and foundation models, trained on diverse sources like books, images, and code repositories, empowering them to understand context and produce coherent and relevant responses to user prompts.

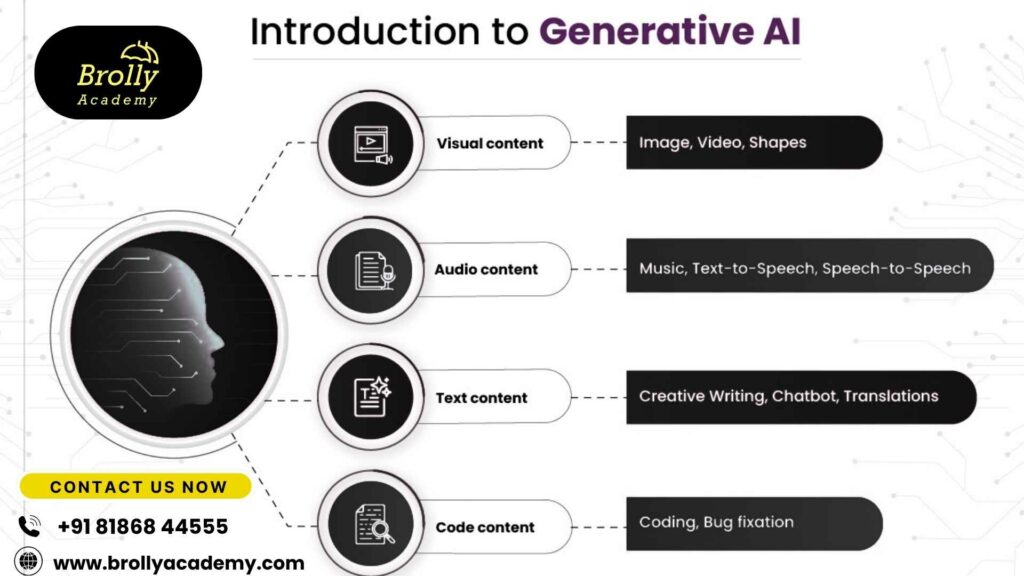

Types of Generative AI Models

Generative AI models come in various architectures, each suited to different data types and generation tasks:

- Large Language Models (LLMs): These models, like OpenAI’s GPT series, generate coherent and contextually relevant text by predicting the next token in a sequence. They excel at natural language understanding and generation tasks such as conversation, writing, and code generation.

- Generative Adversarial Networks (GANs): These comprise two neural networks, a generator and a discriminator, that compete to create realistic images, videos, or audio. GANs have driven dramatic advances in realistic visual content synthesis.

- Variational Autoencoders (VAEs): These probabilistic models learn to encode input data into a compressed latent space and then generate data samples by decoding points from that space. VAEs are valued for structured and diverse data generation.

- Diffusion Models: These models learn to reverse a gradual noising process, generating data by starting from random noise and refining it step by step. Diffusion models such as Stable Diffusion generate highly detailed images and have become popular for text-to-image synthesis.

- Multimodal Models: These models combine different types of data inputs, such as text and images, enabling generation across multiple media types or converting between them, driving advances in video, audio, and image generation.
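To make "predicting the next token" concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus, then generates text one token at a time by always picking the most likely continuation. It is an illustration under simplifying assumptions, not how GPT-style models work internally (they use transformers over subword tokens and usually sample rather than always taking the top choice), but the generation loop has the same shape. The function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_distribution(counts, prev):
    """Turn raw follow-counts into probabilities P(next | prev)."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}

def generate(counts, start, max_tokens=10):
    """Greedy decoding: repeatedly append the single most probable next token."""
    out = [start]
    for _ in range(max_tokens):
        if not counts[out[-1]]:
            break  # no observed continuation for this token
        dist = next_token_distribution(counts, out[-1])
        out.append(max(dist, key=dist.get))  # ties go to the first-seen token
    return out

corpus = "the cat sat on the mat . the cat ate .".split()  # hypothetical toy corpus
model = train_bigram(corpus)
print(next_token_distribution(model, "the"))  # P(cat | the) = 2/3, P(mat | the) = 1/3
print(" ".join(generate(model, "the", max_tokens=5)))  # the cat sat on the cat
```

The repetitive output ("the cat sat on the cat") shows why a one-word context is far too short; modern LLMs condition each prediction on thousands of previous tokens.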

Early Concepts of Generative AI (1950s–1970s)

Symbolic AI Approaches

- In the early stages of artificial intelligence during the 1950s and 1960s, symbolic AI dominated the field.

- Symbolic AI focused on manipulating symbols and using rule-based systems to emulate reasoning and knowledge representation.

- Researchers believed that human intelligence could be modeled by encoding explicit knowledge and logic rules in programs that mimicked logical deduction and problem solving.

- Programs like the Logic Theorist (1955) by Allen Newell and Herbert A. Simon exemplified this approach; it could prove mathematical theorems by symbolically searching through chains of logical steps.

- Similarly, expert systems such as Dendral and MYCIN were developed to capture expert knowledge in chemistry and medicine, respectively, and use it to generate hypotheses and solutions based on predefined rules.

- This symbolic paradigm was powerful in handling structured, formal reasoning tasks and inspired early generative systems that produced text or responses by applying grammar or substitution rules.

- However, it struggled with ambiguity, learning from data, or generating truly novel content beyond prescribed patterns.

ELIZA Chatbot by Joseph Weizenbaum (1964)

- One of the first notable examples of generative AI was ELIZA, created in 1964 by Joseph Weizenbaum at MIT. ELIZA was a chatbot designed to simulate a Rogerian psychotherapist by using pattern matching and simple substitution rules to generate natural-language responses to user inputs.

- Although ELIZA had no true understanding and simply responded based on scripted templates, it was a breakthrough in demonstrating that computers could engage in human-like dialogues, creating an illusion of understanding and empathy.

- ELIZA’s most famous script, DOCTOR, allowed it to “reflect” user statements back in question form, encouraging users to continue the conversation.

- ELIZA is widely regarded as one of the earliest attempts at generative natural language systems and laid the groundwork for future conversational agents and chatbots.
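ELIZA’s pattern-matching-and-substitution idea can be sketched in a few lines. The rules below are hypothetical examples written for illustration, not Weizenbaum’s original DOCTOR script: each rule pairs a regular expression with a response template, and the captured text has its pronouns "reflected" (my → your, I → you) before being slotted back in. Nothing here understands the input; it only rearranges it.

```python
import re

# Hypothetical pronoun swaps used to "reflect" the user's words back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# Hypothetical (pattern, template) rules; the first match wins.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Why do you say your {0}?"),
]
DEFAULT_REPLY = "Please go on."

def reflect(fragment):
    """Swap first- and second-person words, one word at a time."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())

def respond(statement):
    """Fill the first matching rule's template with the reflected captured text."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_REPLY

print(respond("I feel ignored by my family."))  # Why do you feel ignored by your family?
print(respond("My job is stressful"))           # Why do you say your job is stressful?
print(respond("Hello there"))                   # Please go on.
```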

Limitations of Early AI

Despite promising beginnings, early AI systems in the 1950s to 1970s had significant limitations:

- Lack of Learning Capability: Symbolic AI required manually programmed rules and could not learn or generalize from experience or data.

- Shallow Language Understanding: Programs like ELIZA relied on pattern matching without true language comprehension or reasoning.

- Computational Constraints: Hardware was limited in processing power and memory, restricting AI models’ complexity and scale.

- Difficulty with Ambiguity and Context: Early systems struggled to handle the nuances, ambiguity, and variability inherent in human language and real-world scenarios.

- Limited Creativity: Early generative systems could only produce outputs dictated by hand-coded rules rather than synthesizing genuinely novel content.

These drawbacks contributed to periods of reduced funding and interest in AI research, known as “AI winters,” but also motivated the shift toward data-driven machine learning approaches beginning in the 1980s.

The Rise of Neural Networks (1980s–1990s)

Frank Rosenblatt’s Perceptron (1958)

- Introduced by Frank Rosenblatt, the Perceptron was one of the first trainable artificial neural networks, designed to recognize visual patterns.

- It was a simple model inspired by biological neurons, performing binary classification by applying a threshold (step) activation to a weighted sum of its inputs.

- Despite its simplicity, the Perceptron demonstrated that machines could learn from examples rather than explicit programming.

- However, its limitations, especially its inability to solve problems that are not linearly separable (such as the XOR function), drew criticism, most famously in Minsky and Papert’s 1969 book Perceptrons, and slowed neural network research for years.
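The perceptron learning rule, and its failure on problems that are not linearly separable, fit in a short sketch. This assumes two binary inputs, a step activation, zero initial weights, and a learning rate of 1.0; the helper names are illustrative. Trained on AND, which is linearly separable, it reaches perfect accuracy; trained on XOR, it cannot, because no single line separates the two classes.

```python
def step(z):
    """Threshold activation: fire (1) only if the weighted sum is positive."""
    return 1 if z > 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule on 2-input data with labels 0/1.

    After each prediction, nudge the weights toward the correct answer:
    w += lr * (target - prediction) * x. Returns (weights, bias).
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            err = target - step(w[0] * x1 + w[1] * x2 + b)  # 0, +1 or -1
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b

def accuracy(samples, w, b):
    hits = sum(step(w[0] * x1 + w[1] * x2 + b) == y for (x1, x2), y in samples)
    return hits / len(samples)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(accuracy(AND, *train_perceptron(AND)))  # 1.0: AND is linearly separable
print(accuracy(XOR, *train_perceptron(XOR)))  # below 1.0: no single line separates XOR
```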

Development of Recurrent Neural Networks (RNNs) (1982)

- RNNs were introduced to handle sequential data by incorporating loops in the network, enabling memory of previous inputs.

- Unlike feedforward networks, RNNs could model temporal dependencies, making them suitable for language, speech, and time series tasks.

- The ability of RNNs to consider context over sequences greatly enhanced the potential of AI to generate coherent sequential outputs, such as sentences or music.

- Early RNNs faced issues with vanishing and exploding gradients, limiting their effectiveness on long sequences.
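A one-dimensional recurrent cell shows both ideas at once: the hidden state carries information from step to step, and backpropagating through many steps multiplies many small factors together, which is why gradients vanish. This is a toy sketch with hand-picked weights (w_x = 0.5 and w_h = 0.8 are assumptions), not a practical model; real RNNs use vector hidden states and weight matrices.

```python
import math

def rnn_states(xs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Run a scalar (Elman-style) recurrent cell over the inputs xs.

    h_t = tanh(w_h * h_{t-1} + w_x * x_t + b). The same weights are reused at
    every step, and h carries a summary of everything seen so far.
    """
    h = h0
    states = []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x + b)
        states.append(h)
    return states

def grad_last_wrt_first(states, w_h=0.8):
    """d h_T / d h_0 by the chain rule: product over t of w_h * (1 - h_t ** 2).

    With |w_h| < 1, every factor is smaller than 1 in magnitude, so the product
    shrinks geometrically as the sequence gets longer.
    """
    grad = 1.0
    for h in states:
        grad *= w_h * (1.0 - h * h)
    return grad

g5 = grad_last_wrt_first(rnn_states([1.0] * 5))
g50 = grad_last_wrt_first(rnn_states([1.0] * 50))
print(g5, g50)  # the 50-step gradient is many orders of magnitude smaller
```

With a recurrent weight above 1 and unsaturated activations, the same product can instead grow without bound, which is the exploding-gradient case.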

Long Short-Term Memory (LSTM) Networks (1997)

- LSTM networks, developed by Hochreiter and Schmidhuber, overcame critical limitations of standard RNNs.

- They introduced gating mechanisms that controlled information flow, allowing networks to maintain long-term dependencies and remember important information over time.

- LSTMs significantly improved generative tasks involving sequences, like natural language generation, speech synthesis, and video captioning.

- This architecture became foundational for many state-of-the-art text and speech generative models leading into the 2000s.
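The gating idea can be sketched as a single scalar LSTM step. The parameter layout and the hand-set values in the demo are assumptions chosen for illustration, not trained weights: with the forget gate held open, a value written at the first step survives 49 quiet steps almost unchanged; closing the forget gate erases it. The additive cell update (c = f * c_prev + i * g) is what lets information persist.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell; returns (h, c).

    params maps each gate name ("f", "i", "o", "g") to a hypothetical
    (weight on x, weight on h_prev, bias) triple.
    """
    def pre(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))    # forget gate: how much old cell state to keep
    i = sigmoid(pre("i"))    # input gate: how much of the candidate to write
    o = sigmoid(pre("o"))    # output gate: how much of the cell to expose
    g = math.tanh(pre("g"))  # candidate value
    c = f * c_prev + i * g   # additive update lets information persist
    h = o * math.tanh(c)
    return h, c

# Hand-set, illustrative parameters (not trained).
KEEP = {"f": (0.0, 0.0, 10.0), "i": (0.0, 0.0, 10.0),
        "o": (0.0, 0.0, 10.0), "g": (10.0, 0.0, 0.0)}
FORGET = dict(KEEP, f=(0.0, 0.0, -10.0))  # forget gate near 0: state is wiped each step

def final_cell_state(params, xs):
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return c

xs = [1.0] + [0.0] * 49  # one input "event", then 49 quiet steps
print(final_cell_state(KEEP, xs))    # stays near 1: the event is remembered
print(final_cell_state(FORGET, xs))  # near 0: the event is forgotten
```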

Influence of These Models on Generative Tasks

- Learning from Data: These neural models moved AI from rule-based systems to learning-based systems, enabling machines to generate new content based on learned patterns rather than fixed programming.

- Sequential Generation: RNNs and LSTMs particularly enabled the generation of sequential data such as paragraphs of text, music sequences, and time series forecasts.

- Context Awareness: The ability to retain contextual information improved the coherence and relevance of generated outputs, a necessity for meaningful content creation.

- Foundation for Modern Models: These early neural architectures laid the groundwork for subsequent transformer models and large language models dominating generative AI today.

- Broadened Applications: Improved sequence modeling expanded generative AI applications in chatbots, translation, creative writing, and audio-visual synthesis.

Breakthroughs in Generative Models (2000s–2010s)

Variational Autoencoders (VAEs) and Their Mechanism (2013)

- VAEs encode input data into a probabilistic latent space allowing smooth sampling and generation of new data.

- They combine neural networks with principles of variational Bayesian methods to learn data distributions.

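- VAEs are trained by maximizing a lower bound on the data likelihood (the evidence lower bound, or ELBO), which balances reconstruction quality against keeping the latent space close to a simple prior distribution; this supports controlled and diverse content generation.

- Useful for image, speech, and text generation, often with smooth latent spaces in which nearby points decode to similar outputs.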
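Two pieces of the VAE recipe are easy to show in isolation: the reparameterization trick used to sample a latent value z, and the KL-divergence term that keeps each encoded Gaussian close to a standard normal prior. This sketch assumes a single latent dimension and that an encoder (not shown) has already produced a mean mu and a log-variance log_var; the function names are hypothetical.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as z = mu + sigma * eps, with eps ~ N(0, 1).

    Because the randomness lives in eps, z is a differentiable function of
    mu and log_var, so the encoder that outputs them can be trained by
    gradient descent (the "reparameterization trick").
    """
    sigma = math.exp(0.5 * log_var)
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps

def kl_to_standard_normal(mu, log_var):
    """KL divergence KL(N(mu, sigma^2) || N(0, 1)) for one latent dimension.

    This term of the VAE objective pulls each encoded distribution toward the
    standard normal prior, so that sampling z ~ N(0, 1) later decodes sensibly.
    """
    return 0.5 * (mu * mu + math.exp(log_var) - log_var - 1.0)

rng = random.Random(0)
zs = [reparameterize(2.0, math.log(0.25), rng) for _ in range(10000)]
print(sum(zs) / len(zs))                 # close to mu = 2.0
print(kl_to_standard_normal(0.0, 0.0))   # 0.0: already a standard normal
print(kl_to_standard_normal(2.0, 0.0))   # 2.0: penalty for a shifted mean
```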

Introduction of Generative Adversarial Networks (GANs) by Ian Goodfellow (2014)

- GANs consist of two networks: a generator producing fake samples and a discriminator distinguishing real from fake.

- These networks are trained adversarially, improving generation quality via competition.

- GANs dramatically improved the realism of generated images, music, and video.
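The adversarial objective can be written as two loss functions over the discriminator’s output probabilities. This is a minimal sketch that assumes the discriminator outputs D(x) are already computed; the networks themselves and the alternating training loop are omitted, and the generator loss uses the common "non-saturating" variant rather than the original minimax form. The function names are illustrative.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator D.

    d_real: D's probabilities that real samples are real.
    d_fake: D's probabilities that generated samples are real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: the mean of -log D(G(z)).

    The generator lowers this loss by making D rate its samples as real.
    (The original minimax form minimizes log(1 - D(G(z))) instead; this
    variant gives stronger gradients early in training, when D wins easily.)
    """
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))    # low: D separates real from fake
print(discriminator_loss([0.5], [0.5]))              # 2 ln 2 (about 1.386): D is guessing
print(generator_loss([0.1]), generator_loss([0.9]))  # fooling D lowers G's loss
```

The 2 ln 2 case corresponds to the idealized equilibrium in which the generator’s samples are indistinguishable from real data and the best the discriminator can do is output 0.5 everywhere.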

Advantages and Impact of GANs

- Ability to generate highly realistic and high-resolution synthetic data.

- Applications in image synthesis, style transfer, data augmentation, and creative industries.

- Sparked a wave of research exploring variants like conditional GANs, CycleGAN, and StyleGAN.

- GANs accelerated progress in unsupervised learning and opened new frontiers in content creation.

Diffusion Models and Their Role (2015)

- Diffusion models are trained on a fixed process that gradually adds noise to data; a neural network learns to reverse it, so generation starts from pure noise and removes it step by step.

- These models offer stable training and produce diverse, high-quality images.

- They became popular for text-to-image generation in the early 2020s due to their ability to model complex distributions.
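The forward (noising) half of a diffusion model has a convenient closed form. For a per-step variance schedule beta_t, let abar_t be the running product of (1 - beta_t); a noisy version of clean data x0 at step t is then x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, with eps drawn from a standard normal. The sketch below assumes a linear schedule from 0.0001 to 0.02 over 1,000 steps (illustrative values that match a commonly used setting) and omits the learned denoising network, which is trained to predict eps from x_t and t. Function names are hypothetical.

```python
import math
import random

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_1..beta_T, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def alpha_bars(betas):
    """Cumulative products abar_t = prod over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def noisy_sample(x0, t, abar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps.

    Returns (x_t, eps). In training, a network sees (x_t, t) and learns to
    predict eps; generation runs that learned denoising in reverse from noise.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * xi + math.sqrt(1.0 - a) * ei for xi, ei in zip(x0, eps)]
    return xt, eps

betas = linear_beta_schedule()
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [1.0, -1.0]                              # a toy two-value "image"
x_early, _ = noisy_sample(x0, 10, abar, rng)  # still mostly signal
x_late, _ = noisy_sample(x0, 999, abar, rng)  # almost pure noise
print(abar[10], abar[999])                    # fraction of signal variance kept
```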

Transformer Architecture Proposed by Google Researchers (2017)

- Transformers replaced recurrence with self-attention, letting every position in a sequence attend to every other position and allowing the whole sequence to be processed in parallel during training.

- Enabled modeling of long-range dependencies in sequences efficiently.

- Formed the basis for breakthroughs in NLP with models like BERT and GPT.
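The core operation is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, as defined in the 2017 transformer paper. The pure-Python sketch below omits the learned projections, multiple heads, and masking of a real transformer layer; it only shows that each query mixes all value vectors at once, weighted by query-key similarity, with no step-by-step recurrence.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length float vectors.

    Each output row depends on its query and all keys/values, but not on the
    other output rows, so every row can be computed independently (in parallel).
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(attention(Q, K, V))  # leans toward the first value row: the query matches the first key
```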

Applications Emerging from These Innovations

- Enhanced image generation tools (DALL-E, StyleGAN) producing photorealistic visuals.

- Advanced natural language generation and understanding (GPT series) enabling fluent multi-turn dialogues, writing, and summarization.

- Cross-modal generative tasks combining text, images, and audio.

- Accelerated AI adoption in creative, entertainment, medical imaging, and design fields.

The Age of Large Language Models (2018 Onwards)

OpenAI’s GPT Series: GPT-1 (2018), GPT-2 (2019), GPT-3 (2020), GPT-4 (2023)

- GPT-1 (2018): The first model in OpenAI’s Generative Pre-trained Transformer (GPT) series combined unsupervised pre-training of a transformer on a large text corpus with supervised fine-tuning on specific tasks. It showed that generative pre-training on broad text substantially improves performance on downstream language tasks, marking a pivotal shift in NLP.

- GPT-2 (2019): With 1.5 billion parameters, GPT-2 generated noticeably more fluent and coherent paragraphs than its predecessor, showing surprisingly human-like language ability. OpenAI initially released only smaller versions of the model, citing concerns about misuse, which highlighted the model’s potential impact and risks.

- GPT-3 (2020): With 175 billion parameters, GPT-3 could perform numerous language tasks, including translation, summarization, and creative writing, from just a few examples in the prompt and without task-specific fine-tuning. Its ability to generate contextually appropriate and detailed text made it a breakthrough in AI’s practical utility.

- GPT-4 (2023): This model further improved language generation, handled longer contexts, added stronger safety measures, and accepted multimodal inputs (both text and images). At its release it was widely regarded as one of the most capable and versatile language models, pushing generative AI toward more general-purpose intelligence.

Capabilities and Significance of GPT Models

- GPT models revolutionized natural language generation by producing text that is highly coherent, contextually relevant, and flexible across diverse applications.

- They enabled a wide range of industries to automate content creation, from customer support and marketing to software coding and education.

- Their ability to generalize across tasks reduced the need for task-specific datasets, accelerating AI adoption and innovation.

- GPT models kept improving as model size, training data, and compute grew, and this scalability pushed AI research and deployment into new frontiers in natural language understanding.

DALL-E and Midjourney for Image Generation (2021–2022)

- DALL-E: OpenAI’s breakthrough text-to-image model that synthesizes complex images based on descriptive prompts, showcasing the potential for AI-driven creativity in visual arts.

- Midjourney: An AI image-generation platform gaining widespread popularity due to its ability to produce striking, artistically styled images, appealing to designers, artists, and marketers.

- Both tools brought generative AI’s creativity to the masses, letting people without traditional artistic skills create novel visual content.

Stable Diffusion’s Role in Open Source Evolution

- Stable Diffusion emerged as an open-source diffusion-based generative image model, allowing developers, researchers, and enthusiasts to freely use, modify, and deploy the technology.

- It sparked a vast ecosystem of AI art applications, plugins, and creative tools, fostering innovation and collaboration beyond corporate research labs.

- It helped balance commercial and community interests by promoting accessibility and transparency in generative AI technologies.

Impact on Creative Industries and Business

- Generative AI reshaped creative workflows by automating image, text, video, and audio production, significantly reducing time and resource costs.

- Businesses leveraged these tools for personalized marketing, rapid prototyping, media generation, and customer interaction enhancements.

- Creative professionals used generative AI as a collaborator, expanding artistic possibilities and redefining creative boundaries.

- It provoked discussions on copyright, ethical use, and the future role of human creativity in the age of AI.

Key Figures and Influencers in Generative AI

Ian Goodfellow—GANs Inventor and DeepMind Scientist

- Invented Generative Adversarial Networks (GANs) in 2014, a groundbreaking architecture that revolutionized generative AI by introducing adversarial training.

- His work enabled the creation of highly realistic synthetic images, videos, and other data, rapidly advancing the field.

- Goodfellow’s contributions extend beyond GANs into AI security and understanding model vulnerabilities.

- Affiliated with leading AI organizations such as Google Brain and DeepMind, influencing both academia and industry innovation.

Fei-Fei Li—ImageNet and Human-Centered AI Advocate

- Led the creation of ImageNet, a large-scale dataset crucial for training and benchmarking deep learning models in computer vision.

- Instrumental in enabling breakthroughs in visual AI: ImageNet fueled the deep learning advances in image recognition (notably AlexNet in 2012) on which later image generation models built.

- Advocates for AI that focuses on ethical, responsible, and human-centered design.

- Co-director of Stanford’s Human-Centered AI Institute, promoting diverse, interdisciplinary AI research.

Andrew Ng—Pioneer in AI Education and DeepLearning.AI Founder

- Renowned for accessible, high-impact AI education through platforms like Coursera, significantly expanding AI literacy worldwide.

- Founder of DeepLearning.AI, democratizing deep learning education and fostering a new generation of AI practitioners.

- Co-founded Google Brain and led AI projects at Baidu, driving practical AI and deep learning applications.

- Promotes AI’s beneficial use across industries and emphasizes reskilling to prepare the workforce for AI-integrated futures.

Other Influencers Shaping Generative AI Research and Popularization

- Yann LeCun: Pioneer of convolutional neural networks and a key figure in deep learning and generative AI research as Chief AI Scientist at Facebook (now Meta).

- Daphne Koller: A leader in probabilistic graphical models and AI education, co-founder of Coursera, and advocate of AI in healthcare.

- Ruslan Salakhutdinov: AI researcher known for advances in unsupervised and generative models.

- Sam Altman: CEO of OpenAI, driving the commercial development and deployment of leading generative AI models like GPT-4.

- Demis Hassabis: CEO of Google DeepMind, pushing AI research boundaries, including generative AI capabilities.

Recent Trends and Innovations in Generative AI (2023–2025)

Emergence of Agentic AI (Autonomous Task Agents)

- Development of AI agents capable of autonomously performing complex sequences of tasks with minimal human intervention.

- These agents integrate generative models with planning, reasoning, and interaction capabilities, fostering more practical and independent AI assistants.

- Used in customer service, autonomous research, content creation, and software automation, expanding generative AI’s functional scope.

Retrieval-Augmented Generation (RAG) to Reduce Hallucination Problems

- RAG combines generative models with external knowledge retrieval, grounding AI outputs in documents fetched from a curated, up-to-date source such as a company knowledge base or search index.

- This approach can substantially improve factual accuracy and relevance while reducing hallucinations (cases where generative models produce misleading or false content), although it does not eliminate them entirely.

- Widely adopted in enterprise AI solutions for trustworthy insights, knowledge base querying, and decision support systems.
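The retrieve-then-generate flow can be sketched without any model at all. This sketch assumes a tiny in-memory document list and uses bag-of-words cosine similarity as a stand-in for the learned embeddings and vector indexes that production RAG systems typically use; the prompt wording and function names are assumptions, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Ground the model: put the retrieved passages in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

DOCS = [  # hypothetical knowledge base
    "ELIZA was created by Joseph Weizenbaum at MIT.",
    "GANs were introduced by Ian Goodfellow and colleagues in 2014.",
    "The transformer architecture was proposed by Google researchers in 2017.",
]
print(build_prompt("Who introduced GANs?", DOCS, k=1))
```

The instruction to answer only from the context is what ties the model’s output to the retrieved passages; a real system would send this prompt to an LLM and might also return the sources alongside the answer.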

Cost Reduction and Speed Improvements in Deploying LLMs

- Advances in model architecture, quantization, pruning, and hardware acceleration have drastically lowered computational costs.

- Techniques such as parameter-efficient fine-tuning enable faster, cheaper custom model deployments without large-scale retraining.

- Enables broader adoption in small-to-medium enterprises and edge devices, democratizing access to generative AI capabilities.
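Quantization is one of the simplest of these cost levers to illustrate. The sketch below shows symmetric per-tensor int8 quantization, one common scheme among several (assumed here for simplicity): every weight is divided by a single scale factor, rounded to an integer in [-127, 127], and multiplied back when needed. The rounding error per weight is at most half the scale. The weights and function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization.

    Maps floats to integers in [-127, 127] with one shared scale factor, so
    each weight needs 1 byte instead of 4 (float32) or 2 (float16).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [qi * scale for qi in q]

weights = [0.5, -1.27, 0.003, 1.0]  # hypothetical weights
codes, scale = quantize_int8(weights)
print(codes, scale)
print(dequantize(codes, scale))     # close to the originals, within scale / 2
```

As rough arithmetic, a model with 7 billion parameters needs about 14 GB for its weights in float16 (2 bytes each) but about 7 GB in int8 (1 byte each), ignoring the small overhead of the scale factors.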

Real-world Enterprise Adoption and Practical Applications

- Generative AI has been integrated into diverse industries, including finance, healthcare, marketing, legal, and manufacturing, for automation, personalization, and innovation.

- Use cases include automated report generation, medical diagnostics support, personalized marketing content, code generation, and design prototyping.

- Hybrid human-AI workflows enhance productivity while maintaining quality control and ethical oversight.

AI Governance and Regulation Moves in 2023 and Beyond

- Governments and organizations worldwide have advanced regulatory frameworks focusing on transparency, accountability, and ethical use of generative AI; the European Union’s AI Act, adopted in 2024, is a prominent example.

- Initiatives include data privacy protections, bias mitigation mandates, intellectual property guidelines, and safety standards.

- Collaboration across policymakers, researchers, and industry encourages responsible AI adoption balancing innovation with societal concerns.

Societal Impact and Ethical Considerations

How Generative AI is Transforming Jobs and Creativity

- Automation of routine tasks is reshaping job roles, augmenting human workers rather than fully replacing them in many cases.

- Generative AI tools enhance creativity by providing new ways to brainstorm, design, write, and produce content faster.

- New career opportunities are emerging in AI development, prompt engineering, and AI-assisted creative industries.

- Potential displacement risks in certain sectors necessitate reskilling and workforce adaptation.

Copyright and Intellectual Property Issues with AI-Generated Content

- Legal ambiguity surrounds both the ownership of AI-created works and the training of models on copyrighted data, often without creators’ explicit consent.

- Questions arise over fair use, derivative works, and the rights of creators whose data trained these models.

- Courts and policymakers worldwide are debating frameworks to balance innovation with protection of original content creators.

- Emerging solutions include watermarking AI-generated content and attribution guidelines.

Ethical Frameworks and Responsible AI Practices

- Emphasis on developing transparent, explainable AI systems to ensure accountability and user trust.

- Guidelines focus on minimizing bias, ensuring fairness, and safeguarding privacy in training data and outputs.

- Responsible AI involves ongoing monitoring to prevent misuse, misinformation, and harmful content generation.

- Industry collaboration on best practices and self-regulation is critical to ethical AI deployment.

Government and Organizational Initiatives on AI Ethics

- Many governments have launched AI ethics councils and regulatory bodies to oversee safe AI integration.

- International initiatives such as the OECD AI Principles advocate for human-centered, trustworthy AI development.

- Organizations like the Partnership on AI and IEEE are pioneering frameworks for ethical AI standards.

- Policy efforts prioritize balancing innovation incentives with societal safeguards around transparency, safety, and equity.

The Future of Generative AI

Predictions about AI in 2030

- AI models will become vastly more capable, approaching human-level general intelligence in many domains.

- Generative AI will enable seamless human-AI collaboration, acting as creative partners and intelligent assistants.

- Real-time, context-aware content generation will be mainstream across industries, from entertainment to education and healthcare.

- AI will evolve toward more autonomous decision-making systems with improved interpretability and safety.

Integration with Other Emerging Technologies

- Augmented Reality (AR) and Virtual Reality (VR): Generative AI will create dynamic, personalized immersive experiences, environments, and avatars.

- Internet of Things (IoT): AI-driven generative models will synthesize data from connected devices to generate predictive analytics and automated responses.

- Edge Computing: Smaller, energy-efficient generative models will operate locally on devices, improving privacy and responsiveness.

- Quantum Computing: Still largely experimental, but it may eventually accelerate certain AI training and inference workloads, unlocking new generative possibilities.

Ongoing Challenges: Bias, Scalability, and Trust

- Addressing inherent biases in training data remains critical to ensuring fair and equitable AI outcomes.

- Scaling models sustainably to handle increasing data volumes, complexity, and user demands while reducing energy consumption.

- Building user trust through transparency, explainability, and robust safeguards against misuse and inaccurate content generation.

- Legal and ethical frameworks must evolve in parallel to manage new risks and societal impacts.

Potential to Revolutionize Various Domains

- Healthcare: Accelerated drug discovery, personalized treatment plans, and realistic medical simulations.

- Education: Tailored learning experiences with AI-generated teaching materials and tutoring.

- Creative Arts: New art forms combining human and AI creativity in music, literature, design, and film.

- Business and Manufacturing: Automated content generation, process optimization, and predictive maintenance.

- Data Science and Research: Generative models aiding hypothesis generation, simulations, and data synthesis.

Frequently Asked Questions (FAQs)

History and Evolution of Generative AI

1. What is generative AI?

2. When did generative AI start?

3. Who invented generative adversarial networks (GANs)?

4. What technologies influence generative AI?

Key technologies include neural networks, transformers, GANs, VAEs, and diffusion models.

5. What is the significance of GPT-3?

6. How does generative AI impact creative industries?

7. What is ELIZA in AI history?

8. What role do transformers play in generative AI?

9. Are there any ethical concerns with generative AI?

10. How is generative AI used in Hyderabad?

11. What is Retrieval-Augmented Generation?

12. What is the future of generative AI?

13. What is a variational autoencoder (VAE)?

A VAE encodes data into a latent space for generating diverse new outputs probabilistically.

14. How does diffusion modeling work?

15. Which model introduced the attention mechanism?

16. How does GPT-4 differ from previous versions?

17. What are some open source generative AI tools?

Stable Diffusion is a popular open source image generation model.

18. How can beginners start learning generative AI?

19. What jobs are emerging due to generative AI?

20. What limitations did early AI have?

21. Why is AI trust important?

22. How is generative AI used in marketing?

23. What is agentic AI?

24. What is the importance of ImageNet?

25. How is copyright affected by AI-generated content?

26. What is prompt engineering?

27. How do enterprises benefit from generative AI?

28. What is the AI winter?

29. What skills help in a generative AI career?

30. How do governments regulate generative AI?